How to prepare unstructured data for AI and analytics in Databricks

Large data lakes typically house a combination of structured and unstructured data. As the amount of unstructured data grows, you need a way to effectively prepare and analyze your unstructured datasets.

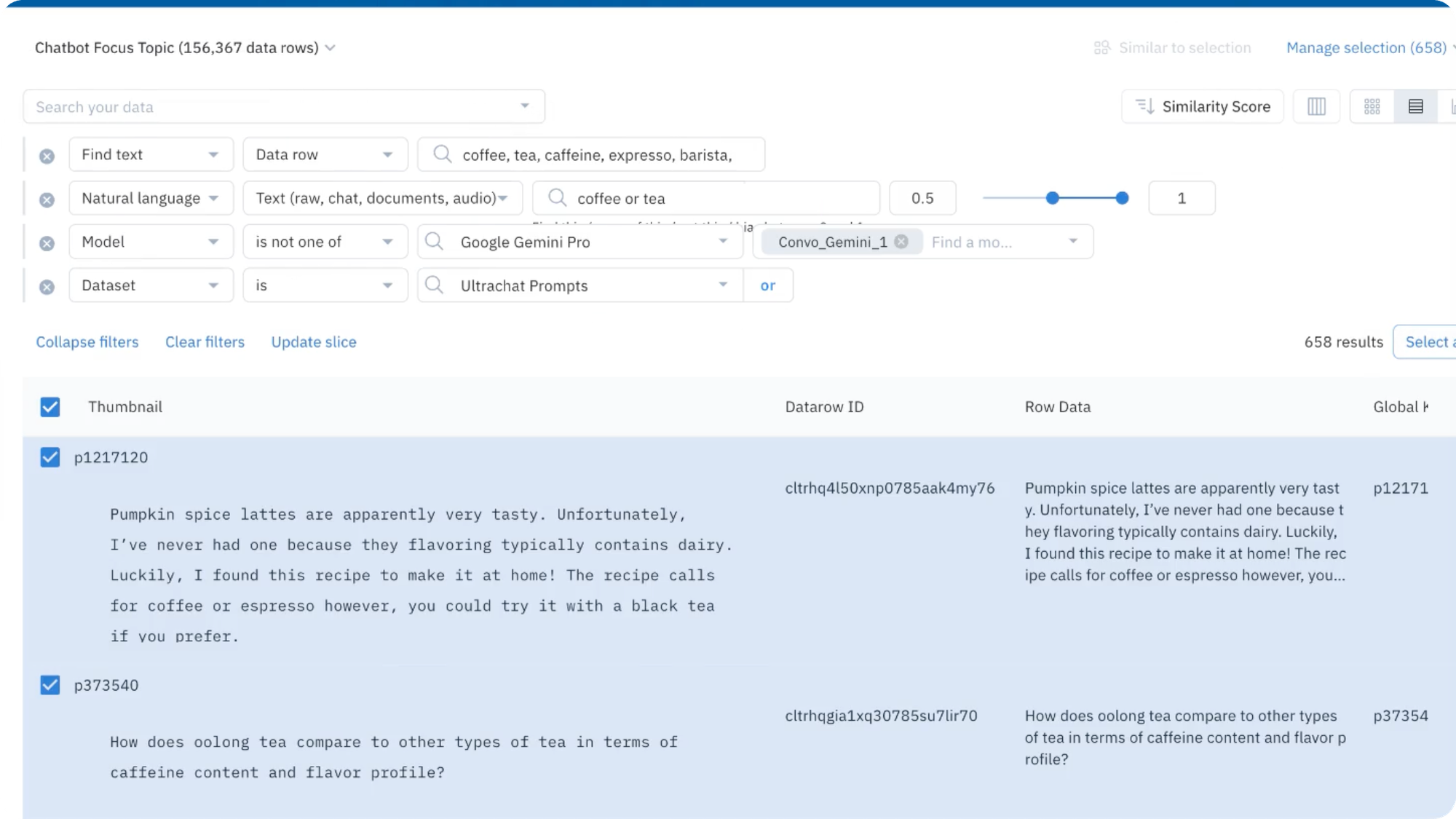

The Databricks and Labelbox partnership gives you an end-to-end environment for unstructured data workflows – a query engine built around Delta Lake, fast annotation tools, and a powerful machine learning compute environment. With the Labelbox Connector for Databricks and the LabelSpark API, you can easily integrate the two platforms and add structure to your data.

Start with unstructured data in your data lake, pass it to Labelbox for annotation, and load your annotations into Databricks for analysis.

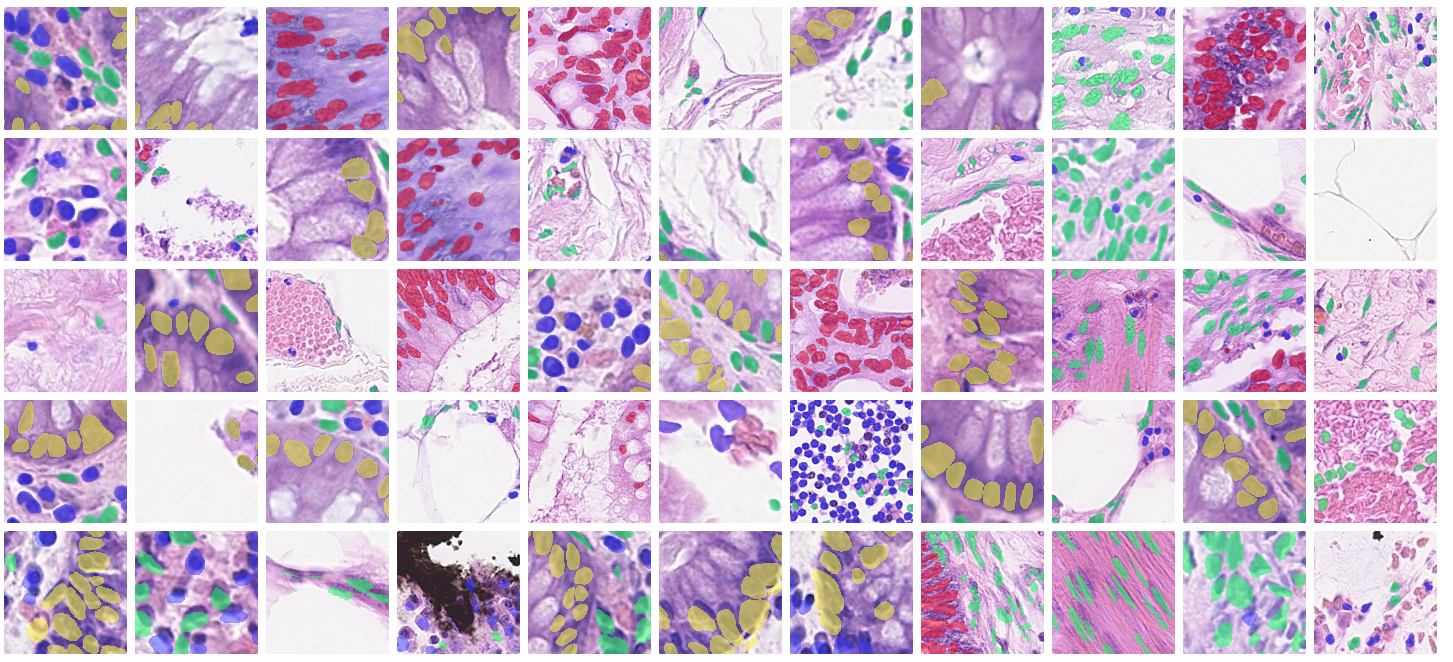

Labelbox empowers you to quickly explore, organize, and annotate a variety of unstructured data from your data lake, across modalities such as images, video, text, and geospatial tiled imagery.

What will you learn in this guide?

In this guide, you’ll learn how to use the Labelbox Connector for Databricks to prepare unstructured data for AI and analytics. This includes:

- Requirements to get started

- How to create data rows with the Labelbox Connector for Databricks

- How to create data rows with metadata, annotations, and attachments

Check out this end-to-end video demo of this workflow.

Requirements to get started:

You can find the necessary requirements for all data rows in this notebook.

Requirements:

- A `row_data` column: this column must contain URLs that point to the assets to be uploaded
- Either a `dataset_id` column or a `dataset_id` input argument
  - If uploading to multiple datasets, provide a `dataset_id` column
  - If uploading to one dataset, provide a `dataset_id` input argument (this can still be a column if it's already in your dataframe)

Recommended:

- A `global_key` column: this column contains unique identifiers for your data rows
  - If none is provided, it defaults to your `row_data` column
- An `external_id` column: this column contains non-unique identifiers for your data rows
  - If none is provided, it defaults to your `global_key` column

Optional:

- Either a `project_id` column or a `project_id` input argument
  - If batching to multiple projects, provide a `project_id` column
  - If batching to one project, provide a `project_id` input argument (this can still be a column if it's already in your dataframe)
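The column rules above can be expressed as a small pre-flight check you might run before handing a dataframe to the connector. The sketch below is a hypothetical helper, `prepare_data_rows`, and is not part of the Labelbox Connector or the LabelSpark API. It treats each dataframe row as a plain Python dict and applies the defaults listed: `global_key` falls back to `row_data`, and `external_id` falls back to `global_key`. It also assumes that when both a column value and an input argument exist for `dataset_id` or `project_id`, the per-row column value wins.

```python
from typing import Any, Optional
from urllib.parse import urlparse


def prepare_data_rows(
    rows: list[dict[str, Any]],
    dataset_id: Optional[str] = None,
    project_id: Optional[str] = None,
) -> list[dict[str, Any]]:
    """Hypothetical pre-flight check mirroring the column rules in this guide.

    Each row is a dict standing in for one dataframe row.
    """
    prepared = []
    for i, row in enumerate(rows):
        # Required: row_data must hold a URL (anything with a scheme, e.g. https://, s3://).
        row_data = row.get("row_data")
        if not row_data or not urlparse(str(row_data)).scheme:
            raise ValueError(f"row {i}: row_data must be a URL")

        # Required: dataset_id from the column, else from the input argument
        # (assumption: a per-row column value takes precedence).
        row_dataset = row.get("dataset_id") or dataset_id
        if not row_dataset:
            raise ValueError(f"row {i}: no dataset_id column value or argument")

        # Recommended: global_key defaults to row_data,
        # and external_id defaults to global_key.
        global_key = row.get("global_key") or row_data
        external_id = row.get("external_id") or global_key

        out = {
            "row_data": row_data,
            "dataset_id": row_dataset,
            "global_key": global_key,
            "external_id": external_id,
        }
        # Optional: project_id, column value first, then the input argument.
        row_project = row.get("project_id") or project_id
        if row_project:
            out["project_id"] = row_project
        prepared.append(out)
    return prepared


rows = [
    {"row_data": "https://example.com/a.jpg"},
    {"row_data": "https://example.com/b.jpg", "global_key": "b-001"},
]
prepared = prepare_data_rows(rows, dataset_id="my-dataset")
```

Here the first row gets its URL as both `global_key` and `external_id`, while the second row's `external_id` defaults to its explicit `global_key`. Failing fast on a missing URL or dataset keeps errors in your notebook rather than partway through an upload.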

Creating data rows with the Labelbox Connector for Databricks

Once you have set up the above requirements, you can create data rows with the Labelbox Connector for Databricks.

Follow the steps outlined in this notebook to create data rows in Labelbox.
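When rows target several datasets (the `dataset_id` column case), an upload naturally splits into one batch per dataset. The following is a minimal sketch, assuming each row is already a dict with `row_data`, `global_key`, and `dataset_id` keys. The helper name `group_by_dataset` is hypothetical and not a connector function. Because the guide describes `global_key` as a unique identifier, the sketch also rejects duplicate keys within the input before anything is sent.

```python
from collections import defaultdict
from typing import Any


def group_by_dataset(rows: list[dict[str, Any]]) -> dict[str, list[dict[str, Any]]]:
    """Hypothetical helper: split rows into one upload batch per dataset_id.

    Raises ValueError on duplicate global keys, since global_key is meant
    to uniquely identify each data row.
    """
    seen: set[str] = set()
    batches: dict[str, list[dict[str, Any]]] = defaultdict(list)
    for row in rows:
        key = row["global_key"]
        if key in seen:
            raise ValueError(f"duplicate global_key: {key}")
        seen.add(key)
        # dataset_id only decides which batch a row joins; the rest is the payload.
        payload = {k: v for k, v in row.items() if k != "dataset_id"}
        batches[row["dataset_id"]].append(payload)
    return dict(batches)


rows = [
    {"row_data": "https://example.com/a.jpg", "global_key": "a", "dataset_id": "ds1"},
    {"row_data": "https://example.com/b.jpg", "global_key": "b", "dataset_id": "ds2"},
    {"row_data": "https://example.com/c.jpg", "global_key": "c", "dataset_id": "ds1"},
]
batches = group_by_dataset(rows)
for dataset, batch in batches.items():
    print(dataset, [r["global_key"] for r in batch])
```

Each batch could then be passed to an upload call such as the Labelbox Python SDK's `Dataset.create_data_rows`. That call is outside this sketch, so check the SDK reference and the notebook for the exact payload format.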

An end-to-end demo: Creating data rows with metadata, annotations, and attachments with the Labelbox Connector for Databricks

Beyond creating basic data rows, you can use the connector to create data rows with attributes such as metadata, annotations, and attachments.

Follow the steps in this notebook to learn more about the requirements for creating metadata, annotations, and attachments and the steps needed to create data rows with all three attributes in Labelbox.
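A common preparation step is turning flat dataframe columns into the nested structure a data row with metadata and attachments needs. The sketch below assumes a hypothetical column-naming convention (`metadata_<name>` and `attachment_<TYPE>`) invented for this example. The output keys (`metadata_fields` with `name`/`value` entries and `attachments` with `type`/`value` entries) are modeled on the Labelbox Python SDK's data-row dictionaries as we understand them, so verify them against the notebook before relying on them. Annotations follow a separate import path and are not covered here.

```python
from typing import Any

# Hypothetical column-naming convention for this sketch only:
#   metadata_<field name>   -> one metadata field on the data row
#   attachment_<TYPE>       -> one attachment of that type (e.g. TEXT)
METADATA_PREFIX = "metadata_"
ATTACHMENT_PREFIX = "attachment_"


def to_data_row(row: dict[str, Any]) -> dict[str, Any]:
    """Turn one flat dataframe row into a nested data-row dict (assumed layout)."""
    data_row: dict[str, Any] = {}
    metadata = []
    attachments = []
    for column, value in row.items():
        if value is None:
            continue  # skip empty cells rather than sending null values
        if column.startswith(METADATA_PREFIX):
            metadata.append({"name": column[len(METADATA_PREFIX):], "value": value})
        elif column.startswith(ATTACHMENT_PREFIX):
            attachments.append({"type": column[len(ATTACHMENT_PREFIX):], "value": value})
        else:
            # Any other column (row_data, global_key, ...) passes through unchanged.
            data_row[column] = value
    if metadata:
        data_row["metadata_fields"] = metadata
    if attachments:
        data_row["attachments"] = attachments
    return data_row


example = {
    "row_data": "https://example.com/a.jpg",
    "global_key": "a",
    "metadata_camera": "front",
    "attachment_TEXT": "Shot on location",
    "attachment_IMAGE": None,
}
print(to_data_row(example))
```

Keeping the convention in column names means the same dataframe can carry plain fields, metadata, and attachments side by side, and the conversion stays a pure function you can test in a notebook cell before uploading.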

You can also refer to the links below for specific instructions on how to:

If you have questions or encounter issues with the Labelbox Connector for Databricks, please reach out to Labelbox Support.

In the meantime, you can learn more about how Burberry is harnessing the power of Labelbox and Databricks to curate their strategic marketing assets.
