OpenAI o3-mini

OpenAI o3-mini is a powerful and fast model that pushes the boundaries of what small models can achieve. It delivers exceptional STEM capabilities, with particular strength in science, math, and coding, while maintaining the low cost and reduced latency of OpenAI o1-mini.

Intended Use

OpenAI o3-mini is designed for tasks that require fast, efficient reasoning in technical domains. It is optimized for STEM (science, technology, engineering, and mathematics) problem-solving and delivers precise answers faster than o1-mini.

Developers can use it in applications that rely on function calling, Structured Outputs, and developer messages. It is particularly useful where both speed and accuracy matter, such as coding, logical problem-solving, and complex technical questions.
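As a rough illustration of the function-calling and structured-output features mentioned above, the sketch below builds two request bodies for OpenAI's Chat Completions endpoint. It is a minimal sketch, assuming that API's `tools`, `response_format`, and `developer`-role message conventions; the tool `evaluate_expression` and the `math_answer` schema are hypothetical examples, and the code only constructs and prints JSON without sending anything.

```python
import json

MODEL = "o3-mini"


def function_calling_request(question: str) -> dict:
    """Build a Chat Completions request body that offers the model one tool."""
    return {
        "model": MODEL,
        "messages": [
            # Developer messages carry instructions for reasoning models.
            {"role": "developer", "content": "Use the calculator tool for arithmetic."},
            {"role": "user", "content": question},
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    # Hypothetical tool: the application, not the model, implements it.
                    "name": "evaluate_expression",
                    "description": "Evaluate an arithmetic expression and return the result.",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "expression": {"type": "string", "description": "e.g. '3 * (4 + 5)'"},
                        },
                        "required": ["expression"],
                        "additionalProperties": False,
                    },
                },
            }
        ],
    }


def structured_output_request(question: str) -> dict:
    """Build a request body whose reply must match a JSON Schema."""
    # Hypothetical schema for a numeric answer plus a short explanation.
    schema = {
        "type": "object",
        "properties": {
            "answer": {"type": "number"},
            "explanation": {"type": "string"},
        },
        # Strict mode expects every property to be required
        # and additionalProperties to be false.
        "required": ["answer", "explanation"],
        "additionalProperties": False,
    }
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": question}],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "math_answer", "strict": True, "schema": schema},
        },
    }


if __name__ == "__main__":
    print(json.dumps(function_calling_request("What is 17 * 23?"), indent=2))
    print(json.dumps(structured_output_request("Solve 2x + 3 = 11."), indent=2))
```

In a real application these dictionaries would be passed to an API client. When the model responds with a tool call, the application runs `evaluate_expression` itself and returns the result to the model in a follow-up `tool` message.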

Performance

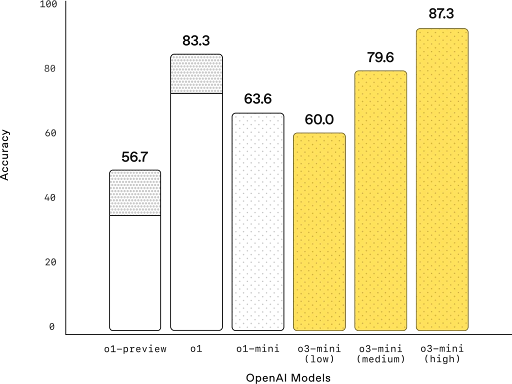

OpenAI o3-mini improves on its predecessor, o1-mini, in both speed and accuracy on STEM tasks. In A/B testing, its average response time was 24% lower than o1-mini's (7.7 seconds vs. 10.16 seconds).
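The 24% figure is the relative reduction in average response time. A quick check using only the two averages quoted above:

```python
def percent_faster(old_seconds: float, new_seconds: float) -> float:
    """Percentage reduction in average response time relative to the old model."""
    return (old_seconds - new_seconds) / old_seconds * 100


o1_mini_avg = 10.16  # reported average response time for o1-mini, in seconds
o3_mini_avg = 7.7    # reported average response time for o3-mini, in seconds

reduction = percent_faster(o1_mini_avg, o3_mini_avg)
print(f"{reduction:.1f}% lower average response time")  # prints: 24.2% lower average response time
```

Expressed as a speed ratio instead, 10.16 / 7.7 ≈ 1.32, so the same measurements could also be described as roughly 1.3× faster; the 24% figure refers to the reduction in waiting time.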

o3-mini's answers are also clearer and more accurate: expert testers observed 39% fewer major errors on difficult real-world questions and preferred its responses over o1-mini's 56% of the time. With medium reasoning effort, it matches the performance of OpenAI o1 on challenging reasoning evaluations, including AIME and GPQA.

Limitations

No Vision Capabilities: o3-mini does not support visual reasoning, so it is not suitable for tasks that involve images. OpenAI recommends continuing to use o1 for visual reasoning tasks.

Highly Complex Reasoning: Although o3-mini performs well on most STEM tasks, it may still lag behind larger models on the most complex reasoning problems.

Accuracy in Specific Domains: While o3-mini excels in technical domains, it might not always match the performance of specialized models in certain niche areas, particularly those outside of STEM.

Potential Trade-Off Between Speed and Accuracy: Users can choose low, medium, or high reasoning effort. Higher effort generally improves accuracy on hard problems but lengthens response times and consumes more reasoning tokens.

Limited Fine-Tuning: Although o3-mini is optimized for general STEM tasks, achieving optimal results in more specialized areas may require fine-tuning for the specific use case.
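The speed/accuracy trade-off described in the limitations above is chosen per request. The sketch below assumes the Chat Completions `reasoning_effort` parameter (`low`, `medium`, `high`) and `max_completion_tokens`; the `pick_effort` routing rule and the 4096-token default are hypothetical choices for illustration, not OpenAI recommendations. As before, it only builds request bodies.

```python
import json

# Effort levels accepted by o3-mini's reasoning_effort parameter.
EFFORT_LEVELS = ("low", "medium", "high")


def reasoning_request(prompt: str, effort: str = "medium",
                      max_completion_tokens: int = 4096) -> dict:
    """Build a Chat Completions request body with an explicit reasoning effort."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "messages": [{"role": "user", "content": prompt}],
        # Higher effort lets the model spend more hidden reasoning tokens:
        # usually more accurate on hard problems, but slower and costlier.
        "reasoning_effort": effort,
        # Upper bound on generated tokens, counting reasoning and visible output.
        # The 4096 default is an arbitrary, hypothetical choice.
        "max_completion_tokens": max_completion_tokens,
    }


def pick_effort(latency_sensitive: bool, hard_problem: bool) -> str:
    """Hypothetical routing policy: trade latency for accuracy per request."""
    if latency_sensitive and not hard_problem:
        return "low"
    if hard_problem and not latency_sensitive:
        return "high"
    return "medium"


if __name__ == "__main__":
    for latency_sensitive, hard in [(True, False), (False, True), (True, True)]:
        effort = pick_effort(latency_sensitive, hard)
        print(json.dumps(reasoning_request("Prove that sqrt(2) is irrational.", effort)))
```

Keeping the routing decision in one small function makes it easy to tune later, for example by measuring latency and error rates at each effort level on your own workload.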

Citation

https://openai.com/index/openai-o3-mini/
