Use case

Multimodal reasoning

Unlock a new generation of AI capabilities by generating high-quality data and performing expert-led human evaluations to train your models on text, images, video, and audio data

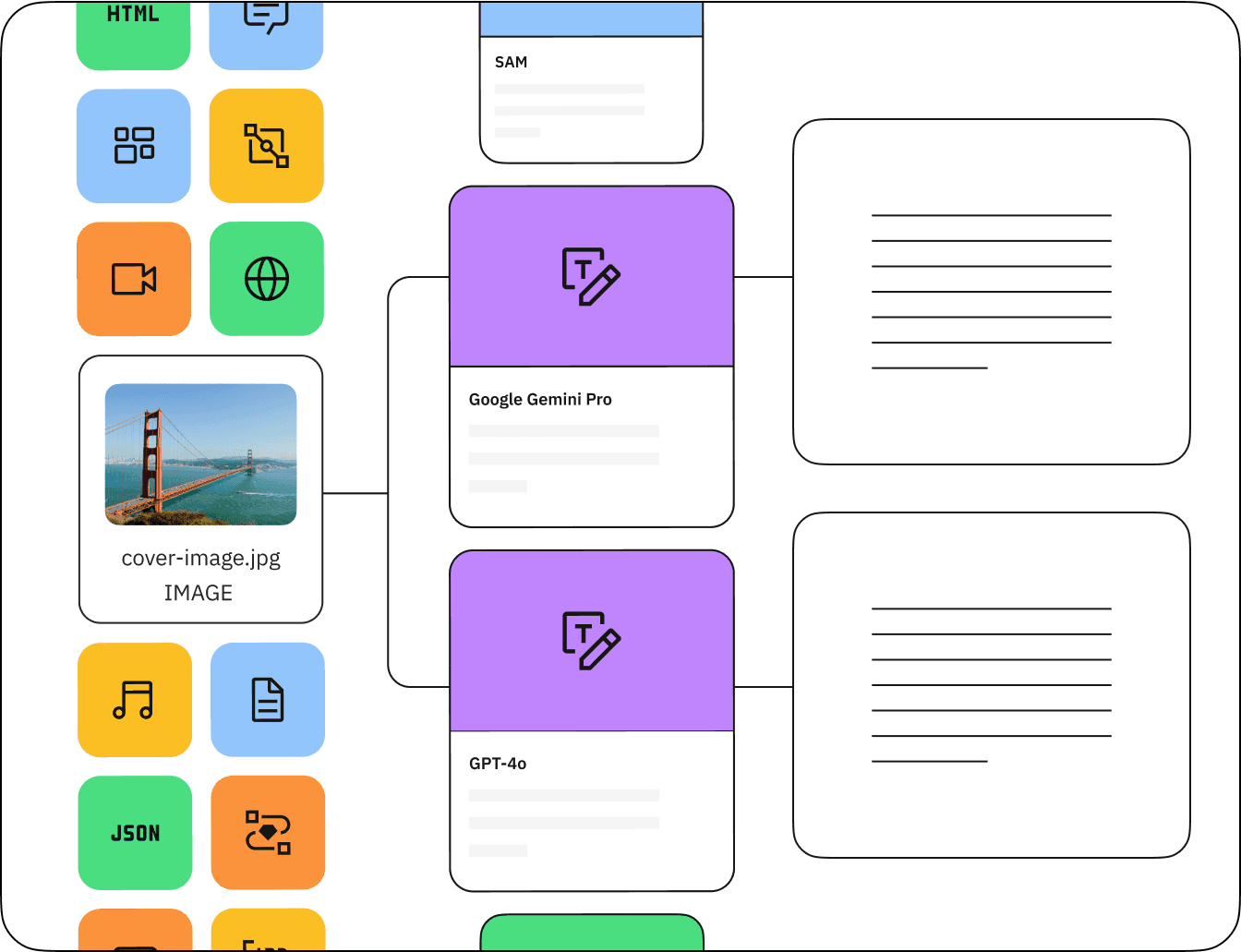

Why Labelbox for multimodal reasoning

Increase data quality

Generate high-quality data by combining advanced tooling, humans, AI, and on-demand services in a unified solution.

Customize evaluation metrics

Tailor your workstream to evaluate code functionality, style, efficiency, security or more with custom annotations.

Access coding experts on-demand

Access coding experts to provide tailored support, from evaluating code correctness to enforcing style guidelines.

Collaborate in real-time

Enjoy direct access to internal and external labelers with real-time feedback on labels and quality via Labelbox platform.

Understanding the multimodal landscape

Multimodal reasoning represents a significant leap forward in AI use cases, enabling machines to comprehend the world through a combination of text, images, video, and audio. This capability opens the doors to new virtual assistants, content creation, education platforms, and more.

Challenges of multimodal reasoning

Building effective multimodal AI models requires diverse datasets that encompass a wide range of modalities. However, collecting, annotating, and managing such data can be complex and time-consuming without the right tools or human experts available to capture the nuances of audio, video, and images.

Build next-generation AI with Labelbox

Labelbox has a long history of supporting complex image, text, audio, and video labeling with our industry-leading software. Our platform enables seamless collaboration, efficient annotation workflows, and real-time quality control to help you build state-of-the-art multimodal models.

Customer spotlight

Labelbox's intuitive tooling coupled with post-training labeling services offered a collaborative environment where Speak's internal team, along with external data annotators, could work together seamlessly. Learn more about how Speak uses Labelbox to improving the quality and efficiency of their data labeling.

Learn more >