Labelbox•July 24, 2025

Teaching agents to use tools with human supervision: MCP support now available

AI agents are increasingly capable of executing real-world tasks by interacting with tools and systems. Whether it’s a customer support bot or a productivity assistant, modern agents rely on tool use, multi-step reasoning, and human input to be effective. This emerging paradigm requires agents not only to generate accurate responses but also to act within complex environments, making judgment calls, adapting to context, and handling ambiguity much like a human assistant would.

However, these agents remain fragile and can easily fail on tasks that are trivial for humans. To build robust, generalizable systems, we need human-in-the-loop evaluation data: grounded assessments of whether an agent completed a task successfully, along with targeted corrections when it fails.

That’s where Labelbox’s MCP support comes in. Our multimodal chat (MMC) editor now integrates with an MCP server, allowing AI teams to inspect, label, and edit agent-tool interactions in context. This makes it easier to evaluate whether agents are using tools correctly, identify failure modes, and apply targeted corrections, all within a single, streamlined workflow.

Why tool integration matters

Tool use allows LLMs to overcome inherent limitations such as poor arithmetic, outdated knowledge, or lack of system access. It also unlocks new workflows where agents can:

- Query live databases

- Trigger APIs and services

- Interact with user interfaces

But to evaluate these behaviors effectively, we need annotated examples of tool use, including:

- Whether the agent used the correct tool

- Whether tool inputs were valid

- Whether the outcome met task expectations
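One way to capture these three judgments for each tool call is a small structured record. The sketch below is a hypothetical schema: the `ToolUseLabel` class and its field names are illustrative assumptions, not Labelbox's export format.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class ToolUseLabel:
    """Hypothetical record of one human judgment about an agent's tool call."""
    expected_tool: str            # tool the annotator says should have been used
    called_tool: str              # tool the agent actually invoked
    inputs_valid: bool            # were the arguments well-formed and correct?
    outcome_met: bool             # did the result satisfy the task?
    notes: Optional[str] = None   # free-text rationale from the annotator

    @property
    def correct_tool(self) -> bool:
        return self.expected_tool == self.called_tool

    @property
    def passed(self) -> bool:
        # A call passes only if all three checks from the list above hold.
        return self.correct_tool and self.inputs_valid and self.outcome_met


label = ToolUseLabel(expected_tool="multiply", called_tool="add",
                     inputs_valid=True, outcome_met=False,
                     notes="Agent added instead of multiplying.")
print(label.passed)                  # False
print(asdict(label)["called_tool"])  # add
```

Keeping the three checks as separate fields, rather than a single pass/fail flag, makes it possible to tell whether a failure came from tool selection, argument construction, or the outcome itself.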

Until now, labeling such interactions was difficult. Labelbox changes that by integrating tool calling directly into the core annotation workflow, enabling precise evaluation and human feedback for tool-augmented agents.

Integrating agentic tools into Labelbox

Setting up an MCP server

Labelbox uses the Model Context Protocol (MCP) to connect to external tools. You can set up a server with FastMCP, a Python framework for building MCP servers and defining tools.

Here’s an example of a simple tool that multiplies two numbers:

```python
from fastmcp import FastMCP

# Create an MCP server; the name is shown to connecting clients
mcp = FastMCP("Demo 🚀")


# Register the function as an MCP tool; the docstring becomes its description
@mcp.tool
def multiply(a: float, b: float) -> float:
    """Multiply two numbers"""
    return a * b


if __name__ == "__main__":
    # Serve the tool over HTTP on port 3131
    mcp.run(transport="http", port=3131)
```

While multiplication is trivial for humans, it's a known weak spot for LLMs, making it a great candidate for an MCP-based tool. Tools like this can also simulate business systems, retrieve information, or execute code.

Once the server works locally, deploy it to a publicly accessible endpoint so that Labelbox can call it.
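When Labelbox, or any MCP client, calls your deployed server, each tool invocation travels as a JSON-RPC 2.0 message with the method `tools/call`, as defined in the MCP specification. The sketch below only builds such a message with the standard library so you can see its shape. It does not send anything, a real client must first complete the MCP `initialize` handshake, and the helper name `tools_call_request` is hypothetical.

```python
import json
from itertools import count

# JSON-RPC request ids must be unique within a session
_ids = count(1)


def tools_call_request(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# The arguments must match the parameter names of the server-side function
print(tools_call_request("multiply", {"a": 1234.5, "b": 678.9}))
```

Per the specification, the server's reply is a JSON-RPC response whose `result` carries the tool output in a `content` field.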

Configure your Labelbox project

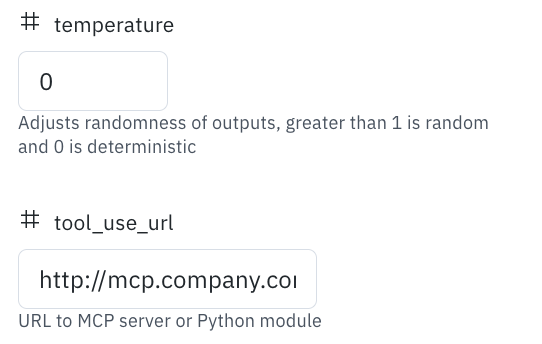

Our multimodal chat (MMC) editor lets you configure the model used for labeling, along with its parameters. Set the "tool_use_url" parameter to the URL of the MCP server you want to use.
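Because Labelbox calls your server from outside your network, a "tool_use_url" that points at `localhost` or a private IP address is unlikely to be reachable. The following is a hypothetical, heuristic pre-flight check using only the standard library. It is not part of Labelbox, `looks_publicly_reachable` is an illustrative name, and it cannot confirm that a DNS name actually resolves to a public host.

```python
import ipaddress
from urllib.parse import urlparse


def looks_publicly_reachable(url: str) -> bool:
    """Heuristic: reject URLs that obviously point at local or private hosts."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        return False
    host = parsed.hostname  # already lowercased, brackets stripped for IPv6
    if host == "localhost":
        return False
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # A DNS name: we can't tell where it points without resolving it
        return True
    # Loopback, private, and link-local ranges are not globally routable
    return ip.is_global


print(looks_publicly_reachable("http://localhost:3131/mcp"))      # False
print(looks_publicly_reachable("https://tools.example.com/mcp"))  # True
```

A check like this catches the most common mistake, pasting the URL of a server that only runs on your laptop, before any labeling session starts.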

Labeling data for tool use

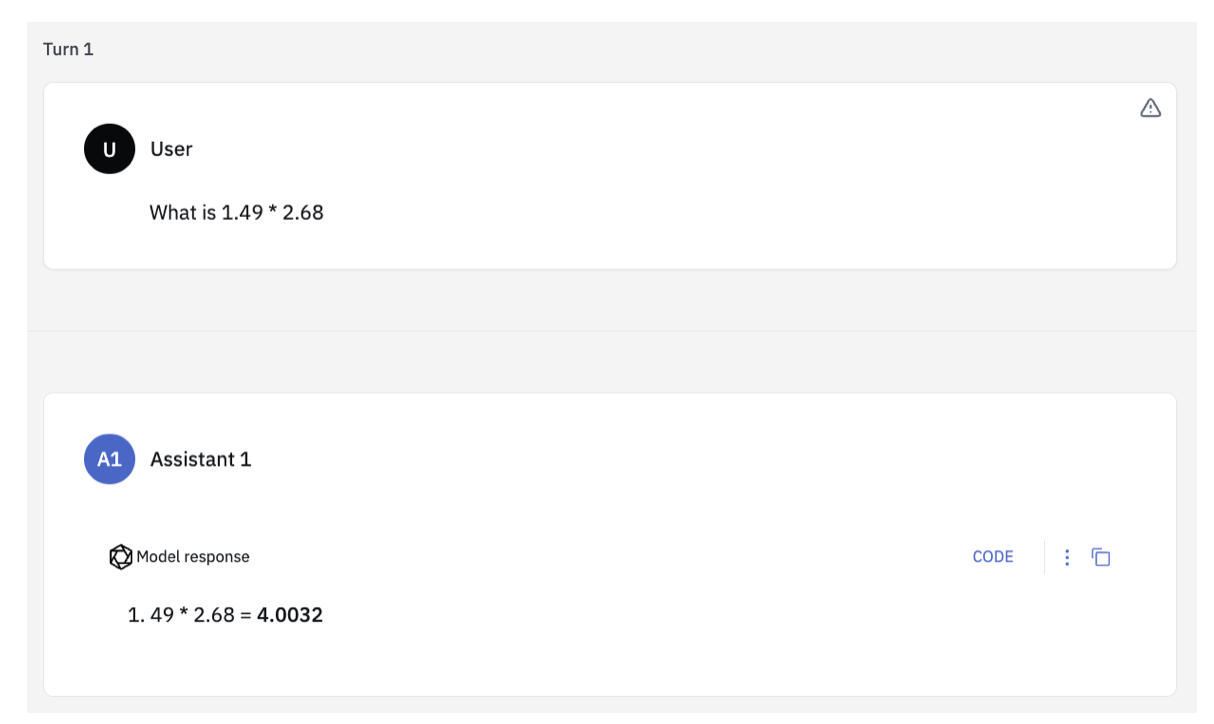

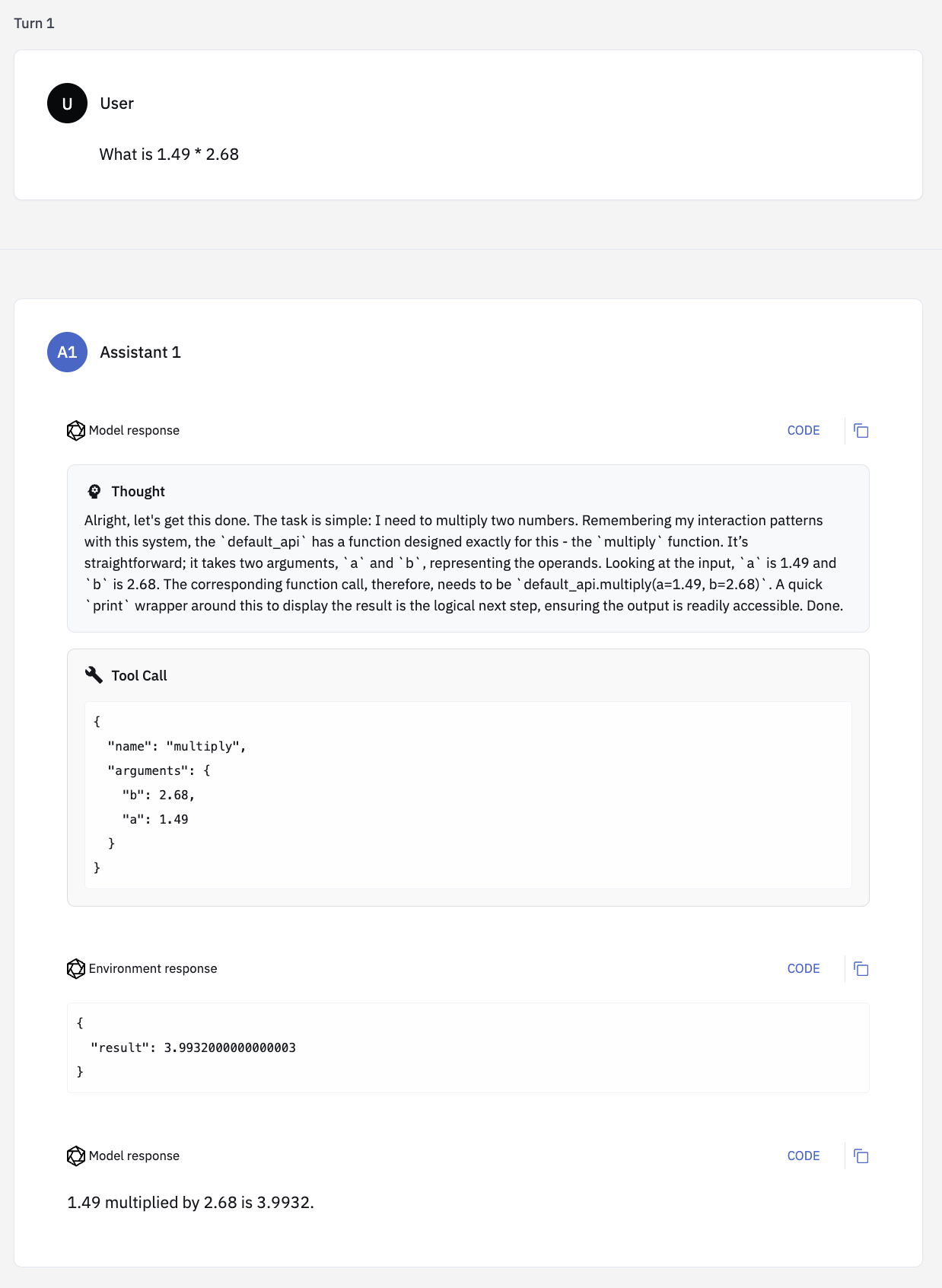

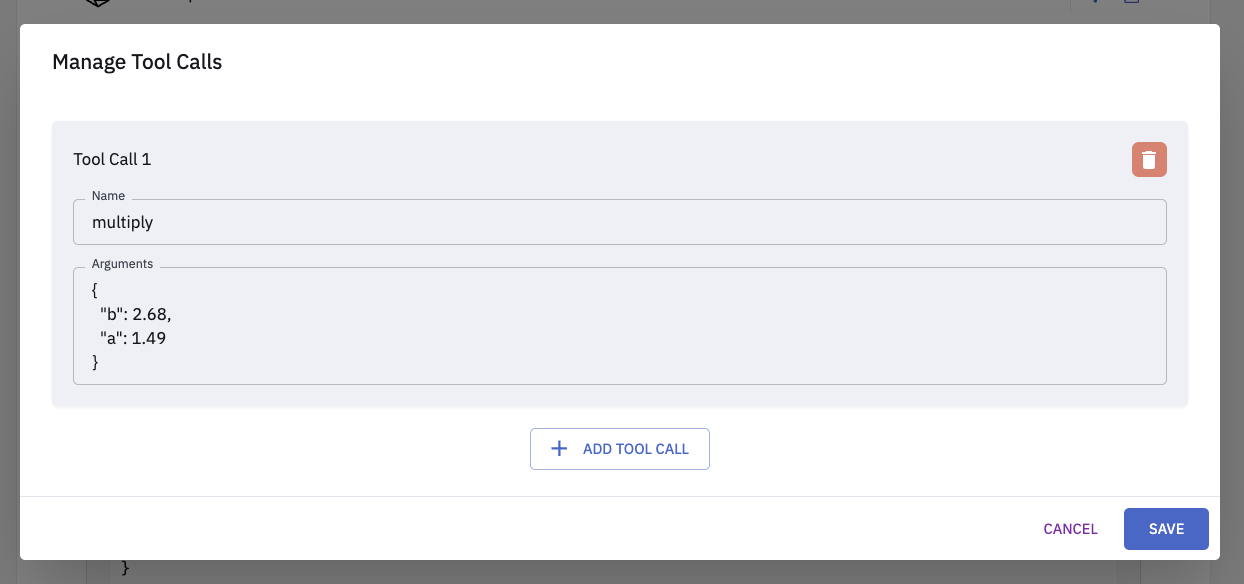

Let’s revisit our multiplication example:

Without tools: The MMC editor queries the LLM directly. Because LLMs are unreliable at arithmetic, the response may be incorrect.

With tools: Through the MCP integration, the LLM can invoke a tool (here, multiply) and return the tool's correct result.
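The difference between the two modes comes down to who produces the number. With tools, the model emits a structured call, and the client executes it and feeds the result back. Below is a minimal, hypothetical stand-in for that step: a local `TOOLS` dictionary replaces the MCP server, and `run_tool_call` is an illustrative name, not part of FastMCP or Labelbox.

```python
from typing import Callable, Dict

# Hypothetical local registry standing in for the tools an MCP server exposes
TOOLS: Dict[str, Callable[..., float]] = {
    "multiply": lambda a, b: a * b,
}


def run_tool_call(call: dict) -> dict:
    """Execute one model-proposed tool call and package the result."""
    name = call["name"]
    if name not in TOOLS:
        return {"name": name, "is_error": True, "content": f"unknown tool: {name}"}
    try:
        value = TOOLS[name](**call["arguments"])
    except TypeError as exc:  # wrong or missing argument names
        return {"name": name, "is_error": True, "content": str(exc)}
    return {"name": name, "is_error": False, "content": value}


# Instead of guessing 1234 * 5678, the model emits a structured call
proposed = {"name": "multiply", "arguments": {"a": 1234, "b": 5678}}
print(run_tool_call(proposed)["content"])  # 7006652
```

Returning errors as data instead of raising them mirrors how failed calls show up in a trajectory: the model sees the error and can retry, and an annotator can see exactly which call went wrong.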

Editing trajectories

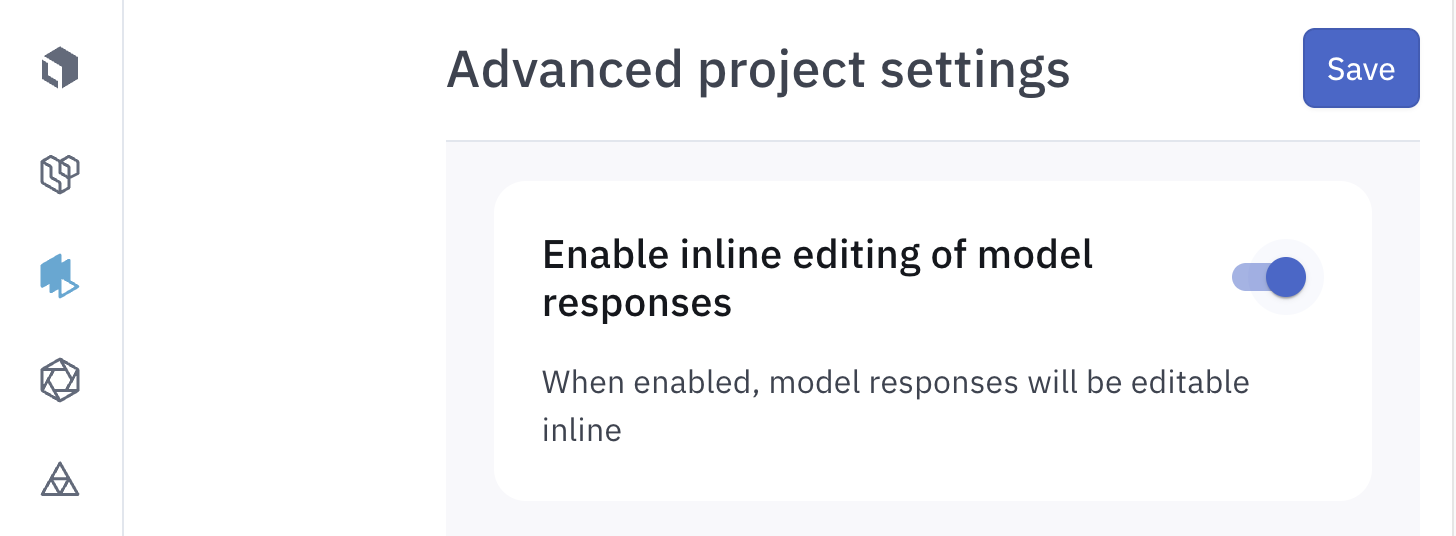

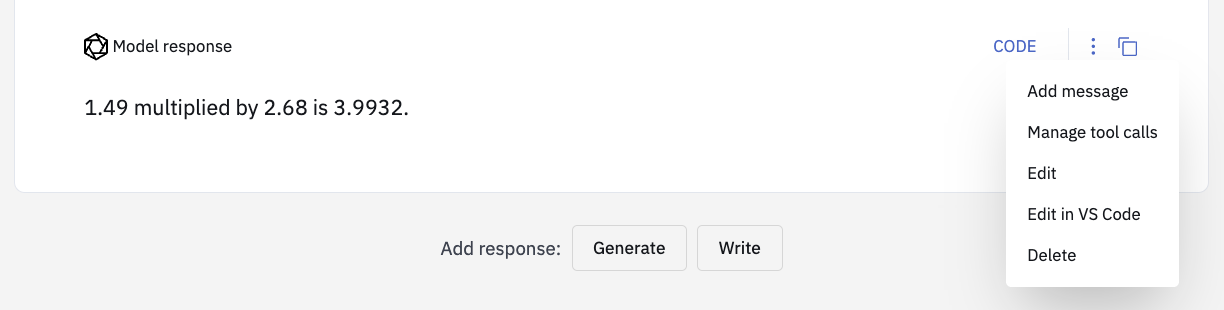

The multimodal chat editor also lets you correct model mistakes and create high-quality, ground-truth trajectories, which are ideal for training and fine-tuning. You can edit both tool calls and model responses to reflect the desired agent behavior. This feature can be enabled in the project's Advanced Settings tab.

Enabling this toggle adds new menu options to each message.

When an incorrect tool call occurs, you can update the tool used and adjust its input arguments to ensure the correct behavior is captured.
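To make the correction workflow concrete, here is a hypothetical trajectory representation and a helper that swaps in the corrected tool and arguments. The `Message` fields and `correct_tool_call` are illustrative assumptions; Labelbox's actual data model for MMC trajectories may differ.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    role: str                        # "user", "assistant", or "tool"
    content: str = ""
    tool_name: Optional[str] = None  # set on assistant messages that call a tool
    tool_args: dict = field(default_factory=dict)


def correct_tool_call(trajectory: List[Message], index: int,
                      tool_name: str, tool_args: dict) -> List[Message]:
    """Return a copy of the trajectory with one tool call replaced."""
    fixed = copy.deepcopy(trajectory)  # keep the original attempt intact
    msg = fixed[index]
    if msg.tool_name is None:
        raise ValueError(f"message {index} is not a tool call")
    msg.tool_name, msg.tool_args = tool_name, dict(tool_args)
    return fixed


original = [
    Message("user", "What is 12.5 times 8?"),
    Message("assistant", tool_name="add", tool_args={"a": 12.5, "b": 8}),
]
fixed = correct_tool_call(original, 1, "multiply", {"a": 12.5, "b": 8})
print(original[1].tool_name, "->", fixed[1].tool_name)  # add -> multiply
```

Working on a copy keeps the agent's original attempt alongside the corrected one. In a full trajectory, the tool result and final response that follow the corrected call would also need updating, which is why the editor lets you edit model responses as well.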

Getting started

As agents evolve to handle more complex, tool-based workflows, we must evolve how we evaluate and supervise them in order to accurately capture human intent and preferences. Our MCP integration in the multimodal chat editor transforms Labelbox into a powerful platform for interactive, tool-aware agent assessment.

Whether you’re building agents for customer support, enterprise workflows, or AI research, this feature helps you move from static data labeling to interactive agent evaluation.

Curious to try this out or integrate it into your existing workflows? Reach out to us: we'd love your feedback and would be glad to help.
