Labelbox•August 5, 2025

Introducing Labelbox Evaluation Studio: Drive AGI advancements with real-time feedback on model performance

AI teams have historically relied on static benchmarks and fragmented, ad-hoc testing workflows. These methods often overlook the full range of capabilities that next-gen multimodal models must demonstrate, such as understanding context across modalities (audio, video, and images) and generating coherent responses that integrate information from multiple sources. To truly advance the latest models, you need continuous, real-time insights into how your system behaves across edge cases, how it compares to leading alternatives, and how each new version stacks up against your previous best.

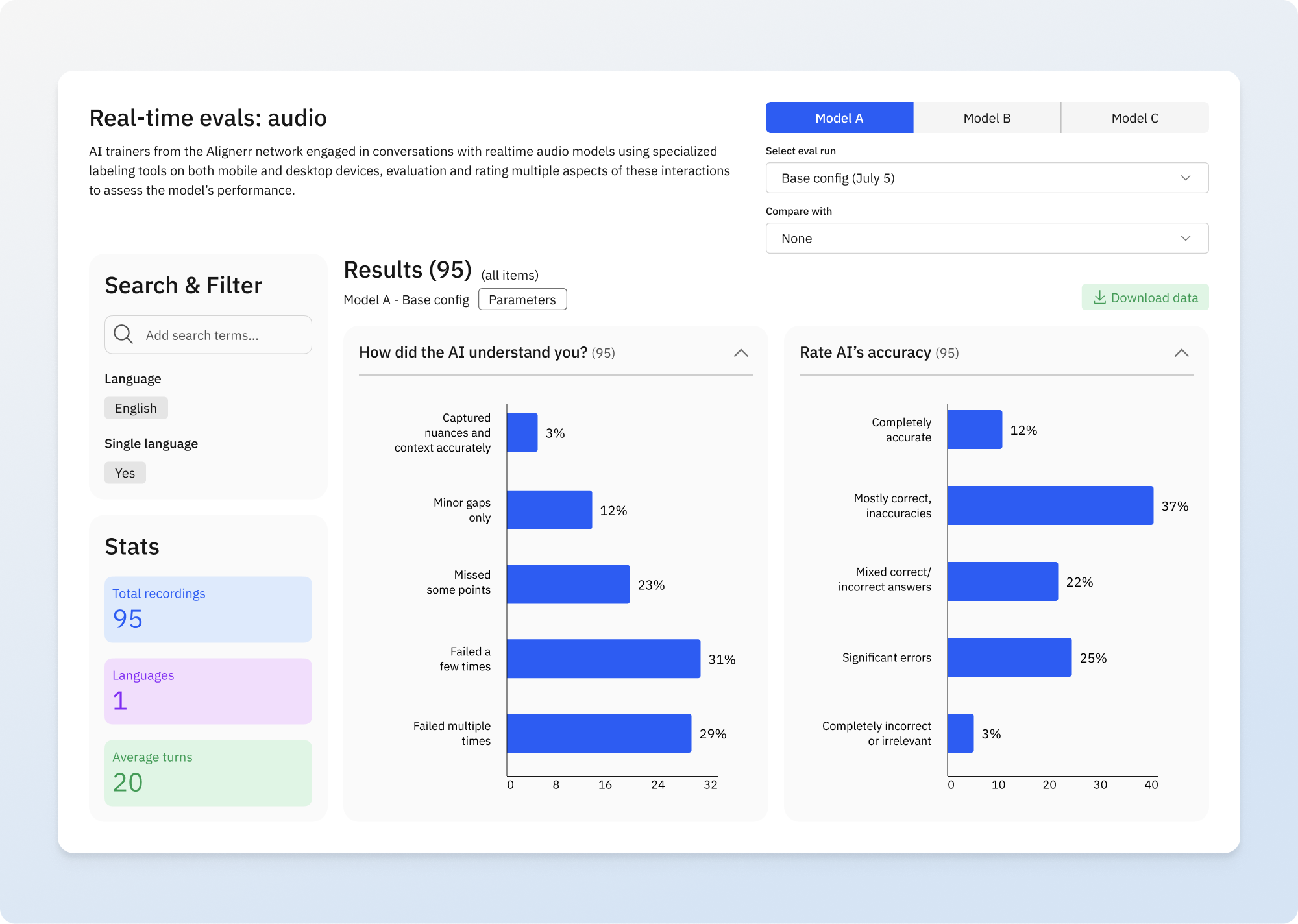

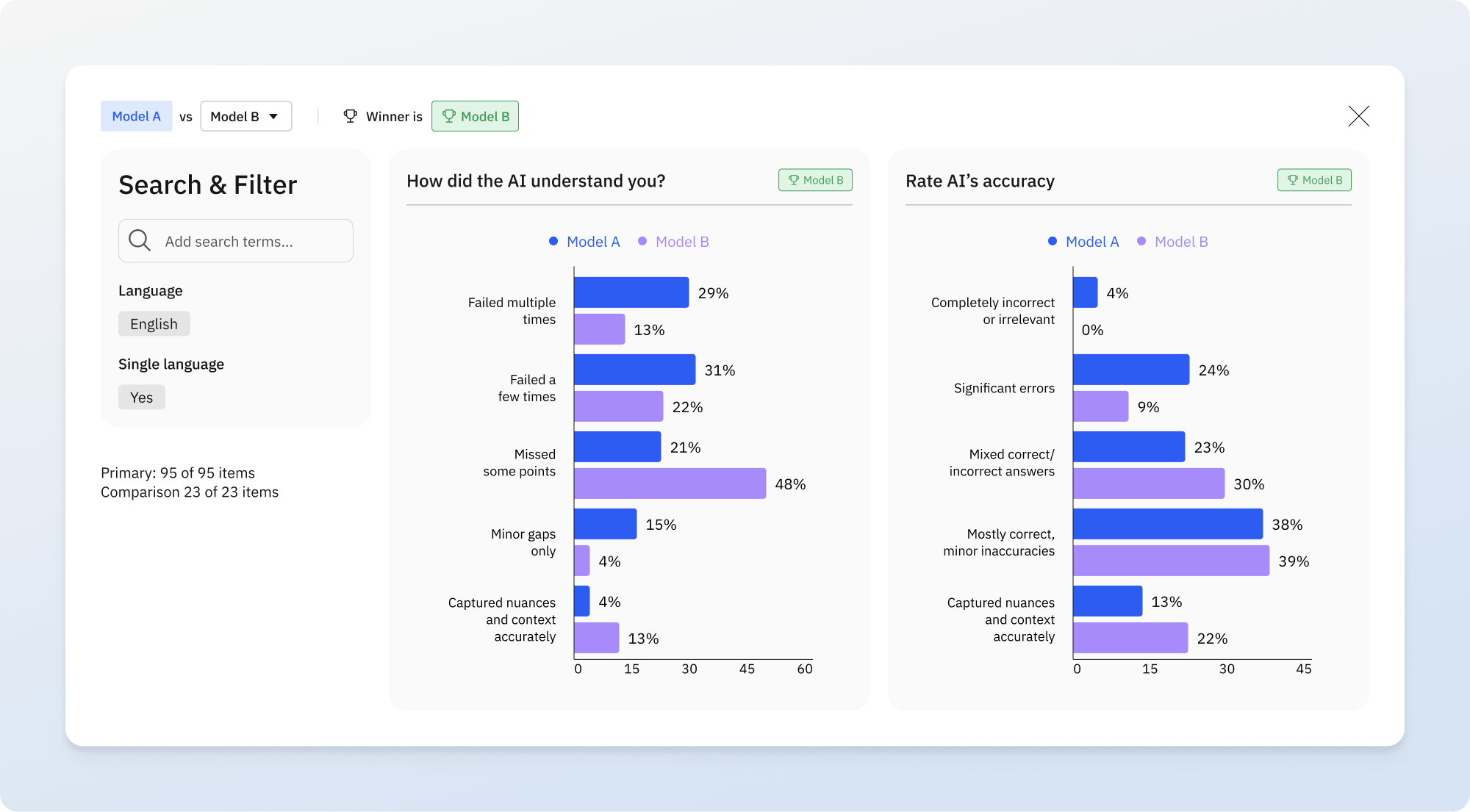

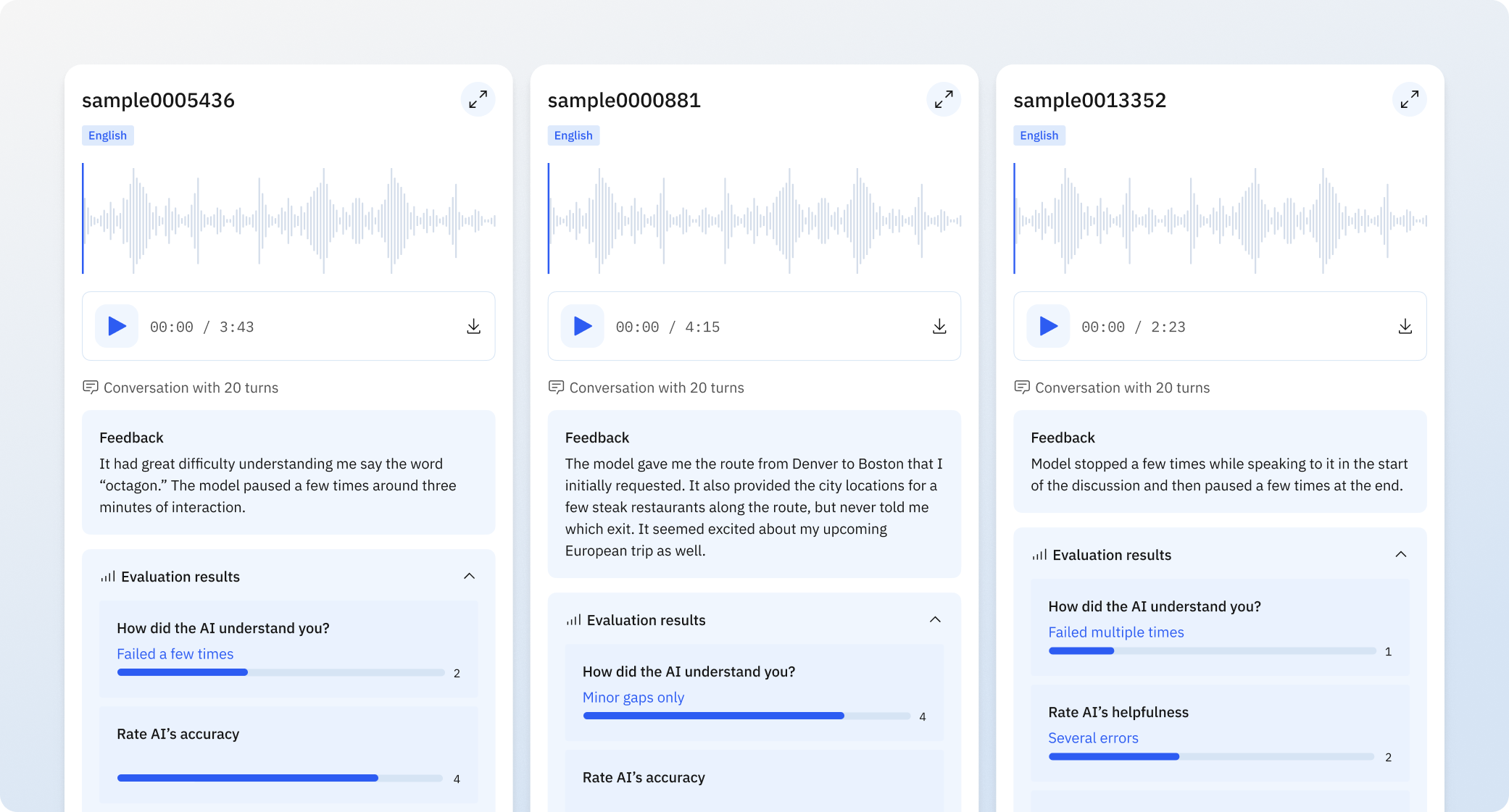

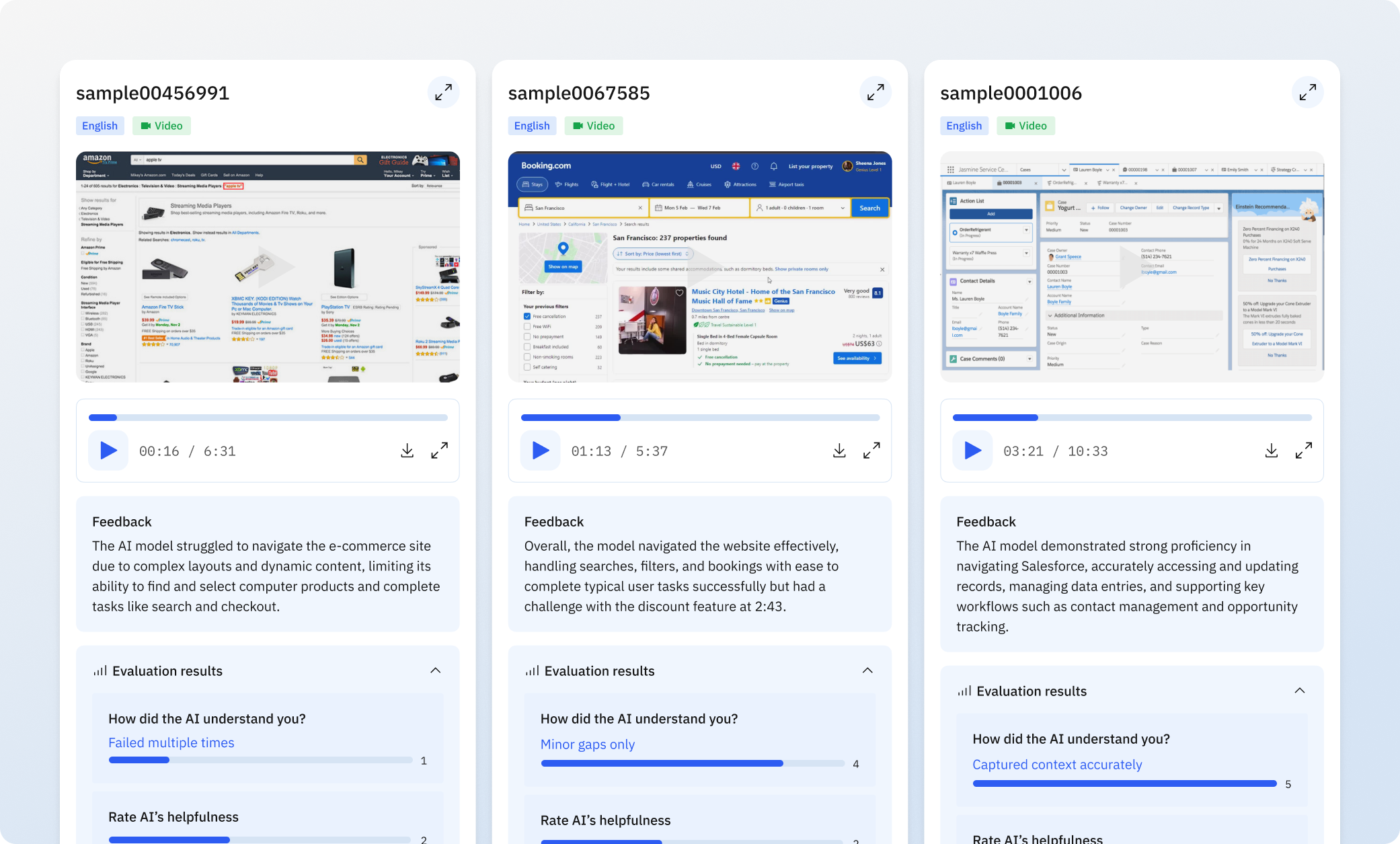

That's why we're excited to announce the Labelbox Evaluation Studio, a private, real-time evaluation platform built for AI labs and model development teams. With tailored comparative insights across diverse domains, it enables frontier AI teams to quickly uncover strengths, weaknesses, and areas for targeted improvement.

Where evaluation meets iteration

The Labelbox Evaluation Studio was born out of our close partnerships with leading AI labs. In daily conversations with researchers and engineers, two questions came up again and again: “How does this version of our model compare to other leading models?” and “How can expert AI trainers and evaluators quickly measure this and help us improve?” These teams wanted a reliable, real-time way to benchmark their models and share insights across their organization.

Through this collaboration, we realized that a dynamic, interactive tool was the solution researchers needed most. Instead of one-off evaluations, they wanted an always-available console where they could run tests, see comparative results, and immediately act on expert feedback to improve their models. Evaluation Studio was designed to meet this need and is optimized for the way frontier teams work.

Precision at speed: Derive insights in hours, not days or weeks

Labelbox Evaluation Studio is built for the pace at which frontier AI teams are expected to deliver results. Your team can have a custom evaluation environment up and running in as little as a day after we receive your evaluation data. This quick turnaround is possible thanks to our robust platform and seamless integrations, so you get the critical insights you need, fast.

Our expansive Alignerr network also lets us tap into diverse experts across numerous domains and 70+ countries. This global reach, combined with our technology, gives researchers expert-driven insights into model performance across specialized evaluation metrics that help pinpoint exactly where their model is weak relative to others.

A collaborative approach to model excellence

In our work with leading research teams, we’ve found that the most effective use of Labelbox Evaluation Studio comes through close collaboration. Aligning on research objectives, evaluation methodology, and model behaviors enables a faster diagnosis of failure modes and more meaningful iteration. Here's how that process typically unfolds:

- You define the objective: Your team sets the direction, whether it's measuring reasoning ability, robustness, or emergent behaviors, and provides context around what matters most for your models.

- We provide the software and methodology: Labelbox works closely with you to design evaluation protocols tailored to your goals, drawing on our expertise and the Alignerr network to implement high-quality, custom metrics and analysis workflows.

- You iterate with real-time feedback: The result is a private, live leaderboard that surfaces immediate, actionable insights. You’ll be able to spot regressions, QA outputs, correct mislabeled data or prompts, and identify where additional data or targeted fine-tuning is needed.

This co-designed approach ensures that evaluation is not an afterthought but an integral part of your model development cycle, aligned with your research priorities and optimized for rapid iteration.
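To make the leaderboard idea concrete, here is a minimal sketch of how pairwise expert judgments could be rolled up into a ranking and checked for regressions. It is an illustration only, not Evaluation Studio's API or scoring method: the model names, the sample judgments, and the helper functions `win_rates`, `leaderboard`, and `is_regression` are all hypothetical, and a simple win rate stands in for whatever custom metrics your evaluation actually uses.

```python
from collections import defaultdict

# Hypothetical pairwise judgments: (model_a, model_b, winner).
# Model names and outcomes are made up for illustration.
judgments = [
    ("ours-v2", "baseline", "ours-v2"),
    ("ours-v2", "ours-v1", "ours-v1"),
    ("ours-v1", "baseline", "ours-v1"),
    ("ours-v2", "baseline", "ours-v2"),
    ("ours-v1", "baseline", "ours-v1"),
    ("ours-v2", "ours-v1", "ours-v1"),
]


def win_rates(judgments):
    """Return each model's share of comparisons won."""
    wins = defaultdict(int)
    games = defaultdict(int)
    for model_a, model_b, winner in judgments:
        games[model_a] += 1
        games[model_b] += 1
        wins[winner] += 1
    return {model: wins[model] / games[model] for model in games}


def leaderboard(judgments):
    """Rank models from highest to lowest win rate."""
    rates = win_rates(judgments)
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


def is_regression(rates, new_version, old_version, tolerance=0.0):
    """Flag a new version whose win rate falls below the old one's."""
    return rates[new_version] < rates[old_version] - tolerance


if __name__ == "__main__":
    for rank, (model, rate) in enumerate(leaderboard(judgments), start=1):
        print(f"{rank}. {model}: {rate:.2f}")
    rates = win_rates(judgments)
    print("regression:", is_regression(rates, "ours-v2", "ours-v1"))
```

In this toy data the newer version loses both head-to-head comparisons against its predecessor, so the check flags it. The `tolerance` parameter is there so that small, noisy differences are not treated as regressions.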

Transform your model development workflow

The benefits of Labelbox Evaluation Studio extend directly to your bottom line and development cycles:

- Improved model performance: Video-based evaluations have driven a 15% increase in model accuracy within three months for leading AI labs, powered by targeted insights from continuous benchmarking.

- Increased iteration speed: A leading voice model team achieved a 35% increase in iteration speed, enabling faster testing and deployment of new versions.

- Faster and automated insight generation: Teams report 40% more actionable insights per week, accelerating data-driven decision-making.

Get started today

Labelbox Evaluation Studio is engineered to empower AI labs to rapidly deploy comprehensive, high-impact evaluations for their most pressing models. We provide a white-glove approach, helping you ensure your data pipeline is clean so you can see your model's performance in real time.

Labelbox is the AI data infrastructure powering the world’s leading AI labs. From multimodal reasoning to computer use and agentic tasks, we provide the tools and expertise necessary to deliver frontier-grade data and evaluate models with speed and precision. Our full-stack data factory combines advanced software automation, reinforcement learning workflows, and a global network of experts across fields like STEM, coding, media, and language.

Ready to unlock the next level in model evaluation and accelerate your model development? Contact our team to access the Labelbox Evaluation Studio.
