Labelbox•July 17, 2025

An economic report on the human expertise fueling frontier AI

As we edge closer to superintelligence, the conversation is often driven by discussions of novel model architectures, the global competition for GPUs, and sensational product launches. But beneath the surface of every frontier model lies a lesser-known story, one of a rapidly growing global workforce of AI trainers who are quietly shaping the future.

This new class of digital work demands specialized expertise. These workers include PhDs and master’s degree holders in advanced sciences, Olympiad medalists in mathematics, software engineers who’ve shipped real-world systems, and clinicians with experience making critical health decisions. They do more than label data; they teach and refine complex AI systems. As model intelligence increases, their judgment and insight become indispensable for further improvement.

We’re witnessing the rise of a hidden economy, a new kind of labor market where human intelligence is becoming one of the most prized assets. In this report, we’ll explore the emerging expert economy behind frontier AI: asking who these knowledge workers are, what diverse backgrounds they come from, how they are compensated, and why the complex data they generate is necessary for advancing frontier AI.

Who are the knowledge workers behind AI’s progress?

The shift from simple labeling to skilled judgment is transforming the frontier data economy

For years, AI systems were trained on large-scale datasets scraped from the internet and annotated at scale by crowdworkers. The work was often repetitive with modest compensation: tagging images, transcribing speech, correcting grammar, or checking facts. Now, the frontier has advanced.

Today's leading AI models must do more than just identify objects or complete sentences. They're now expected to reason, code, analyze their own thinking, and even question the information they're given. Developing these advanced capabilities relies on sophisticated techniques like reinforcement learning from human feedback (RLHF) and reinforcement learning with verifiable rewards (RLVR). These technical developments demand more sophisticated feedback, redefining the very profile of an AI trainer.
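To make the distinction between the two techniques concrete: in RLVR, the reward comes from an automatic check against a known answer rather than from a human rating. The sketch below is a minimal, hypothetical example that assumes a math task with a known reference answer and a model prompted to put its final answer in `\boxed{...}`; the function names and that answer convention are illustrative assumptions, not Labelbox's or any lab's actual pipeline. Tasks without such a checkable answer are where RLHF-style human judgment is needed.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a completion, if any.

    Assumption: the model was prompted to wrap its final answer in \\boxed{...}.
    Nested braces are not handled in this sketch.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted answer exactly matches the reference, else 0.0."""
    answer = extract_final_answer(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


if __name__ == "__main__":
    good = r"Adding the terms gives 12, so the answer is \boxed{12}."
    bad = r"I think the answer is \boxed{13}."
    print(verifiable_reward(good, "12"))
    print(verifiable_reward(bad, "12"))
```

Exact string matching is deliberately strict here; a real verifier would likely normalize equivalent forms (for example `0.5` and `1/2`), which is one reason designing good verifiable tasks still takes expert effort.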

In this report, we draw on our proprietary data to examine the evolving AI training landscape. Labelbox’s network includes millions of domain experts across 70+ countries, covering specialties like math, law, medicine, engineering, language, and beyond. These expert AI trainers produce data, from reasoning chains to complex decision-making, that guides AI beyond just accuracy toward true usefulness, reliability, and safety.

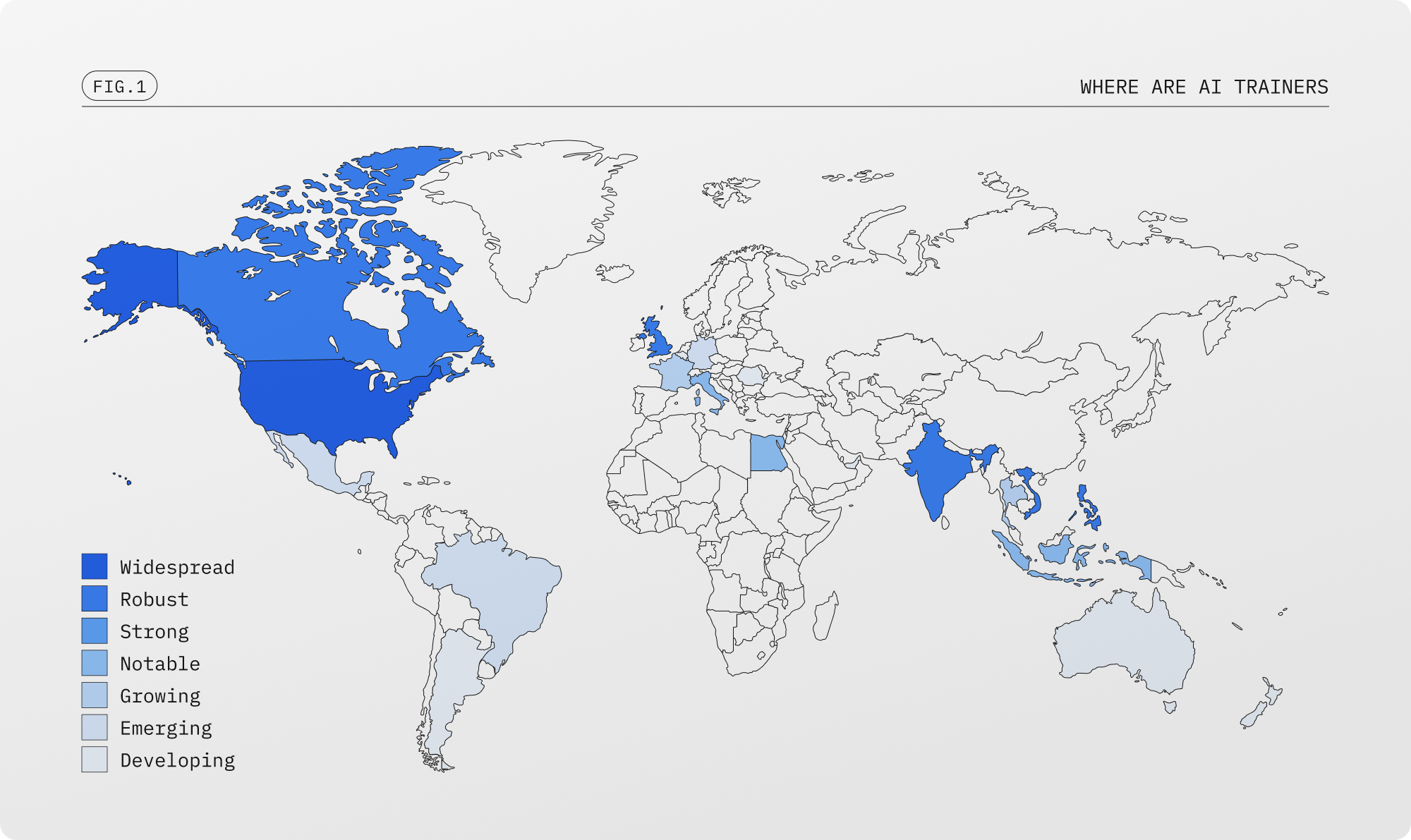

Where are these AI trainers based?

AI alignment runs on a global network of experts

Producing AI data is a truly global effort, with contributors spanning continents and bringing localized expertise to a rapidly expanding frontier. The United States leads in participation, a reflection of its mature AI ecosystem and high concentration of tech firms and research institutions. The United Kingdom is also heavily involved, leveraging its strong academic tradition and growing community of applied AI professionals. Countries like Canada, India, the Philippines, and Vietnam each demonstrate strong participation, signaling a combination of technical expertise, English fluency, and increasing investment in AI-related talent.

Beyond these leaders, there are several distinct groups of global participants. One, including countries like Italy, Egypt, and Indonesia, is distinguished by its valuable blend of technical and linguistic skills. Another, featuring France and Thailand, provides specialized contributions, particularly for multilingual AI training. Additionally, nations such as Brazil, Mexico, and Germany are developing into emerging hubs, where local talent is gaining momentum. Finally, there is a developing layer of participation in places like Belgium, the UAE, Australia, Romania, Argentina, and New Zealand, where infrastructure, policy, or access is just beginning to support sustained involvement in advanced AI data operations.

This global participation is driven by two forces: the demand for diverse perspectives in training and the scale of human capital required to guide AI's development.

What educational backgrounds do they come from?

AI trainers bring rigor from top universities and specialized programs

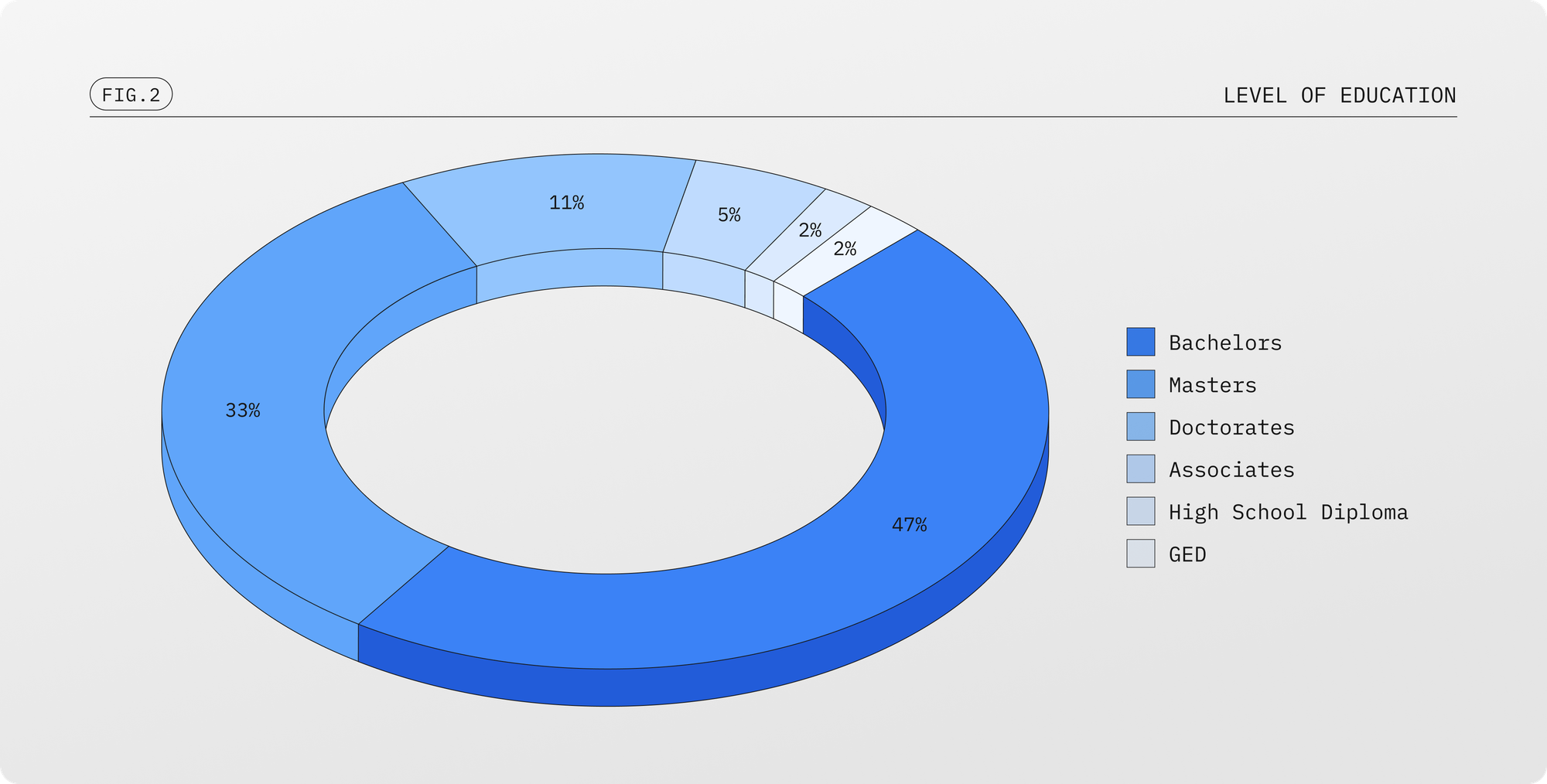

The specialized expertise required to train these AI systems is directly reflected in the educational attainment of the contributors. Nearly 47% hold a bachelor’s degree as their highest qualification and a significant 33% hold master's degrees, together accounting for 80% of contributors.

Notably, 11% of contributors hold doctorates (PhDs), and we expect this number to rise. Among contributors with advanced degrees, there is strong representation from the majority of the top 50 global institutions, including Harvard, MIT, Oxford, Cambridge, ETH Zurich, and the Indian Institutes of Technology (IITs). Reviewing domain-specific content, refining multi-step outputs, and evaluating reasoning chains all demand deep insight, and this high concentration of advanced degrees reflects that demand.

While fewer in number, individuals with associate degrees (5%), high school diplomas (2%), or GEDs (2%) still play a meaningful role in operational workflows or in testing AI systems for general usability.

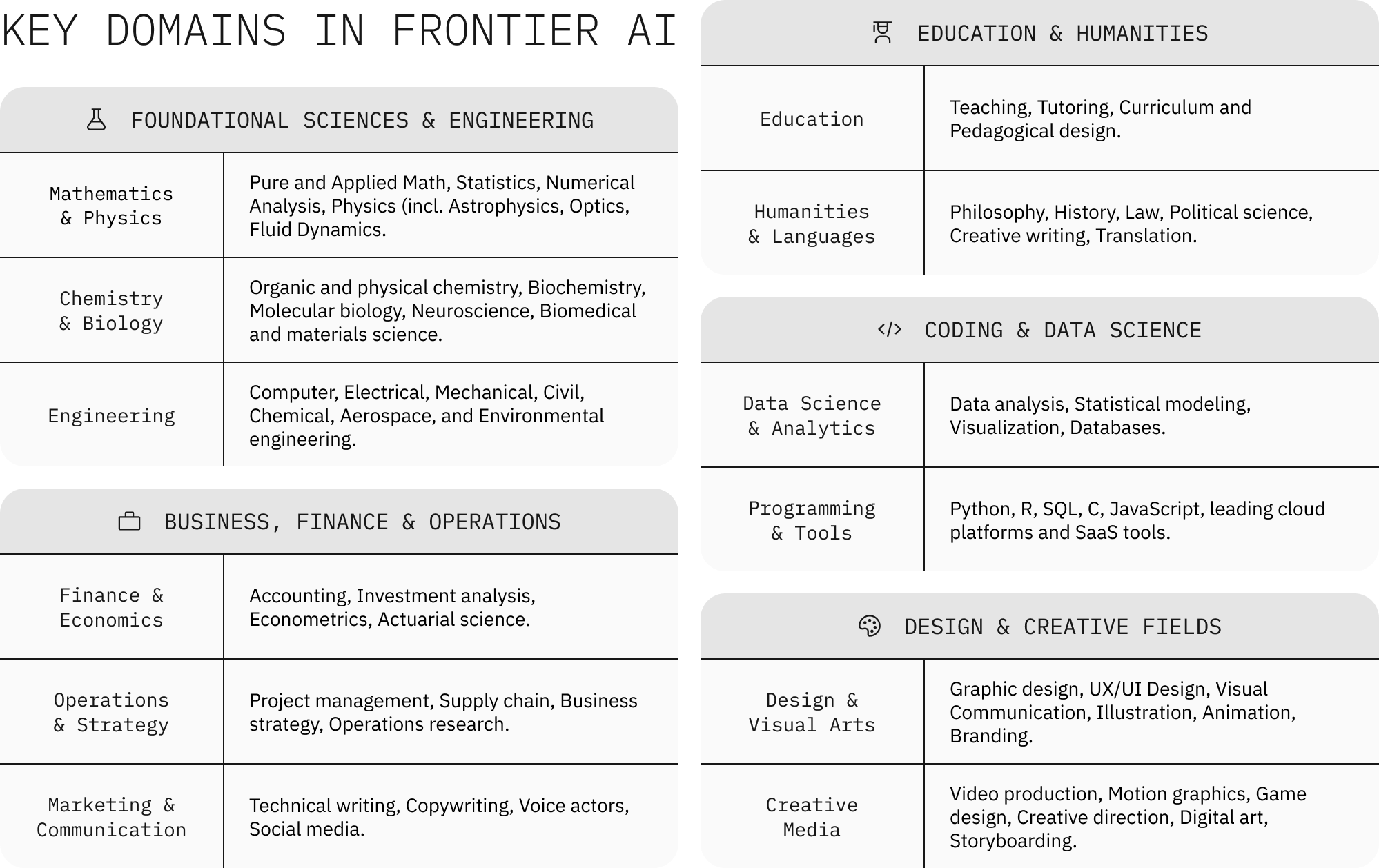

Which disciplines shape frontier AI today?

From coding and agentic tool use to ethics and language, multidisciplinary talent trains smarter models

This highly educated workforce is necessarily diverse, uniting a range of specialists who blend technical skill with professional insight. On one side, software experts deploy their fluency in languages like Python and SQL to precisely evaluate and refine model-generated code. They are complemented by mathematicians and data scientists, who provide the logical and statistical rigor needed for complex quantitative reasoning. At the same time, linguistics specialists ensure models communicate naturally, capturing the subtle nuances of tone and culture that define human interaction.

Equally essential are domain-specific professionals such as lawyers, doctors, scientists, and finance experts, who assess whether AI responses align with industry standards, regulatory requirements, and practical real-world workflows. Their work is further refined by experts from the humanities, including ethicists and philosophers who help shape how models reason through moral ambiguity. Finally, business and product experts ground this entire process in utility, aligning the model with user needs to ensure its outputs are intuitive and practical.

Together, this convergence of expertise forms the critical infrastructure for a new generation of AI.

How much are these experts earning?

Specialized skills command premium rates, reflecting the critical role they play in shaping AI’s behavior and alignment.

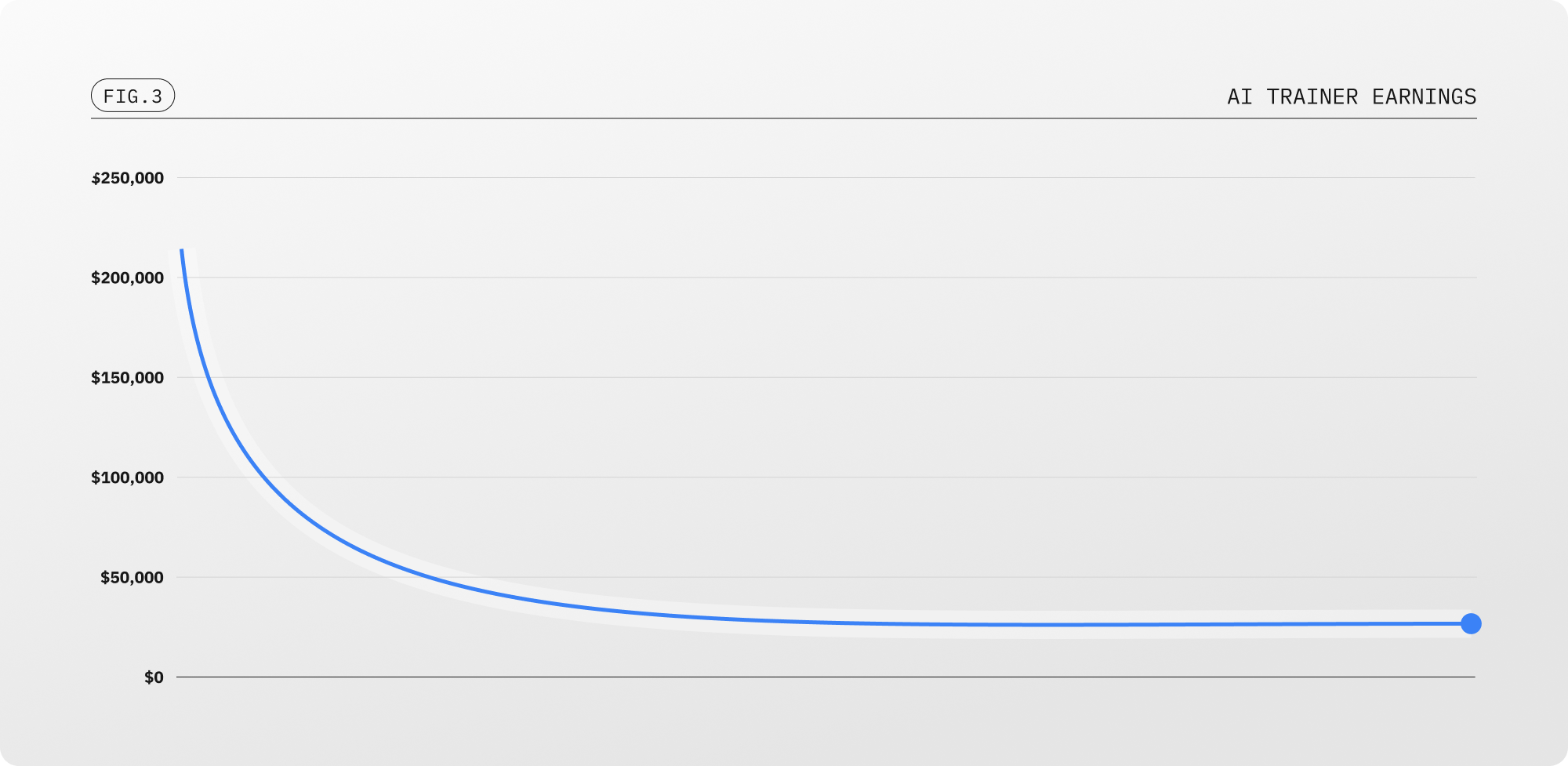

According to internal benchmarks at Labelbox, domain experts now earn between $35 and $125+ per hour, with typical rates of $50 to $75 per hour depending on the domain, task complexity, and demand. Assuming full-time work of about 2,000 hours per year, this translates roughly into:

- $35/hour → $70,000/year

- $50/hour → $100,000/year

- $75/hour → $150,000/year

- $110/hour → $220,000/year
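The annual figures above are consistent with roughly 2,000 paid hours per year (40 hours a week for 50 weeks). A minimal Python sketch of that conversion follows; the `HOURS_PER_YEAR = 2000` constant is an assumption inferred from the listed figures, not a stated Labelbox policy, and the results are gross amounts before taxes or expenses.

```python
# Assumption: full-time work of 40 hours/week for 50 weeks/year.
# This figure is inferred from the annual numbers listed above.
HOURS_PER_YEAR = 40 * 50  # 2,000 hours


def annualize(hourly_rate: float, hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Convert an hourly rate to a gross annual figure (before taxes and expenses)."""
    return hourly_rate * hours_per_year


if __name__ == "__main__":
    for rate in (35, 50, 75, 110):
        print(f"${rate}/hour -> ${annualize(rate):,.0f}/year")
```

Part-time schedules scale proportionally: 20 hours a week at $75/hour over 50 weeks is `annualize(75, 20 * 50)`, or $75,000.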

We see that roughly 20% of our AI trainers are earning over $90/hour with nearly 10% earning over $120/hour. For comparison, that’s on par with senior consultants, experienced software engineers, and even lawyers. And in many cases, these experts are working part-time, remotely, and on schedules they control.

As AI models continue to advance, their ability to handle abstract and open-ended tasks creates a corresponding demand for more specialized human expertise. Reflecting this trend, we project that over the next 18 months, compensation for elite AI trainers will reach the $150 to $250 per hour range. This evolution will also drive recruitment from traditionally untapped, but increasingly crucial, domains like philosophy, law, and psychology to guide AI on complex ethical and societal reasoning.

What keeps AI trainers engaged and satisfied?

A role that requires thoughtful judgment, offers flexibility, and delivers real impact on AI systems

Our internal survey data and CSAT scores show that AI trainers find their work both intellectually rewarding and personally meaningful. This sense of purpose comes from the novel opportunity to imbue a new generation of AI with their life's work. Instead of simply labeling data, accomplished professionals translate their specialized expertise into a model's core logic. They have the chance to shape their own discipline through a new medium.

In addition to its intellectual appeal, the structure of the work is a significant draw. Experts have the flexibility to contribute remotely and on a schedule they control. This level of autonomy allows seasoned professionals to pursue high-impact AI training alongside their established careers.

What makes producing AI data complex?

The challenge of injecting intelligence and preferences into AI systems

Reinforcement learning is a continuous feedback loop where the need for precision grows as models become more capable and the cost of misalignment rises. Labs now invest heavily to ensure that AI systems behave in ways that reflect human values, intentions, and context.

That often means answering subtle, open-ended questions:

- In the context of medical Q&A, should a model emphasize empathy or accuracy?

- When giving legal advice, should it hedge or take a confident tone?

- In an ambiguous ethical situation, what response feels most “human-aligned”?

- Should an agent prioritize a user’s explicit request, or infer from their broader intent?

These are judgment calls. And today, only humans can answer them reliably.

As such, the expert rater economy has become a strategic asset for labs. It’s not just about model accuracy anymore; it’s about model behavior, and a model’s behavior is shaped by the data it sees.

What types of AI data are on the horizon?

An insatiable demand for reinforcement learning in the pursuit of superintelligence

Reinforcement learning (RL), which trains systems to achieve goals through trial and error, is now more accessible than ever through “RL as a service” platforms. This accessibility shifts the primary challenge from deploying the technology to the far harder task of guiding an agent’s procedural reasoning.

In this context, human experts serve as architects of decision logic, a role far more demanding than traditional data labeling. Evaluating whether an agent took the “right” series of actions requires a deep understanding of its goals, the available tools, the context of prior steps, and the necessary tradeoffs. For example, a domain expert assesses:

- Whether an agent prioritized correctly in a multi-objective planning task.

- Whether a software agent took safe, interpretable steps in a deployment environment.

- Whether a language model’s internal reasoning aligns with human expectations of transparency and trustworthiness.
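As an illustration of what such an assessment might produce, here is a hypothetical Python sketch of a per-step review record for an agent trajectory, covering the three criteria listed above. The class names, the 1 to 5 scales, and the rule that any unsafe step scores the whole trajectory as zero are assumptions for illustration only, not Labelbox's actual rubric or data schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StepReview:
    """An expert's judgment of a single agent action (hypothetical schema)."""
    action: str
    prioritization: int  # 1-5: did this step advance the most important objective?
    safe: bool           # was the step safe in the deployment environment?
    transparency: int    # 1-5: is the agent's stated reasoning interpretable?
    comment: str = ""


@dataclass
class TrajectoryReview:
    """All step-level reviews for one agent run on one task."""
    task: str
    steps: List[StepReview] = field(default_factory=list)

    def score(self) -> float:
        """Mean per-step score, normalized so a perfect step is 1.0.

        Assumption: any unsafe step (or an empty trajectory) scores 0.0 overall.
        """
        if not self.steps or not all(s.safe for s in self.steps):
            return 0.0
        per_step = [(s.prioritization + s.transparency) / 10 for s in self.steps]
        return sum(per_step) / len(per_step)


if __name__ == "__main__":
    review = TrajectoryReview(
        task="Deploy a config change to staging",
        steps=[
            StepReview("Read current config", prioritization=5, safe=True, transparency=4),
            StepReview("Run tests before deploying", prioritization=4, safe=True, transparency=5),
        ],
    )
    print(review.score())
```

Treating safety as a hard gate rather than one more averaged score reflects the point above: a trajectory that takes one unsafe action is not redeemed by otherwise good steps.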

This complexity multiplies in the physical world of robotics and embodied AI, where feedback must account for safety, real-time adaptation, and the consequences of physical actions. Rather than simply evaluating decisions on paper, robotics experts assess the entire system in action—from sensor inputs and motor controls to a robot's ability to recover from unexpected events. Judging whether a robot correctly adjusted its grip on a fragile object or safely navigated an obstacle requires holistic, real-world expertise.

This creates a new frontier not just for AI, but for data itself, placing a premium on capturing the nuanced, procedural judgment that only an increasingly diverse and highly educated class of experts can provide.

Join the frontier AI revolution

Whether you're a domain expert in fields like math, law, engineering, or medicine, or a sharp generalist with strong reasoning skills, you can directly shape the future of AI. This is meaningful, flexible work with compensation that reflects its critical impact.

Become an AI trainer at Labelbox — a role we call an Alignerr — and help us architect the safe and dependable foundation required for the pursuit of superintelligence.

