Labelbox • December 12, 2018

How to Scale Training Data

A Guide to Outsourcing Without Compromising Data Quality

In order for data science teams to outsource annotation to a managed workforce provider — also known as a Business Process Outsourcer (BPO) — they must first have the tools and infrastructure to store and manage their training data. Data management tools and infrastructure should support R&D product management teams, outsourced labeling teams, and internal labeling and review teams working together in a single centralized place with fully transparent oversight.

Scaling with Subject Matter Expertise

There is a direct relationship between the volume of your training data and the size of your annotation team. The alternative to scaling your annotation workforce through outsourcing is hiring an internal team of labelers. While this is an expensive option, it is sometimes the only option. For example, scaling sensitive training data, such as medical data with HIPAA protection, might require a solely internal labeling workforce. Continuing with this example, medical data, such as CT scans, would need to be labeled by radiologists who have the necessary medical expertise to properly interpret the data.

The concern with outsourcing labeling work that requires subject matter expertise is that a BPO will not be able to provide specialized labelers. While there is good reason to be skeptical about outsourcing complex or niche datasets, BPOs cover a surprisingly wide spectrum of subject matter expertise. With a little research, you might find one that offers a specialized annotation service capable of labeling your dataset at a fraction of what it would cost to hire an internal team.

Grant Osborne, Chief Technology Officer at Gamurs, a comprehensive esports community platform powered by AI, describes the decision-making process behind using Labelbox’s outsourcing feature to scale annotations within the competitive gaming industry. Gamurs is developing an AI coach for professional video game players. The AI coach will help improve gamer performance by learning from similar examples in which players are underperforming and suggesting ways to enhance the gamer’s performance.

Grant originally considered crowdsourcing the labeling to gamers from Gamurs’s large social media following, who would label their favorite games. He looked into a number of popular crowdsourcing tools but quickly rejected this option because those tools generate their revenue from annotation.

“These tools charge for storage based on the number of bounding boxes. And since we will have millions of labels, this pricing structure is impractical.”

He then considered building a cheap in-house tool and hiring an internal team of labelers, until he spoke with Brian Rieger, Co-founder and Chief Operating Officer at Labelbox. Gamurs needed a platform for uploading and regulating images of multiple games with object detection. In contrast to other commercial labeling tools, Labelbox’s pricing structure is based on a three-tier system: Free, Business, and Enterprise. The subscription tiers are categorized by number of ML projects and dataset size. These tiers vary in price and access to certain platform features.

“My favorite part about Labelbox is the ease of the API. Having a developer focused API makes it effortless to productionize models.”

“We needed a machine learning pipeline solution and Labelbox was it!” — Grant Osborne, CTO at GAMURS

Unsurprisingly, Grant was initially dubious about outsourcing specialized gaming actions on Dota 2 or League of Legends to a BPO. “We wanted to have an internal labeling team because the computer actions are complicated. How are we going to have an external company used to labeling simple objects, like stop signs and trees, label our games? However, Labelbox’s BPO partners told us to just send over a manual and they’d handle getting a dedicated annotation team up to speed.”

“Labelbox recommended two BPOs that would best fit our needs and said there were more if we were interested. The BPOs estimated that it would take ~3–4 weeks to get everyone completely trained. While this estimation was a bit optimistic for how complicated the material is, they were able to finish the training cycle in ~4–5 weeks.” Despite the drastically different cost quotes from the two BPOs (one at 1.5–2 cents per bounding box and the other at 10–12 cents per bounding box), Gamurs still decided to use a mixture of both, with a 20-person labeling team from the first and a 10-person labeling team from the second.
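To put the two quotes in perspective, here is a rough Python sketch of the arithmetic. The per-box rates come from the quotes above. The volume of one million bounding boxes is a hypothetical figure chosen only for illustration (Grant mentions "millions of labels" but gives no exact number), and the function name is our own.

```python
def cost_range_dollars(num_boxes, low_cents, high_cents):
    """Return the (low, high) labeling cost in dollars for a per-box price range given in cents."""
    return (num_boxes * low_cents / 100, num_boxes * high_cents / 100)


# Hypothetical volume for illustration only, not a figure from Gamurs.
NUM_BOXES = 1_000_000

first_bpo = cost_range_dollars(NUM_BOXES, 1.5, 2)   # quoted at 1.5-2 cents per box
second_bpo = cost_range_dollars(NUM_BOXES, 10, 12)  # quoted at 10-12 cents per box

print(f"First BPO:  ${first_bpo[0]:,.0f} to ${first_bpo[1]:,.0f}")
print(f"Second BPO: ${second_bpo[0]:,.0f} to ${second_bpo[1]:,.0f}")
```

Whatever the actual volume, the second quote works out to between five and eight times the first on a per-box basis.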

“We will probably do a combination of BPOs based on their strengths per game. We will get them to do consensus and if one BPO is better at quality assurance but slower at labeling, we will use them to cross review the other team’s work.”
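One common way to put a number on consensus between two labeling teams is the intersection over union (IoU) of the bounding boxes they draw for the same object. The sketch below is a minimal illustration, not a description of how Labelbox or Gamurs compute consensus. The `(x_min, y_min, x_max, y_max)` box format, the 0.5 agreement threshold, and the function names are all assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so boxes that do not overlap contribute no intersection.
    intersection = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


def boxes_agree(box_a, box_b, threshold=0.5):
    """Treat two labels as being in consensus when their IoU meets the (assumed) threshold."""
    return iou(box_a, box_b) >= threshold


# Hypothetical labels for the same object from two different teams.
team_one_box = (10, 10, 50, 50)
team_two_box = (12, 12, 52, 52)
far_box = (100, 100, 140, 140)

print(boxes_agree(team_one_box, team_two_box))
print(boxes_agree(team_one_box, far_box))
```

Pairs that fall below the threshold are natural candidates to send to the stronger quality assurance team for cross review.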

Scaling with Data Quality

The opposite misconception is believing that all human labelers are equal when it comes to annotating an extremely simple dataset. This perspective downplays the importance of data quality in labeling. Read the What’s a Pumpkin? section of AI is More Accessible than you Know to learn how training a deep convolutional object detection model to identify something as simple as a pumpkin is actually much more complex than you might guess. Even with simple labeling tasks, ensuring data quality requires that you be able to oversee the consistency and accuracy of labels across annotators and over time.

Labeling at scale without compromising data quality requires transparency throughout your labeling pipeline. Teams of data scientists who are outsourcing on locally run in-house tools often send data to several different annotation services where labeling happens locally, sometimes in a variety of countries, and the data scientists must rely on these labelers to send the file via email or to do uploading acrobatics via Dropbox.

Consequently, the data becomes fragmented, disorganized, and difficult to manage, leaving it vulnerable to problems in data security, data quality, and data management. In order to monitor the labeling accuracy and consistency of outsourcing services in real time, companies like SomaDetect switch from managing their annotation workforce on a homegrown tool to managing it through Labelbox. Labelbox is the best in the world at integrating your in-house labeling and review teams with your outsourcing team in one centralized place.

Not all Labelers are Equal

The factors that differentiate outsourcing providers go far beyond the subject matter expertise they offer. Labelbox has hand-selected the top BPO firms based on the following criteria:

- Pricing transparency

- Quality customer service

- Diversity in company size, regions of service, range of skills, and styles of engagement

We spoke with Michael Wang, Computer Vision Engineer at Companion Labs, about his experience outsourcing on Labelbox with one of our recommended BPO partners. He explained why outsourcing with a dedicated team of labelers, as opposed to crowdsourcing random human labelers, produces higher-quality training data.

“Connecting directly with a dedicated team of outsourced labelers helps you and the clients figure out how to label the project and labelers get better over time. With random labelers, you have to start the learning curve from scratch every time. Dedicated teams of labelers come to understand your project and when you explain something it gets communicated across the entire team.” — Michael Wang

Before choosing Labelbox, Companion Labs compared it to a leading competitor by trying out both labeling service APIs and measuring the quality, time, and effort required to label their project. Michael said that Labelbox has a higher-quality outsourcing pool than the well-known competitor, which crowdsources.

When asked how he chose which of Labelbox’s partner BPOs to work with, he explained that Labelbox provided two recommendations, which he evaluated on both quality and cost. “Both providers were pretty amazing in terms of quality so choosing came down to the cost.”

Outsource on Labelbox

Managed workforce services are often an instrumental part of making an AI project successful. Therefore, we at Labelbox want to enable managed workforce providers to render their services in as frictionless a way as possible. With Labelbox, teams of data scientists, annotators, and product managers can transparently manage everything from small projects and experiments to very large projects, all on a single platform. Our focus is to make our customers as successful as they can be at their AI projects. Our customers are businesses of all sizes building and operating AI.

We have worked with many managed workforce providers, and it is clear to us that the best providers stand out from the rest in the service they provide and the customer-centric nature of their business. We have hand-selected BPO partners so that our customers can have high-quality labeling services rendered directly inside of their Labelbox projects.

On Labelbox your internal and outsourced labelers can seamlessly work together on a labeling project. It’s so cohesive that there’s literally no seam between the two!

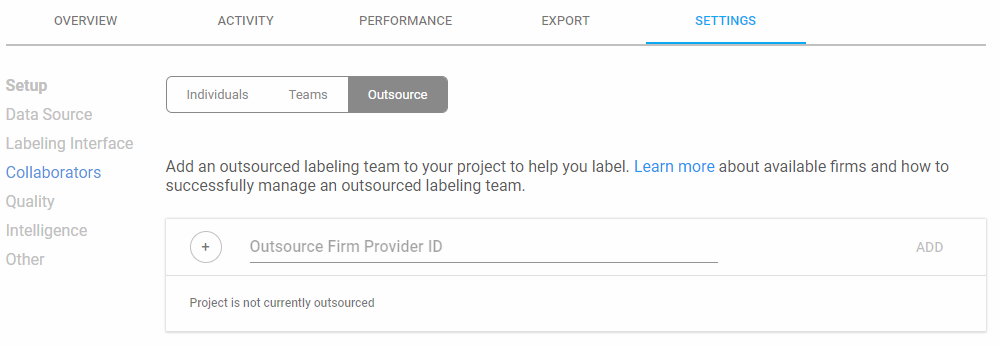

An Effortless Two-step Process

- Contact one of our Workforce Partners listed here.

- Share your Project with them by adding their “Firm Provider ID” (provided by the Workforce Partner).

That’s really it! Your Project will show up as a shared Project in the Workforce Partner’s Labelbox account, where they will be able to add and manage their own labelers in your project. They will have access to annotate, review annotations, and manage their labelers. The best part is that your internal team will be able to monitor their performance with complete transparency. For more information, check out our docs.

Get Started with Labelbox

Visit www.labelbox.com to explore Labelbox for free or speak to one of our team members about an enterprise solution for your business.
