Labelbox•September 12, 2024

Inside the data factory: How Labelbox produces the highest quality data at scale

In pursuit of AGI and beyond, data quality is not just a checkbox for frontier AI labs—it's the cornerstone of innovation and a critical competitive advantage. The quality of training data determines the success or failure of these cutting-edge models.

We passionately believe that during this decade, data quality will be the most important factor in advancing model capabilities. Very little is publicly discussed when it comes to measuring data quality and producing high-quality human data efficiently, so we wanted to shed light on our approach and share insights into the strategies and practices we use to achieve these standards.

In this post, we look deep inside the Labelbox AI data factory, revealing important tools, techniques and processes that are the bedrock for producing the highest-grade data at scale. We cover just some of the best practices we follow for measuring and managing data quality. We hope this post sparks some valuable insights, while keeping in mind that we utilize many more advanced strategies to operate the entirety of our modern AI data factory.

Measuring quality

Precision and accuracy are two foundational pillars for measuring data quality. Think of these as the dynamic duo for data quality measurement. Let's break them down in a way that's easy to understand:

Precision: Hitting a target consistently

Imagine you're playing darts. Precision is like throwing multiple darts and having them all cluster tightly together on the board. It doesn't matter if they're in the bullseye or not – what matters is that they're close to each other. In the world of AI data, precision means getting consistent results when collecting human opinions or preferences. It's about reliability and repeatability.

For example, if multiple people rate the same AI-generated text, high precision would mean their ratings are very similar to each other. This consistency is crucial because it shows that your data collection process is reliable and produces strong, clear signals.

Accuracy: Hitting the right target

Now, let's go back to our dart game. Accuracy is about hitting the bullseye – or whatever your specific target is. In AI data quality, accuracy means how close your collected data is to the "truth" or the desired outcome.

However, here's where it gets tricky with generative AI: often, there isn't a single clear-cut "right answer." In such cases, the model creator is the ultimate judge in deciding the right answer aligned to their view of the world. That's why we focus on how quickly we can adjust and improve our accuracy over time. It's like learning to aim better with each round of darts you play.

Why Both Matter

In the world of AI data quality, we need both precision and accuracy.

High precision ensures that our data is consistent and reliable. When generating human data for subjective tasks (human preferences, evals, RLHF), the human data factory must produce data with high precision. Every RLHF or evaluation task starts with instructions. Labeling instructions captures a clear point of view from the model creator’s perspective. Instructions also imply that there is a rating criteria and therefore generated data should indicate high precision. Low precision is most likely caused by poor instructions including low coverage of edgecase examples, or poor training, onboarding and execution of labeling projects in production.

Given this context, precision demonstrates a data factory's capability to produce data that has strong signal and consistency.

Good accuracy (or the ability to improve accuracy quickly) ensures that we're collecting our preferred data to train and evaluate our AI models in the way that we believe is most effective and correct. It is also essential to measure the rate of change of accuracy after a few rounds of calibration (feedback).

By measuring and improving both precision and accuracy, we can create a solid foundation for high-quality data – the fuel that powers better, more reliable AI systems. An ideal data factory is highly precise and can quickly calibrate to any desired accuracy level.

In the next two sections, we look at specific ways to measure the precision and accuracy of your data.

Precision metrics

Precision metrics focus on consistency and agreement among labelers, which are primarily derived from Labelbox's built-in consensus capability.Labelbox uses and tests the effectiveness of over 15 similar metrics for a wide range of supported annotations. Here we share some of our preferred metrics currently.

Inter-rater agreement (IRA)

Inter-rater agreement measures how much consensus there is between different raters (labelers) who are assessing the same data. While there are several methods to calculate IRA, such as Cohen's Kappa and Fleiss' Kappa, we'll focus on Krippendorff's Alpha due to its versatility and robustness in AI data labeling contexts.

Krippendorf’s alpha

Krippendorff's Alpha is a popular metric used to assess the agreement among raters because it works well for two or more raters, can handle missing data, and supports nominal, ordinal, and ranking data types. Its values range from -1 to 1, with the following interpretations.

Interpretation

Standard deviation of ratings

Standard deviation measures the dispersion of a set of ratings from their mean (average) value. In the context of AI data quality, it quantifies how much variation or spread exists in the ratings given by different AI trainers for the same item or task.

Interpretation

- Lower values indicate higher precision (more clustered ratings).

- Higher values suggest more disagreement or variability among raters.

- The scale of interpretation depends on the rating scale used.

- It's sensitive to outliers, which might skew the interpretation in small sample sizes.

Percent agreement

Percent agreement is a straightforward measure of inter-rater reliability that calculates the proportion of times different raters agree in their judgments. This is particularly useful in classification tasks (enums).

Interpretation

- Ranges from 0% to 100%, with higher percentages indicating better agreement.

- Generally, values above 75-80% are considered good, but this threshold can vary based on task complexity.

Accuracy metrics

Accuracy metrics assess how close the labelers' responses are to the ground truth. These are primarily derived from Labelbox's benchmark feature.

For preference ranking, selection or side by side evaluation tasks, you often do not have an initial ground truth available. It must be created. One of the best ways to create it is using consensus to pick a winner and then verify with highly trusted humans. Again having high precision is paramount in creating ground truth and ultimately measuring accuracy.

Accuracy score

- What it is: The proportion of correct responses compared to the ground truth.

- How it's calculated: (Number of correct responses / Total number of responses) * 100

- Interpretation: Ranges from 0% to 100%, with higher percentages indicating better accuracy.

- Example: If a labeler correctly classifies 90 out of 100 benchmark tasks, their accuracy score would be 90%.

Mean absolute error (MAE)

- What it is: The average absolute difference between predicted values and actual values.

- How it's calculated: Sum of absolute differences between predictions and actual values, divided by the number of predictions.

- Interpretation: Lower values indicate better accuracy. The scale depends on the range of the values being predicted.

- Example: If labelers are rating the quality of AI-generated text on a scale of 1-10, MAE would show how far off, on average, their ratings are from the ground truth.

F1 score

- What it is: A balanced measure of precision and recall, useful for classification tasks.

- How it's calculated: 2 * ((Precision * Recall) / (Precision + Recall))

- Interpretation: Ranges from 0 to 1, with 1 being the best possible score.

Example: Useful for tasks like sentiment analysis, where both correctly identifying positive sentiments (precision) and not missing any positive sentiments (recall) are important.

Metrics for various annotations

The choice of metric for evaluating data quality depends heavily on the type of annotation task. Different annotation types require different evaluation approaches to accurately assess precision and accuracy. Here are some of the most common annotation types and their corresponding metrics:

Managing quality

The adage "what gets measured gets managed" is particularly relevant in AI data quality. With real-time quality measurements at our disposal, the next challenge becomes how to effectively improve and manage quality. Below are some common scenarios in production that our teams have to intervene and correct.

Scenarios and strategies

Beyond precision and accuracy: Operational efficiency and trust

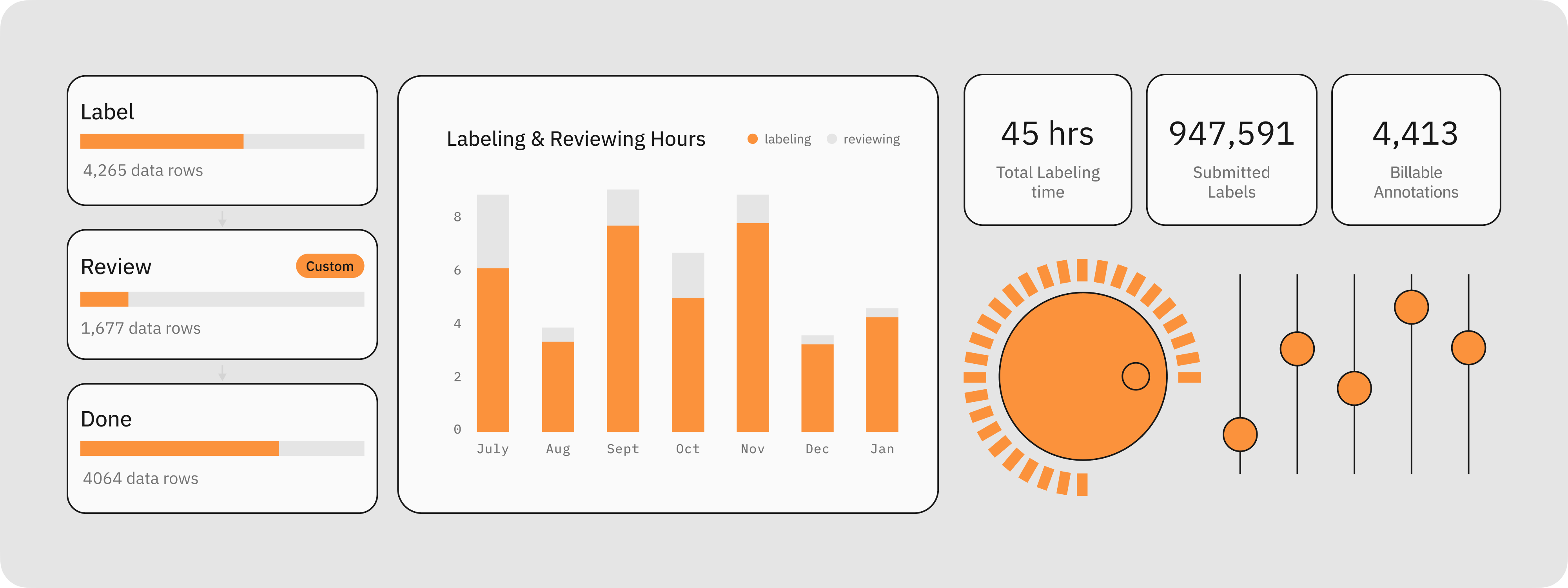

While precision and accuracy are crucial for data quality, it's essential to consider other factors that influence the overall effectiveness and efficiency of AI data labeling processes. These additional indicators provide valuable insights into resource allocation, workflow optimization, and labeler reliability.

Operational efficiency indicators

Achieving high precision and accuracy at any cost is often undesirable, as AI teams typically operate within specific data budgets to achieve expected value in terms of new model capability. The following metrics help balance quality with efficiency:

Notes: Outliers on either side of the mean for these metrics often reveal important insights. For example, consistently fast labelers with high accuracy might be candidates for more complex tasks, while those with long labeling times might need additional training or support.

Alignerr trust score

The Alignerr trust score is a sophisticated metric designed to evaluate and quantify the reliability of individual expert AI trainers (also known as an Alignerr). This multidimensional score incorporates various factors such as historical accuracy, consistency, task completion rate, and the ability to handle complex assignments.

In practice, the Alignerr trust score plays a crucial role in optimizing workflow and maintaining high data quality standards. High-trust AI trainers may be prioritized for more critical or complex tasks, while those with lower scores might receive additional training opportunities or be assigned to tasks with higher levels of oversight. This selective task distribution helps to improve overall data quality without necessarily increasing review overhead. Moreover, the trust score serves as a valuable feedback mechanism, providing AI trainers with insights into their performance while encouraging continuous improvement.

Operational aspects of AI data quality management

Ensuring high-quality data for AI training and evaluation goes beyond metrics and measurements. It requires robust operational processes, innovative technologies, and a skilled workforce. This next section explores key operational aspects that contribute to maintaining and improving data quality.

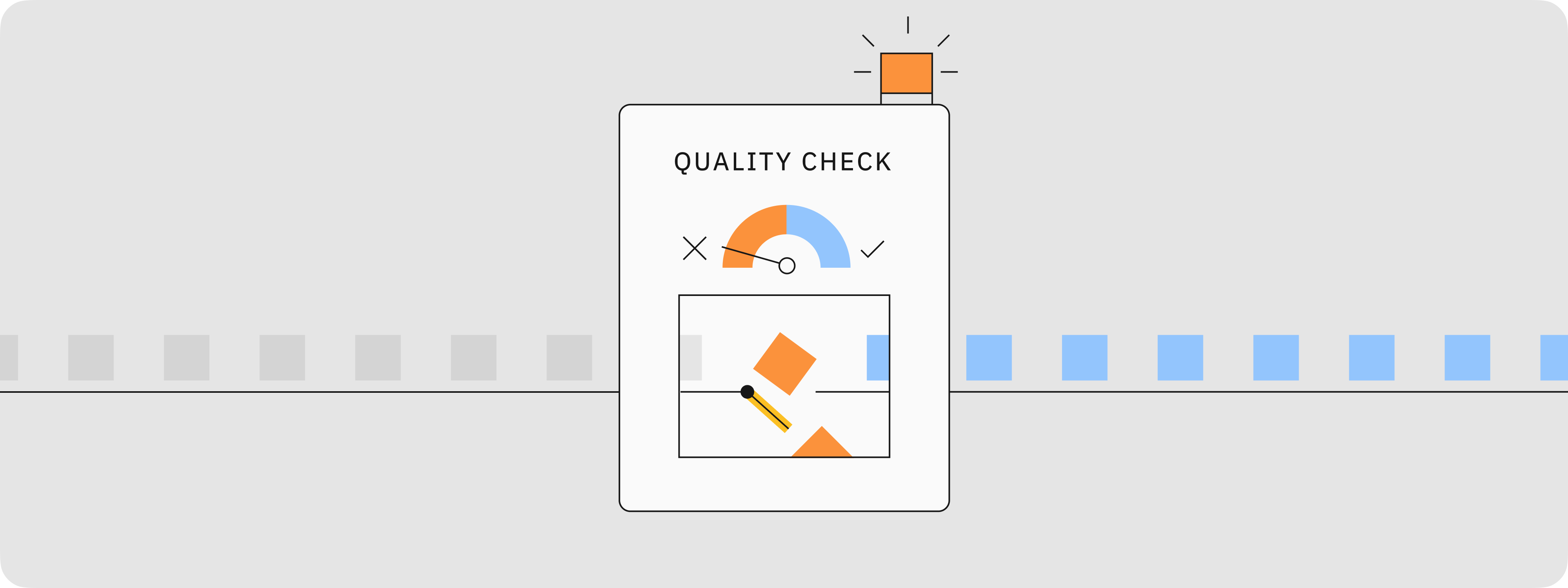

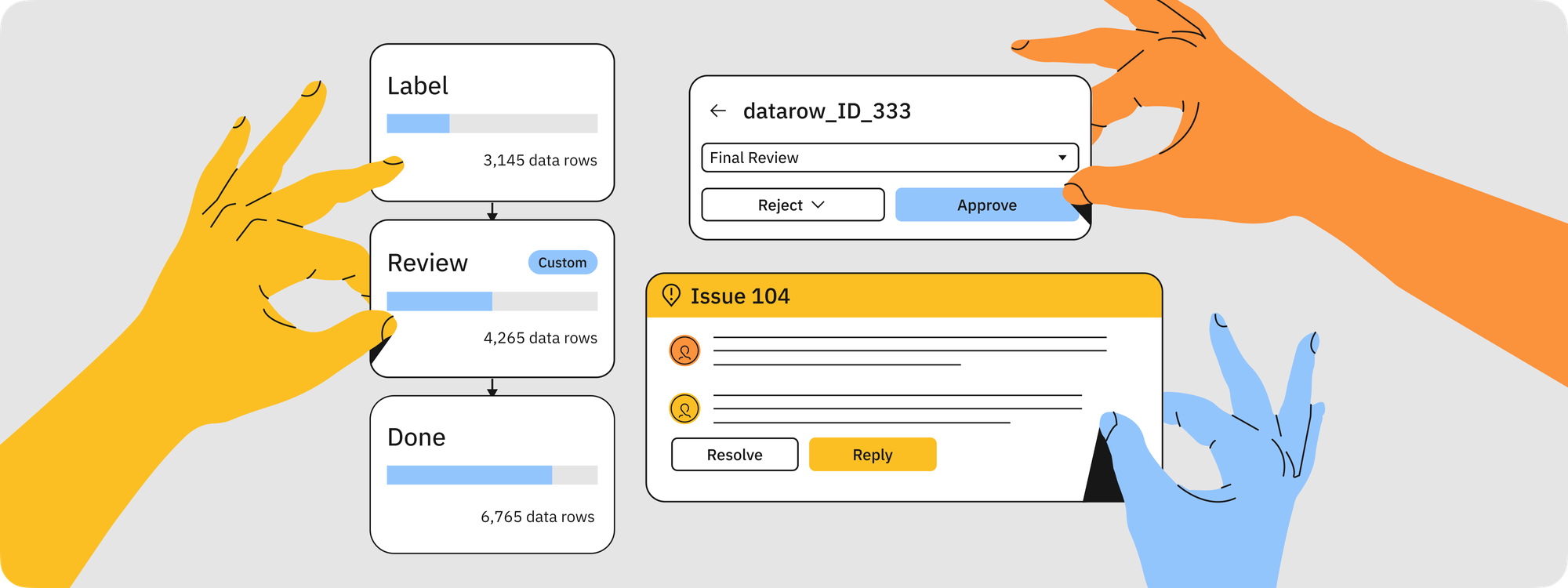

Multi-step review and rework

To enhance data quality, we employ a multi-step review and rework process that draws inspiration from proven scientific methods. One such approach is the double-entry method, commonly used in data entry to reduce errors:

1) Initial labeling: Two independent labelers perform the same task without knowledge of each other's work.

2) Comparison: The results are automatically compared to identify discrepancies.

3) Expert review: Where discrepancies exist, an expert reviewer examines both entries and makes a final determination.

4) Rework: If necessary, the task is sent back for rework with specific feedback.

This process significantly reduces the likelihood of errors and biases, as it requires multiple independent verifications before data is accepted. Additionally, we implement other quality control measures such as:

- Random spot checks by senior AI trainers

- Periodic recalibration sessions to ensure consistency across the team

- Automated checks for logical inconsistencies or outliers

By incorporating these scientific approaches into our workflow, we can consistently produce high-quality data that meets the rigorous standards required for AI training and evaluation.

LLM as a judge

Leveraging the power of Large Language Models (LLMs) can greatly enhance our quality control processes, particularly for text-based tasks. We use fine-tuned LLMs to assess the similarity between annotator-provided explanations and ground truth responses. This approach offers several advantages:

1) Scalability: LLMs can process large volumes of text quickly, allowing for comprehensive quality checks.

2) Consistency: Unlike human reviewers, LLMs apply the same criteria consistently across all evaluations.

3) Semantic understanding: Fine-tuned LLMs can capture nuanced similarities in meaning, even when the exact wording differs.

Our process for using LLMs as judges involves:

1) Fine-tuning an LLM on a dataset of high-quality, expert-verified responses for specific task types.

2) Using the fine-tuned model to generate similarity scores between annotator responses and ground truth.

3) Flagging responses that fall below a certain similarity threshold for human review.

This LLM-assisted approach allows us to efficiently identify potential quality issues while reducing the workload on human reviewers, who can focus their attention on the most challenging cases.

Setting the standard for expert AI trainers

While software and AI technologies are critical for data quality management, the most significant quality gains often come from highly skilled AI trainers (aka human raters). These experts bring nuanced understanding and critical thinking skills that are essential for handling complex generative AI data.

The impact of expert AI trainers is particularly evident in areas requiring deep domain expertise, such as STEM fields, advanced coding, and teaching AI systems complex skills like planning and reasoning. When training AI models to perform complex mathematical proofs, optimize code, or develop advanced problem-solving strategies, human expertise often surpasses current AI capabilities.

With the Alignerr network, Labelbox maintains exceptionally high standards in our recruitment process, with an acceptance rate of just 3%. Our rigorous selection process includes:

1) Initial screening: Looking for advanced degrees in STEM fields, extensive coding experience, or backgrounds in cognitive science and AI development.

2) Skills assessment: Evaluating critical thinking, pattern recognition, and problem-solving abilities crucial for high-quality AI data annotation in complex domains.

3) Expertise tests: Simulating real-world scenarios in STEM problem-solving, code optimization, or designing complex reasoning tasks for AI.

4) Technical interviews: Assessing depth of knowledge in specialty areas and understanding of AI and machine learning concepts.

By investing in top-tier human expertise across crucial domains, we ensure our data quality exceeds what can be achieved through software and AI alone, providing our clients with a competitive edge in developing next-generation AI capabilities.

Curating mission-specific expert AI trainer teams

At Labelbox, we take a unique approach to team formation for each customer project. Rather than assigning available annotators ad hoc, we create and curate dedicated teams specifically tailored to each mission. This approach ensures deep familiarity with the project and fosters a sense of shared purpose among team members.

Key aspects of our team curation process include:

1) Dedicated team formation: We assemble a team of experts whose skills and experience align closely with the project's specific requirements.

2) Minimum hour commitment: Team members are required to dedicate a minimum number of hours to the project. This ensures they gain the necessary context and develop proficiency in the specific task domain.

3) Context building: Through intensive onboarding and ongoing training, we help the team build a comprehensive understanding of the customer's goals, challenges, and quality expectations.

4) Mission-specific motivation: We cultivate a shared sense of purpose within the team, aligning their efforts with the project's broader objectives and potential impact.

5) Continuous improvement: Regular feedback sessions and performance reviews help the team refine their approach and continuously enhance their skills.

This dedicated team model not only leads to higher quality outputs but also results in increased efficiency over time as the team develops deep expertise in the customer's specific domain. By creating a focused, motivated team with a strong grasp of the project's context, we ensure that each customer receives the highest level of service and the best possible results for their AI initiatives.

Bringing it all together

In this post, we explored some of the key ways that Labelbox helps customers capitalize on an AI data factory and our approach to delivering high-quality data at scale. This strategy includes:

1) Precision and accuracy metrics tailored to various annotation types

2) Adaptive quality management strategies for different scenarios

3) Operational efficiency indicators to balance quality with cost-effectiveness

4) The Alignerr trust score for optimizing workflow and maintaining high standards

5) Multi-step review processes and LLM-assisted quality control

6) A rigorous selection process for expert AI trainers

7) Curated, mission-specific teams dedicated to each customer's unique needs

What sets Labelbox apart is the scientific approach to data quality and operating an AI data factory at scale. Just last month, over 50 million annotations were created with over 200,000 human hours. By continuously monitoring and analyzing data quality as it's produced, we enable immediate interventions and adjustments. This real-time approach allows AI teams to:

- Quickly identify and address quality issues before they compound

- Provide instant feedback to AI trainers, fostering rapid improvement

- Adapt to changing project requirements on the fly

- Ensure consistent, high-quality outputs throughout the entire data production process

Our confidence in this system is so strong that we offer something truly unique in the industry: a data quality guarantee. Customers only pay for data that meets the agreed-upon Service Level Agreement (SLA) for quality, throughput, and efficiency. This guarantee underscores our commitment to delivering not just data, but value and results for our customers.

Data as the bedrock for AGI and beyond

As the AI landscape evolves and frontier labs continue their rapid pace of innovation to drive us closer towards AGI, Labelbox remains committed to providing the highest quality data and most efficient services, ensuring that data quality never becomes the limiting factor in the pursuit of transformative AI technologies. We’re continuing to invest heavily in cutting-edge data science and alignment techniques, pushing the boundaries of what's possible in data quality and service performance.

We hope you found this post helpful for gaining a deeper understanding of how a data factory helps ensure data quality and accelerate AI development process. If you're interested in learning more, feel free to sign up for a free Labelbox account to try out the platform, or contact our team to learn more.

References

We’ve compiled a list of articles and research papers that have influenced our approach.

3) Survey of agreement between raters for nominal data using krippendorff's Alpha

4) Assessing Data Quality of Annotations with Krippendorff Alpha For Applications in Computer Vision

All blog posts

All blog posts