Labelbox • November 21, 2024

Multi-step reasoning: Teach LLMs to think critically

In today's rapidly evolving AI landscape, Large Language Models (LLMs) are becoming increasingly sophisticated. To further expand the potential of LLMs, they must be trained to not only provide increasingly complex answers, but to also demonstrate the thought process behind those answers.

Complex reasoning capabilities are crucial if LLMs are to help solve a wide range of complex, agentic tasks. But there's a catch.

Traditional LLM training often focuses on optimizing for accuracy and fluency. While this is essential, it doesn't necessarily equip LLMs with the ability to break down complex problems into smaller, actionable steps that you can follow. This limitation hinders their ability to provide clear, concise, and executable instructions.

To address this challenge, we're excited to introduce a powerful new annotation type in our multimodal chat solution: the "Message step task." With multi-step reasoning, you can now train LLMs to perform advanced reasoning tasks and provide high-quality step-by-step instructions, ultimately building more powerful and differentiated models.

Refine data quality and model performance

Labelbox's multi-step reasoning feature revolutionizes LLM training by automating the breakdown of complex responses into smaller, manageable steps that can then be individually evaluated, scored, and, when needed, rewritten.

In a model evaluation project whose ontology is configured with the new multi-step reasoning annotation type, responses are automatically broken into individual steps. Raters evaluate the accuracy of each step by marking it as correct, neutral, or incorrect. For each incorrect step, they are prompted to rewrite the step and enter a justification, and they can then choose to automatically regenerate the rest of the conversation after that step.
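To make the shape of this feedback concrete, here is a minimal Python sketch of how a single rated step could be represented. It is not the Labelbox SDK or export format: the names `Rating`, `StepEvaluation`, and `split_into_steps` are hypothetical, and the line-based splitter is only a stand-in, since this post does not describe how the editor splits responses.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Rating(Enum):
    CORRECT = "correct"
    NEUTRAL = "neutral"
    INCORRECT = "incorrect"


@dataclass
class StepEvaluation:
    """One rated step of a model response (hypothetical structure)."""
    text: str
    rating: Rating
    rewrite: Optional[str] = None
    justification: Optional[str] = None

    def __post_init__(self) -> None:
        # Mirror the workflow above: an incorrect step needs both a
        # rewritten version and a justification from the rater.
        if self.rating is Rating.INCORRECT and not (self.rewrite and self.justification):
            raise ValueError("incorrect steps need a rewrite and a justification")


def split_into_steps(response: str) -> List[str]:
    """Naive stand-in splitter: one step per non-empty line (an assumption)."""
    return [line.strip() for line in response.splitlines() if line.strip()]
```

For example, `StepEvaluation("2 + 2 = 5", Rating.INCORRECT, rewrite="2 + 2 = 4", justification="Arithmetic error")` is accepted, while the same step without a rewrite raises a `ValueError`.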

The new feature is designed to help in two key areas of LLM development:

- Producing high-quality training data: Obtain highly accurate datasets by checking, rating, and rewriting (if necessary) each step of a response.

- Building differentiated, more performant models: Create specialized models tailored to your specific use case using custom datasets and advanced training techniques.

How does multi-step reasoning work in Labelbox?

With the addition of multi-step reasoning to Labelbox’s multimodal chat editor, you can now easily rate each part of a model’s response to improve its understanding and generate more accurate outputs, a critical first step on the path to agentic reasoning. Here’s how to use this new feature in the Labelbox platform.

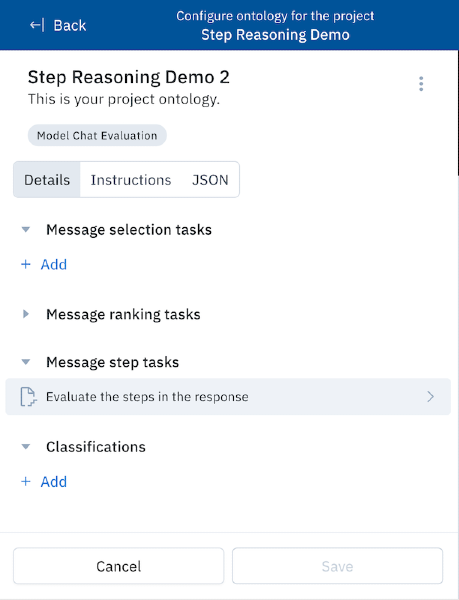

First, create a new project using the Multimodal chat task type, and then select or configure an ontology that includes a saved “Message step task.” Other per-message and global classifications and tasks can also be configured and used together with the new step reasoning tasks.

After configuring the project and ontology, follow these steps:

- After choosing one or more models to evaluate and clicking Start labeling, enter a prompt to generate a model response.

- Once the response is generated, click on the step reasoning task on the left-hand side of the screen if it is not already selected. Our step reasoning task (as seen in the image below) is called “Evaluate the steps in the response.” The multimodal chat editor will automatically split responses into individual steps and allow you to classify them as incorrect, neutral, or correct.

- Evaluate and rate each step individually. Inaccurate steps can be marked as “Incorrect,” after which the rater is prompted to rewrite the incorrect step, evaluate alternative steps suggested by the model, and provide a justification for the rewritten step.

- After submitting a rewritten response, if the option to automatically regenerate the remaining steps was selected during the ontology configuration, then the remaining steps are regenerated to take into account the new step.

- Iterate through this process until all steps are covered. This simple yet powerful process helps produce highly accurate and detailed model responses.
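The review loop described in these steps can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the editor's implementation: `review_response`, `rate`, and `regenerate` are hypothetical names, with `rate` standing in for the human rater and `regenerate` standing in for the model call that rewrites the remaining steps.

```python
from typing import Callable, List, Optional, Tuple

# (rating, rewrite, justification); the last two are None unless the
# rating is "incorrect". Hypothetical shape, chosen for illustration.
Verdict = Tuple[str, Optional[str], Optional[str]]


def review_response(
    steps: List[str],
    rate: Callable[[str], Verdict],
    regenerate: Optional[Callable[[List[str]], List[str]]] = None,
) -> List[dict]:
    """Rate steps in order; after a rewrite, optionally regenerate what follows."""
    steps = list(steps)  # work on a copy so the caller's list is untouched
    reviewed = []
    i = 0
    while i < len(steps):
        rating, rewrite, justification = rate(steps[i])
        if rating == "incorrect":
            if rewrite is None:
                raise ValueError("an incorrect step needs a rewrite")
            reviewed.append({
                "step": rewrite,
                "rating": rating,
                "original": steps[i],
                "justification": justification,
            })
            steps[i] = rewrite
            if regenerate is not None:
                # Discard the later steps and ask for new ones that
                # account for the accepted prefix, including the rewrite.
                accepted = steps[: i + 1]
                steps = accepted + regenerate(accepted)
        else:
            reviewed.append({"step": steps[i], "rating": rating})
        i += 1
    return reviewed
```

Passing `regenerate=None` corresponds to leaving the automatic regeneration option off in the ontology: later steps are kept as originally generated and simply rated in turn. The loop ends once every step has been rated, so it assumes `regenerate` returns a finite list.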

Interested in exploring the tool through an interactive demo? Take a 60-second product tour to learn how to get started with our multi-step reasoning tool without having to sign in or set up anything.

The outcome: Enhanced cognitive abilities in LLMs

This ability to perform granular evaluation and feedback on each step enables LLMs to develop crucial cognitive capabilities around problem-solving, decision making, and nuanced understanding. With multi-step reasoning, you can now train your models on a variety of advanced tasks, including:

- Complex reasoning: Easily break down intricate reasoning processes into smaller, manageable steps.

- Chain-of-thought: Directly analyze and evaluate each step in a chain of reasoning to improve model performance.

- Agentic capabilities: Strengthen models' ability to perform tasks independently by training them on step-by-step breakdowns of complex actions.
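One way to picture how step-level feedback could feed these training tasks is to flatten each reviewed response into per-step records. The sketch below is an assumption-heavy illustration, not a Labelbox export format or a prescribed training recipe: the function name `to_step_records`, the numeric labels, and the choice to treat a rater's rewrite as a correct step are all hypothetical.

```python
from typing import Dict, List

# Hypothetical numeric labels for the three ratings.
LABELS = {"correct": 1, "neutral": 0, "incorrect": -1}


def to_step_records(prompt: str, reviewed: List[Dict[str, str]]) -> List[dict]:
    """Flatten reviewed steps into (context, step, label) records.

    Each input item has "step" and "rating" keys, plus "rewrite" when the
    rating is "incorrect".
    """
    records = []
    context: List[str] = []  # steps accepted so far
    for item in reviewed:
        records.append({
            "prompt": prompt,
            "previous_steps": list(context),
            "step": item["step"],
            "label": LABELS[item["rating"]],
        })
        if item["rating"] == "incorrect" and item.get("rewrite"):
            # Assumption: the rater's rewrite counts as a correct step.
            records.append({
                "prompt": prompt,
                "previous_steps": list(context),
                "step": item["rewrite"],
                "label": LABELS["correct"],
            })
            context.append(item["rewrite"])
        else:
            context.append(item["step"])
    return records
```

Each record pairs a candidate step with the steps accepted before it, so a downstream training job could learn from the rejected step and its rewrite in the same context.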

Improve your AI models with Labelbox

Labelbox’s commitment to innovation is evident in these powerful advancements to our multimodal chat editor. By actively listening to user feedback and continually refining our platform, Labelbox empowers organizations to unleash the full potential of their AI initiatives.

Enjoy these two easy (and free) ways to explore these new features:

- Try it yourself: Explore LLM training with step-by-step evaluation using Labelbox's multi-step reasoning feature.

- Explore an interactive demo: Take a guided, interactive tour to see the multi-step reasoning feature in action.

Contact our team anytime with questions or if you are ready to discuss your LLM training needs and how Labelbox might be able to help.
