Labelbox • March 28, 2025

How to train and evaluate AI agents and trajectories with Labelbox

As AI agents become mainstream in 2025, the ecosystem of supporting tools has expanded rapidly. Yet one of the biggest hurdles remains: gathering and refining the right data to train and evaluate agents effectively. At Labelbox, we’re addressing this challenge head-on with new, agent-specific capabilities built into our Multimodal Chat Editor, making it easier than ever to create high-quality training and evaluation datasets.

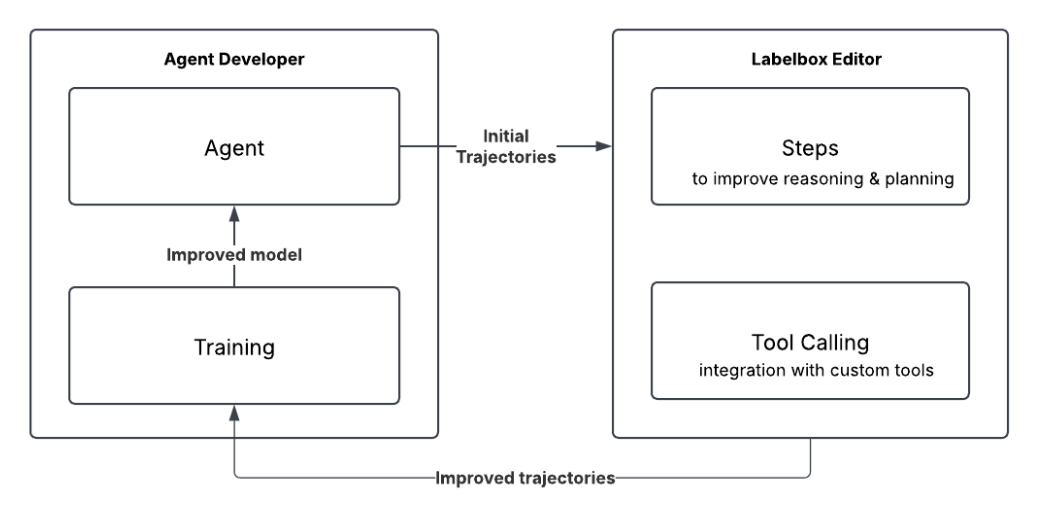

An agent follows a sequence of steps, known as a trajectory, to accomplish its goal. This trajectory includes reasoning steps, tool calls, and observations. Within the Labelbox Platform, you can now create, edit, and annotate trajectories, providing a robust framework for evaluating and refining AI agents.
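To make the structure concrete, here is a minimal sketch of how a trajectory could be represented in Python. The `Step` and `Trajectory` classes, their fields, and the example content are hypothetical illustrations, not Labelbox's internal data format.

```python
from dataclasses import dataclass, field
from typing import Literal, Optional

StepKind = Literal["reasoning", "tool_call", "observation"]


@dataclass
class Step:
    """One step in an agent trajectory (hypothetical schema)."""
    kind: StepKind
    content: str
    tool_name: Optional[str] = None  # set only for tool_call steps


@dataclass
class Trajectory:
    """The question, the ordered steps the agent took, and its final answer."""
    question: str
    steps: list[Step] = field(default_factory=list)
    final_answer: str = ""

    def tool_calls(self) -> list[Step]:
        # Keep only the steps where the agent invoked a tool.
        return [s for s in self.steps if s.kind == "tool_call"]


traj = Trajectory(
    question="What are the trade-offs between SQLite and PostgreSQL?",
    steps=[
        Step("reasoning", "I should look for comparison articles first."),
        Step("tool_call", "SQLite vs PostgreSQL trade-offs", tool_name="search_web"),
        Step("observation", "1. SQLite vs PostgreSQL: which to choose ..."),
    ],
)
print([s.tool_name for s in traj.tool_calls()])  # ['search_web']
```

Editing a trajectory then amounts to changing, inserting, or removing `Step` entries, or rewriting `final_answer`.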

In this blog post, we explain how to use the Labelbox Multimodal Chat Editor for two key tasks in building agents: (1) agent training and (2) agent evaluation.

Agent training

Despite advancements in LLMs, agents still struggle to perform the correct sequence of steps for many tasks that humans handle effortlessly. Human labelers can help train agents to operate more effectively and efficiently by identifying issues with existing trajectories and by recommending a better sequence of steps. They can also test the agent across a diverse set of scenarios to confirm that it behaves as expected for a wide range of inputs.

The resulting data on how to improve the trajectories can then be used to enhance the agent through one of the following approaches:

- Prompt optimization: LLM prompts are refined manually or with automated prompt optimization methods such as MIPRO or TextGrad.

- Model fine-tuning: The base LLM behind the agent is fine-tuned on the improved trajectories so that it produces better steps.
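For the fine-tuning route, each improved trajectory has to be serialized into a training example. The sketch below shows one possible layout, assuming a generic role/content chat format and text-based ReAct prefixes (`Thought:`, `Action:`, `Observation:`). The function names and layout are illustrative assumptions, not a format required by any particular fine-tuning API.

```python
import json


def _append(messages: list[dict], role: str, text: str) -> None:
    # Merge consecutive turns from the same role so user/assistant turns alternate.
    if messages and messages[-1]["role"] == role:
        messages[-1]["content"] += "\n" + text
    else:
        messages.append({"role": role, "content": text})


def trajectory_to_messages(question: str, steps: list[tuple[str, str]], final_answer: str) -> list[dict]:
    """Flatten (kind, content) steps into role/content chat messages.

    Reasoning and tool calls become assistant text; tool observations are fed
    back as user turns, as in a text-based ReAct loop (illustrative layout).
    """
    prefixes = {
        "reasoning": ("assistant", "Thought: "),
        "tool_call": ("assistant", "Action: "),
        "observation": ("user", "Observation: "),
    }
    messages = [{"role": "user", "content": question}]
    for kind, content in steps:
        if kind not in prefixes:
            raise ValueError(f"unknown step kind: {kind!r}")
        role, prefix = prefixes[kind]
        _append(messages, role, prefix + content)
    _append(messages, "assistant", "Final answer: " + final_answer)
    return messages


msgs = trajectory_to_messages(
    "Compare SQLite and PostgreSQL.",
    [
        ("reasoning", "Search for comparisons."),
        ("tool_call", 'search_web("SQLite vs PostgreSQL")'),
        ("observation", "Top result: https://example.com/comparison"),
        ("reasoning", "I have enough information."),
    ],
    "## SQLite vs PostgreSQL\n\n| Option | Strengths | Weaknesses |\n| --- | --- | --- |",
)
jsonl_line = json.dumps({"messages": msgs})  # one line per trajectory in a JSONL file
```

Training on the edited trajectories, rather than the raw ones, is what teaches the model the corrected sequence of steps.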

To demonstrate how to create training data in Labelbox to improve an agent, let’s walk through an example: a simple research agent that retrieves information from the web and generates a summarized report. We will then refine the agent’s initial trajectories and use them to optimize its prompt.

Create an agent

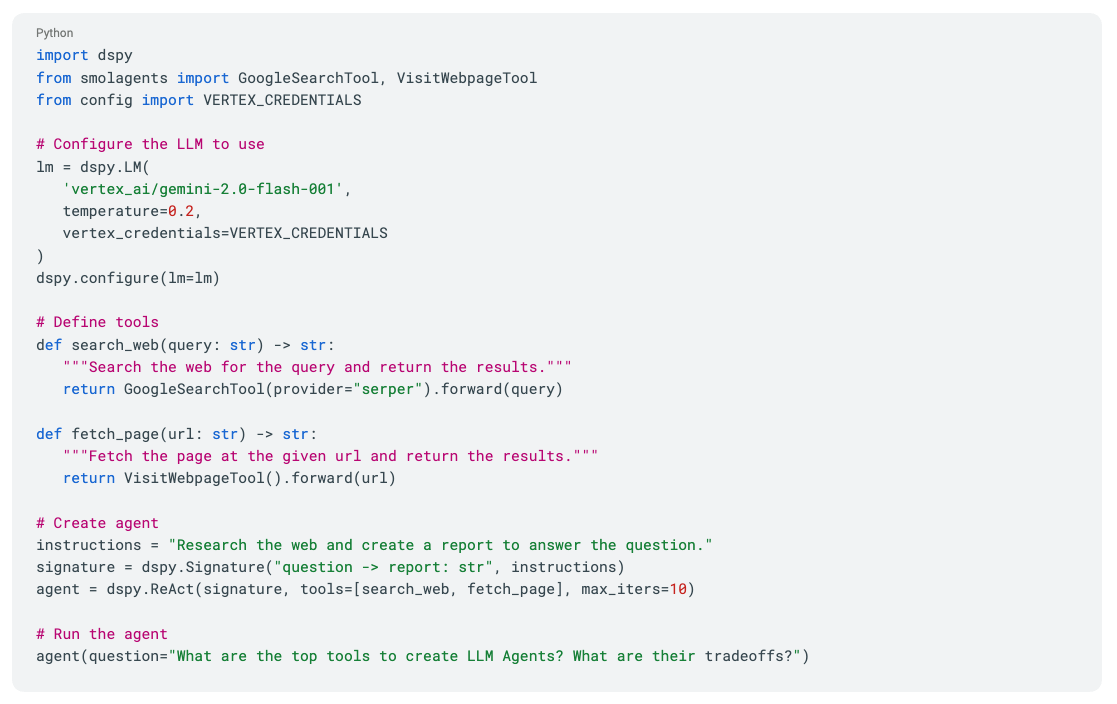

We will use the DSPy package to build the agent. The agent will use the ReAct framework for reasoning and action execution. It will have access to two key tools from the smolagents package:

- Search web – Performs a Google search and returns relevant results

- Fetch page – Retrieves the content of a web page in Markdown format
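DSPy's ReAct module runs the reasoning/action loop internally. As a rough, dependency-free illustration of the thought, action, observation cycle that produces a trajectory, the sketch below uses offline stub tools and a scripted policy in place of the LLM. `search_web`, `fetch_page`, `scripted_policy`, and `react` are hypothetical stand-ins; they are not the smolagents or DSPy APIs.

```python
from typing import Callable, Optional


# Offline stubs standing in for the smolagents web tools (hypothetical).
def search_web(query: str) -> str:
    return f"Results for {query!r}: https://example.com/comparison"


def fetch_page(url: str) -> str:
    return f"# Page at {url}\nSQLite is embedded; PostgreSQL is client-server."


TOOLS: dict[str, Callable[[str], str]] = {"search_web": search_web, "fetch_page": fetch_page}

Step = tuple[str, str, str, str]  # (thought, tool_name, tool_arg, observation)


def scripted_policy(question: str, history: list[Step]) -> tuple[str, str, str]:
    """Stand-in for the LLM: pick the next (thought, tool_name, tool_arg)."""
    if not history:
        return "Search for comparisons first.", "search_web", question
    if len(history) == 1:
        return "Read the top result.", "fetch_page", "https://example.com/comparison"
    # Two observations gathered: finish, passing the report as the argument.
    return "I have enough to report.", "finish", "- " + history[-1][3].splitlines()[1]


def react(question: str, policy, tools, max_iters: int = 5) -> tuple[list[Step], Optional[str]]:
    """Alternate thought -> action -> observation until the policy calls 'finish'."""
    history: list[Step] = []
    for _ in range(max_iters):
        thought, tool_name, tool_arg = policy(question, history)
        if tool_name == "finish":
            return history, tool_arg  # tool_arg carries the final answer
        observation = tools[tool_name](tool_arg)  # execute the chosen tool
        history.append((thought, tool_name, tool_arg, observation))
    return history, None  # iteration budget exhausted without an answer


steps, answer = react("SQLite vs PostgreSQL trade-offs", scripted_policy, TOOLS)
print([s[1] for s in steps])  # ['search_web', 'fetch_page']
print(answer)  # - SQLite is embedded; PostgreSQL is client-server.
```

Each entry in `history` is one reasoning step, tool call, and observation, which is exactly the material a labeler later reviews and edits.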

Enhance agent trajectory

The agent is then connected to Labelbox through a custom model integration and selected when configuring the Multimodal Chat project. To allow trajectories to be labeled, enable the following option in the project’s advanced settings:

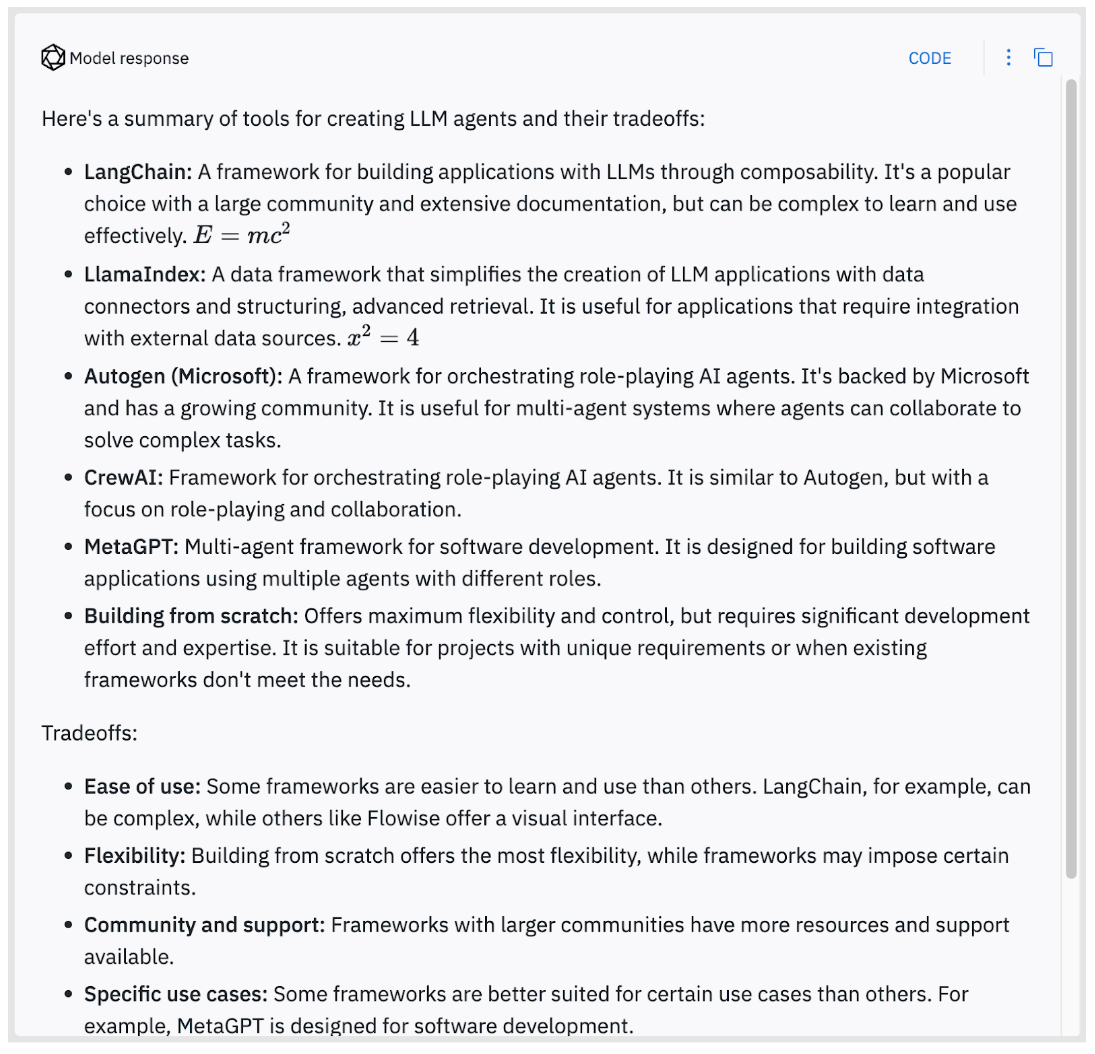

Running the agent produces the following initial trajectory:

Here, we see the agent’s step-by-step process, including its reasoning and its tool calls for searching the web and retrieving pages. Each step can be edited to improve the agent’s performance, whether by refining its reasoning, correcting its tool usage, or improving the final response.

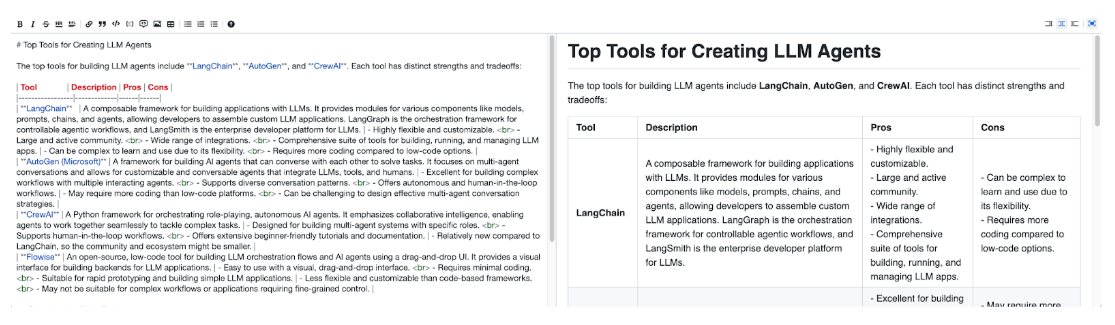

The agent’s report is presented as bullet points; however, we want a more structured output with a heading and a table of trade-offs. By clicking the edit button, we can refine and customize the report to better suit our needs.

In the edit view, we use Markdown to apply the desired formatting. Below is the updated report, now displaying information in a table.

We repeat this process to generate 10 trajectories with improved report formatting.
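The target format these edits converge on can be stated precisely. As an illustration only (the column names and example rows are assumptions, not the report shown in the screenshots), a report with a heading and a trade-offs table can be rendered in Markdown like this:

```python
def render_report(title: str, rows: list[tuple[str, str, str]]) -> str:
    """Render a Markdown heading followed by a trade-offs table (illustrative format)."""
    lines = [f"## {title}", "", "| Option | Strengths | Weaknesses |", "| --- | --- | --- |"]
    for row in rows:
        # Escape pipe characters so cell text cannot split a column.
        cells = [cell.replace("|", "\\|") for cell in row]
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)


print(render_report("SQLite vs PostgreSQL", [
    ("SQLite", "Zero setup, single file", "One writer at a time"),
    ("PostgreSQL", "Concurrent writers, rich SQL", "Requires a server process"),
]))
```

Having a fixed target like this also makes it easier to check later whether an optimized agent actually reproduces the format.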

Optimize agent

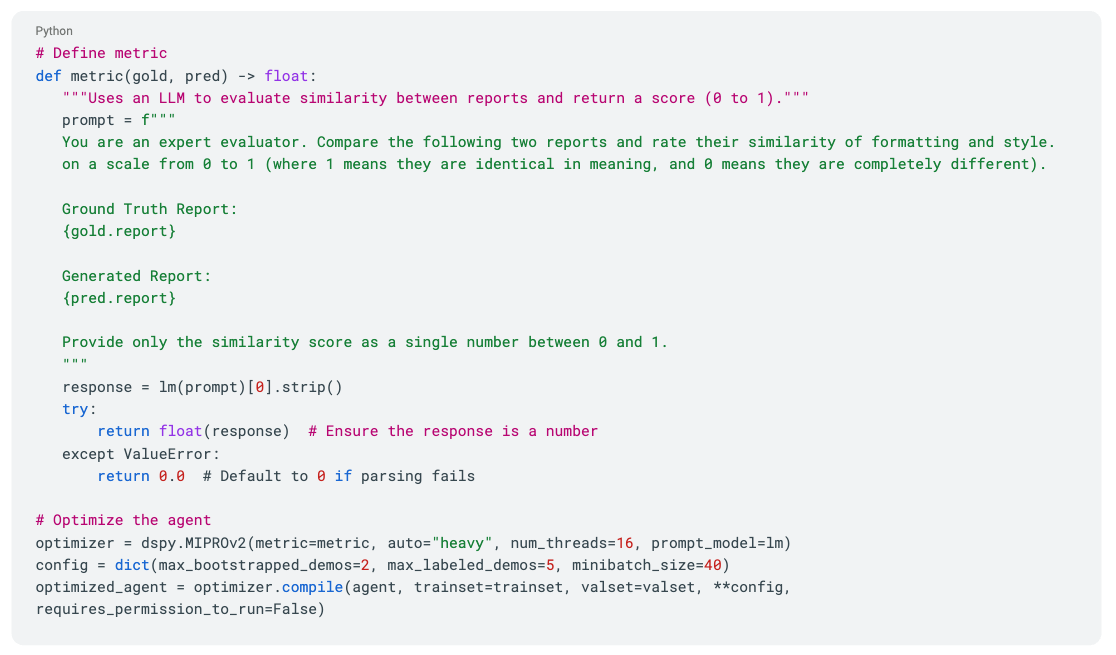

Next, we use the improved trajectories to optimize the agent’s prompt. The trajectory data is split into training and validation sets, and we use the MIPRO optimizer implemented in the DSPy library to refine the prompt.
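The split itself can be as simple as a seeded shuffle. This is a minimal sketch; the 30% validation fraction and the `split_examples` name are assumptions, since the post does not state the ratio used.

```python
import random


def split_examples(examples, val_fraction: float = 0.3, seed: int = 0):
    """Shuffle deterministically, then hold out a fraction of examples for validation."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed -> reproducible split
    n_val = max(1, round(len(items) * val_fraction))
    return items[n_val:], items[:n_val]  # (train, validation)


train, val = split_examples([f"trajectory-{i}" for i in range(10)])
print(len(train), len(val))  # 7 3
```

In a DSPy pipeline, the items would typically be `dspy.Example` objects with their input fields marked via `.with_inputs(...)`; plain strings are used here only to keep the sketch self-contained.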

To optimize the prompt, we first define a metric function that scores the agent’s output on each example; the optimizer uses this score to compare candidate prompts. Our focus is on report formatting and style, so we use an LLM-as-a-Judge to compare the agent’s output with the labeled (edited) report and assess their similarity.
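The shape of such a metric can be sketched without an LLM. Below, `heuristic_judge` is an offline stand-in that only checks whether the output shares the reference's Markdown heading and table; it is not an LLM-as-a-Judge, and a model call would replace it in practice. `make_metric` wraps any judge in the DSPy-style `metric(example, pred, trace=None)` signature; plain dicts stand in for DSPy's example and prediction objects, and the `report` field name is hypothetical.

```python
import re

# Formatting features the judge looks for (Markdown heading, table separator row).
FEATURES = {
    "heading": re.compile(r"^#{1,6} ", re.MULTILINE),
    "table": re.compile(r"^\|\s*:?-{3,}", re.MULTILINE),
}


def heuristic_judge(reference: str, candidate: str) -> float:
    """Offline stand-in for an LLM judge: share of the reference's formatting
    features that the candidate also has. Swap in a model call for real use."""
    wanted = [name for name, pattern in FEATURES.items() if pattern.search(reference)]
    if not wanted:
        return 1.0  # the reference has no formatting to match
    hits = sum(1 for name in wanted if FEATURES[name].search(candidate))
    return hits / len(wanted)


def make_metric(judge, threshold: float = 0.5):
    """Wrap a judge in a DSPy-style metric(example, pred, trace=None)."""
    def metric(example, pred, trace=None):
        score = judge(example["report"], pred["report"])
        # Be strict (pass/fail) when a trace is supplied, as during DSPy
        # bootstrapping; return the raw score otherwise.
        return score >= threshold if trace is not None else score
    return metric


metric = make_metric(heuristic_judge)
```

Keeping the judge pluggable means the same metric wrapper works for the cheap heuristic during development and for an LLM judge during the real optimization run.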

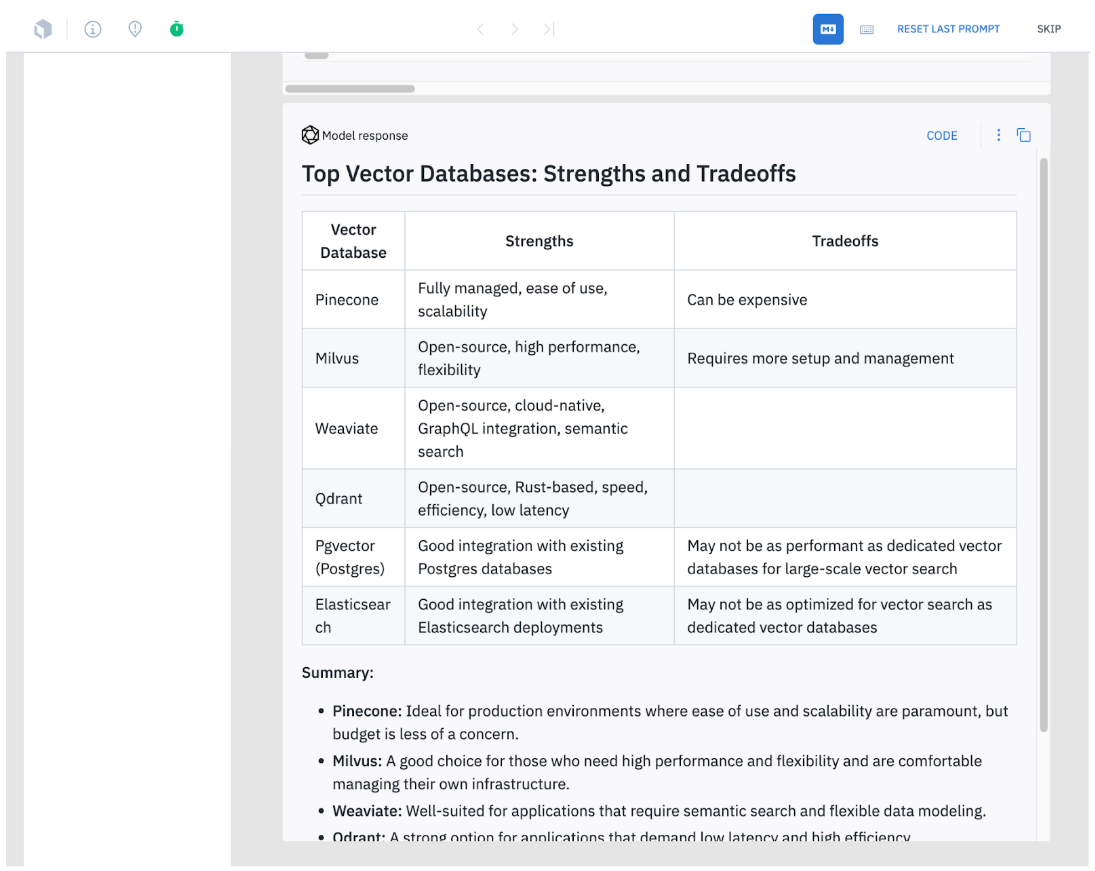

Next, we test the optimized agent on a different prompt and see that its output now includes a table similar to our training examples.

Agent evaluation

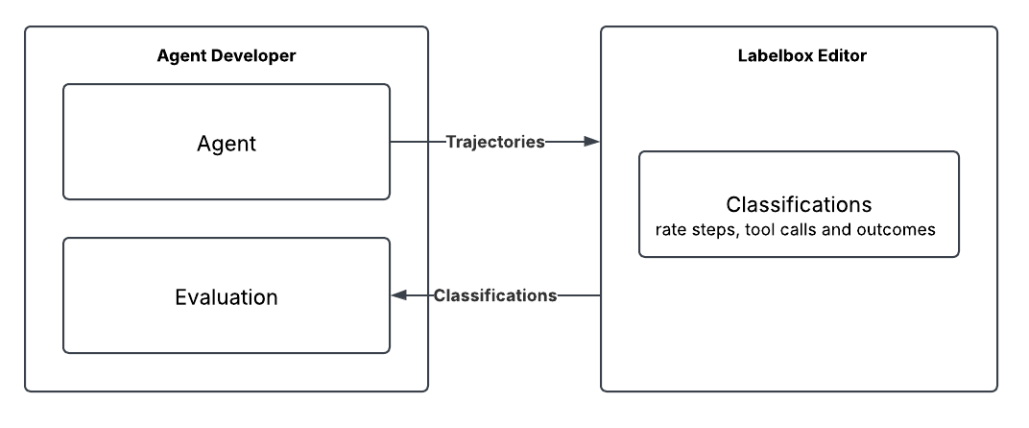

Now that we’ve looked at agent training, let’s explore how Labelbox can play a key role in agent evaluation. Human evaluation is essential for measuring an agent’s performance. With Labelbox, you can provide feedback not only on the overall outcome but also on specific steps. This supports both the development and production phases:

- Development: Evaluate whether code or data changes improve performance, and by how much, to guide release decisions

- Production: Monitor live agents to ensure quality, detect issues, and enable timely interventions
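For the development case, one simple way to summarize human ratings is to rate the same task set for the current and the candidate agent and compare. This sketch assumes binary acceptable/not-acceptable ratings keyed by hypothetical task ids; it also lists regressions, since an overall gain can hide tasks that got worse.

```python
def compare_versions(baseline: dict[str, int], candidate: dict[str, int]) -> dict:
    """Compare two agent versions rated on the same tasks.

    Each argument maps a task id to a human rating: 1 = acceptable, 0 = not.
    """
    shared = sorted(baseline.keys() & candidate.keys())
    if not shared:
        raise ValueError("no tasks were rated for both versions")
    base_rate = sum(baseline[t] for t in shared) / len(shared)
    cand_rate = sum(candidate[t] for t in shared) / len(shared)
    # Tasks that the candidate got wrong but the baseline got right.
    regressions = [t for t in shared if baseline[t] > candidate[t]]
    return {"baseline": base_rate, "candidate": cand_rate,
            "delta": cand_rate - base_rate, "regressions": regressions}


report = compare_versions({"q1": 1, "q2": 0, "q3": 0, "q4": 1},
                          {"q1": 1, "q2": 1, "q3": 1, "q4": 0})
print(report)  # {'baseline': 0.5, 'candidate': 0.75, 'delta': 0.25, 'regressions': ['q4']}
```

With only a handful of tasks a delta like this is noisy, so a larger task set is advisable before basing a release decision on it.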

The Labelbox editor includes customizable classifications for evaluating agent trajectories. It supports both global ratings for entire trajectories and granular, message-level classifications for specific steps. As in other Labelbox editors, these classifications can be tailored to your agent’s goals.
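To illustrate the two scopes, a classification schema can be modeled as data and used to sanity-check exported answers before analysis. This is a hypothetical Python model, not the Labelbox ontology format; the snake_case names mirror the classifications discussed in this post, and the yes/no options are an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    GLOBAL = "global"    # answered once for the whole trajectory
    MESSAGE = "message"  # answered per message/step


@dataclass(frozen=True)
class Classification:
    name: str
    scope: Scope
    options: tuple[str, ...] = ("yes", "no")


SCHEMA = {
    c.name: c
    for c in [
        Classification("exploration", Scope.GLOBAL),
        Classification("incorrect_statement", Scope.GLOBAL),
        Classification("safety_issue", Scope.GLOBAL),
        Classification("planning_error", Scope.MESSAGE),
        Classification("tool_call_error", Scope.MESSAGE),
    ]
}


def validate(answers, schema=SCHEMA):
    """Check (scope, name, value) answers against the schema; return a list of problems."""
    problems = []
    for scope, name, value in answers:
        spec = schema.get(name)
        if spec is None:
            problems.append(f"unknown classification {name!r}")
        elif spec.scope is not scope:
            problems.append(f"{name!r} is {spec.scope.value}-level, got {scope.value}")
        elif value not in spec.options:
            problems.append(f"{name!r} has invalid value {value!r}")
    return problems
```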

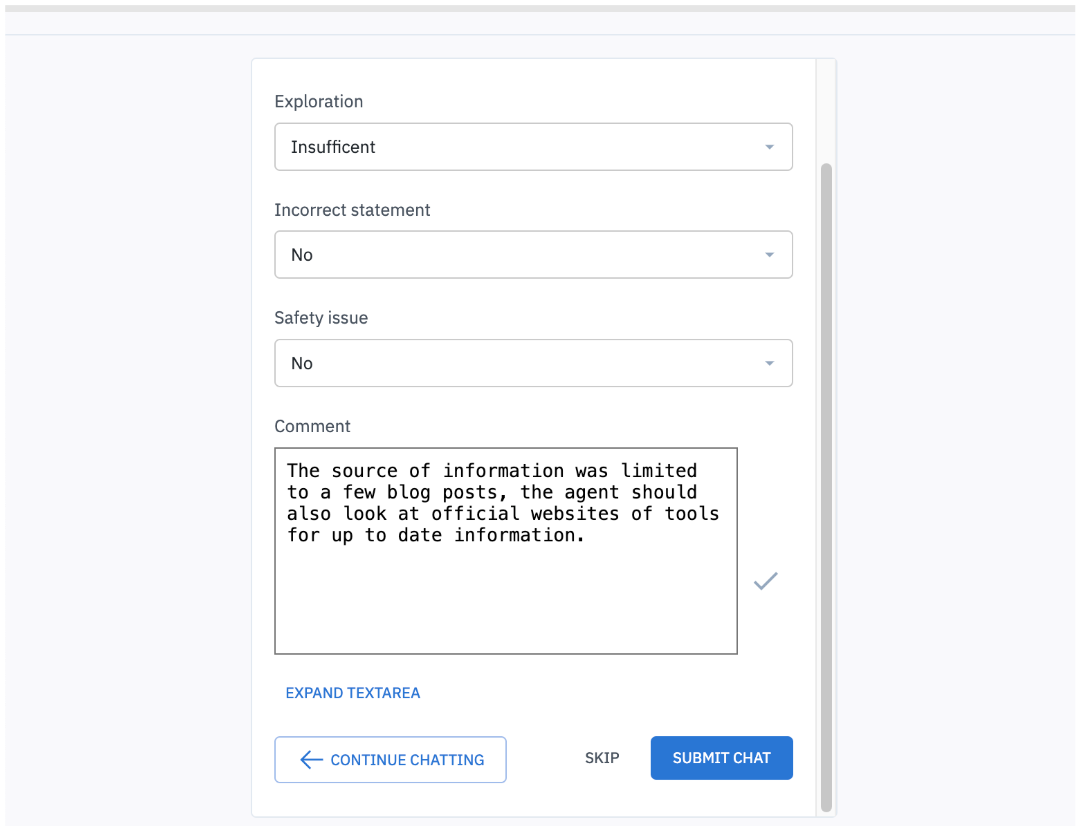

For instance, to evaluate our research agent, we can add global classifications that capture information such as:

- Exploration: Did the research agent explore enough resources to answer the question asked?

- Incorrect statement: Does the report have any incorrect information?

- Safety issue: Does the report have any misuse, toxicity or fairness issues?
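Once many trajectories have been rated, the global answers can be rolled up into per-issue rates, for example to track a production agent over time. A minimal sketch, assuming yes/no answers stored as one dict per trajectory under hypothetical keys; note that for Exploration it is a "no" that signals a problem.

```python
from collections import Counter

# For each yes/no question, the answer that signals a problem (assumed encoding).
BAD_ANSWER = {"exploration": "no", "incorrect_statement": "yes", "safety_issue": "yes"}


def issue_rates(labels: list[dict[str, str]], bad_answer: dict[str, str] = BAD_ANSWER) -> dict[str, float]:
    """Fraction of rated trajectories flagged for each global classification."""
    if not labels:
        return {}
    counts = Counter()
    for label in labels:
        for name, bad in bad_answer.items():
            if label.get(name) == bad:
                counts[name] += 1
    return {name: counts[name] / len(labels) for name in bad_answer}
```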

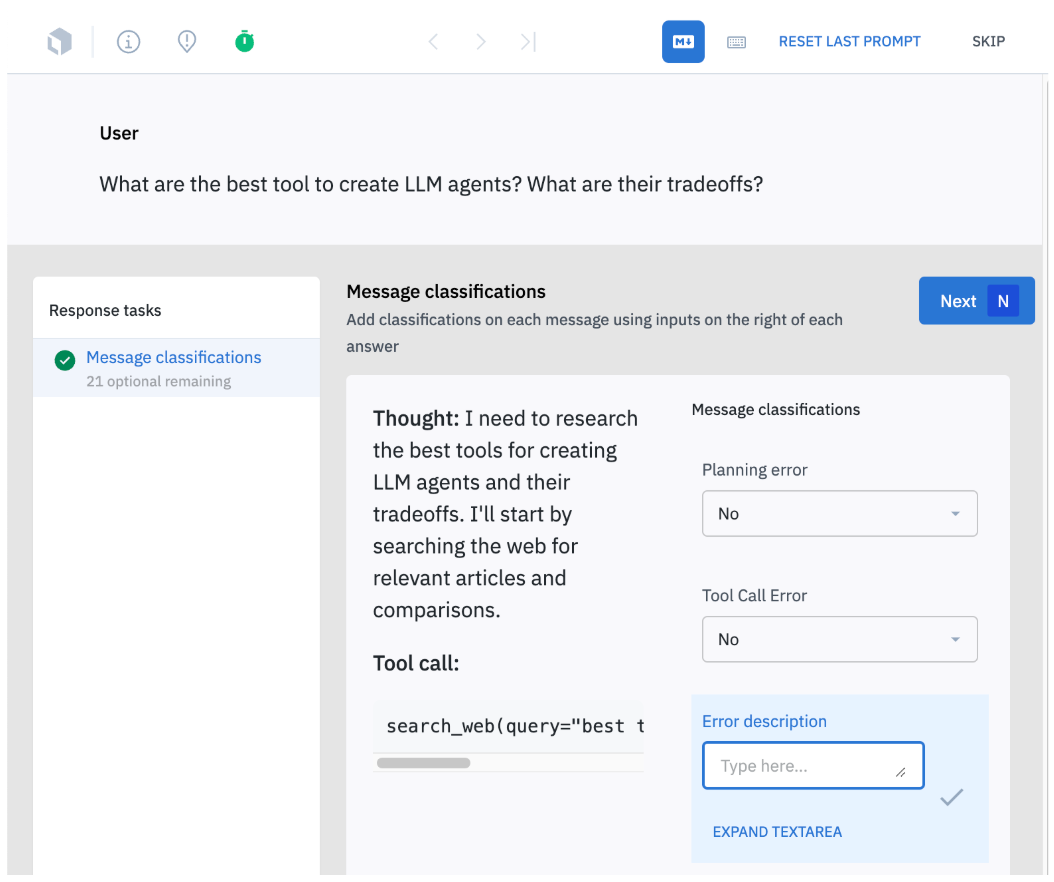

We can also add message-level classifications such as:

- Planning error: Did the agent make a mistake in planning?

- Tool call error: Was the right tool used with the correct arguments?
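Message-level labels make it possible to locate where a trajectory first went wrong, which is often more actionable than a single global score. A minimal sketch, assuming each step's answers are stored as a dict with hypothetical keys and yes/no values:

```python
from collections import Counter
from typing import Optional

# Hypothetical label keys; if one step has both errors, planning_error wins (checked first).
ERROR_NAMES = ("planning_error", "tool_call_error")


def first_error_step(step_labels: list[dict[str, str]]) -> Optional[tuple[int, str]]:
    """Return (step index, error name) for the first step flagged "yes", else None."""
    for index, answers in enumerate(step_labels):
        for name in ERROR_NAMES:
            if answers.get(name) == "yes":
                return index, name
    return None


def first_error_summary(trajectories: list[list[dict[str, str]]]) -> Counter:
    """Count, across trajectories, which error type appears first (or none)."""
    counts: Counter = Counter()
    for step_labels in trajectories:
        hit = first_error_step(step_labels)
        counts[hit[1] if hit else "no_error"] += 1
    return counts
```

If most failures start with a tool call error, for example, that points at tool descriptions or arguments rather than at the planning prompt.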

Conclusion

With Labelbox’s new suite of tools for training and evaluating agents, you can streamline the process of building more effective and reliable agents. By creating, editing, and annotating agent trajectories, you can better optimize agent prompts, fine-tune LLMs, and gather human feedback.

We encourage you to explore Labelbox's trajectory labeling capability and discover how it can enhance your agent development process. Your feedback is invaluable as we continue to refine and expand our platform's capabilities.

Sign up and try out the new functionality today, and share your thoughts. Together, we can shape the future of intelligent agents.
