Labelbox•March 12, 2021

Accelerate your AI into production with Labelbox

Last week, Labelbox ML engineers John Vega and Chris Amata led a webcast that walked through the core features of our training data platform, including annotation tools, quality management features, and model-assisted labeling.

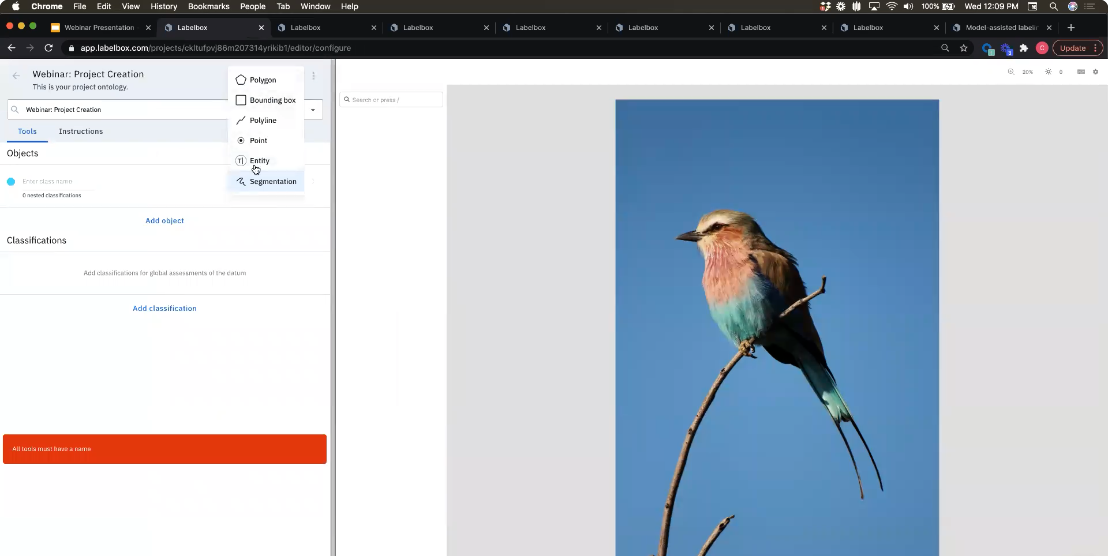

Annotate

Webcast attendees learned how to navigate the image, tiled image, video, and text annotation tools, as well as how to:

- Add bounding boxes

- Answer relevant questions about an image

- Add team members, both within the organization and on a project-by-project basis

- Add an external labeling team to a project

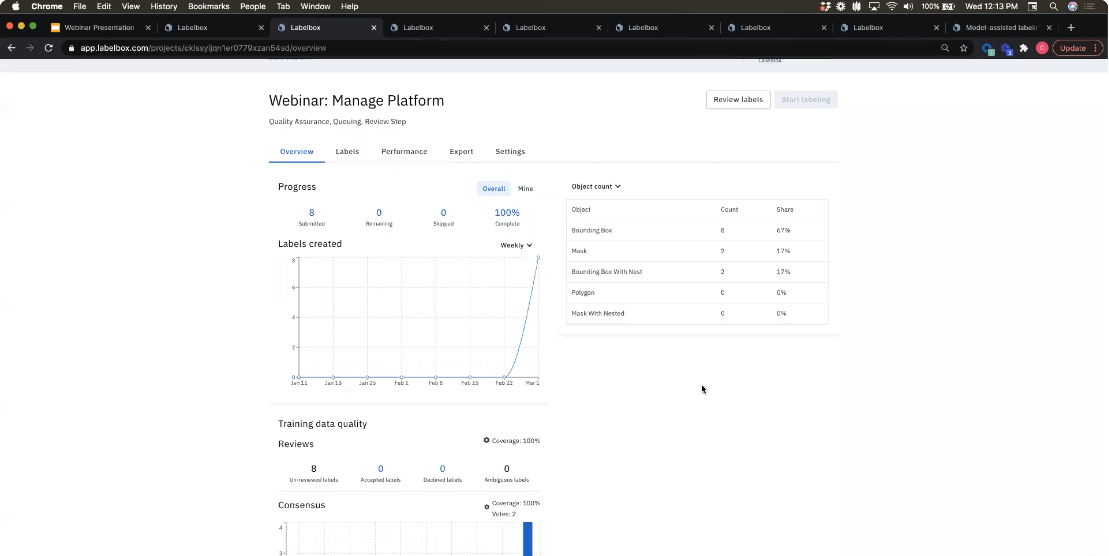

Manage

The webcast also covered how you can monitor the progress of any labeling project with metrics such as:

- The number of submitted, remaining, and skipped labels

- A breakdown of the objects captured within the data

- An overview of where the model has high and low confidence

- Performance metrics for individual labelers

Attendees also learned how to integrate two quality management tools into the labeling workflow: Benchmark (setting a gold standard against which labelers are measured) and Consensus (averaging how closely several labelers agreed when annotating the same image, to find errors).
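To make the Benchmark and Consensus ideas concrete, here is a minimal Python sketch for bounding boxes. It is an illustration only and not how Labelbox computes these scores: it assumes agreement is measured with intersection over union (IoU), and the function names `iou`, `benchmark_score`, and `consensus_score` are hypothetical.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Overlap is zero when the boxes do not intersect.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def benchmark_score(labeler_box, gold_box):
    """Assumed benchmark check: agreement between one labeler and the gold-standard box."""
    return iou(labeler_box, gold_box)


def consensus_score(boxes):
    """Assumed consensus check: mean pairwise IoU across labelers for the same object."""
    pairs = list(combinations(boxes, 2))
    if not pairs:
        return 1.0  # a single label trivially agrees with itself
    return sum(iou(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    gold = (0, 0, 2, 2)
    labels = [(0, 0, 2, 2), (0, 0, 2, 2), (1, 0, 3, 2)]
    print([round(benchmark_score(box, gold), 3) for box in labels])
    print(round(consensus_score(labels), 3))
```

A low score under either check flags an image for review. The threshold that counts as "low" is a project-level choice and is not specified in the webcast summary.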

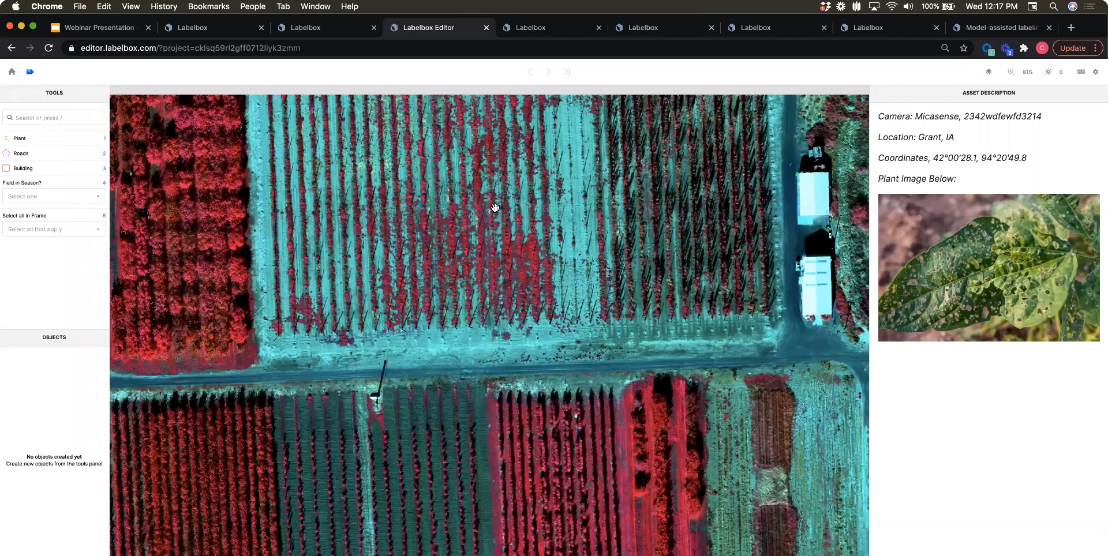

Iterate

Finally, the webcast covered features designed to help teams iterate on labeling projects as well as accelerate iteration on the model. Attendees learned how to add metadata within the image editor, including multiple image overlays and textual context on the sidebars. These metadata can make it easier to label images quickly and accurately.

Watch the webcast recording to learn more about these features.
