Labelbox•October 6, 2020

Customizing an optimal training data workflow using an API-first approach

Cape Analytics is a leading provider of AI-powered geospatial property data. The company uses deep learning and geospatial imagery to provide instant property intelligence for buildings across the United States. Its pioneering work on assessing risk and property value covers not only common property factors (pools, roof construction, solar panels) but also emerging risks such as vegetation coverage, detailed roof condition, and wildfire zones. Building performant machine learning models that extract intelligence about properties requires enormous amounts of high-quality labeled data. To meet that need, Cape's engineering and data science teams searched extensively for a complete, automated training data solution.

Part of this solution was automating data import and labeled-data export, which saved the Cape team the time it had previously spent moving data by hand. Because their data was connected via the API, Cape's labeled data carried metadata describing how each dataset was created and labeled, along with labeler feedback. This made the process much easier to manage and track as their projects grew more complex over time.

An API-first approach also gave the Cape team more control than other training data platforms offered. Cape Analytics' engineering team programmatically customized how they used Labelbox's GraphQL API so that they could interrupt the training data cycle and pull data out when needed, fitting the process seamlessly into how their ML workflow was structured.

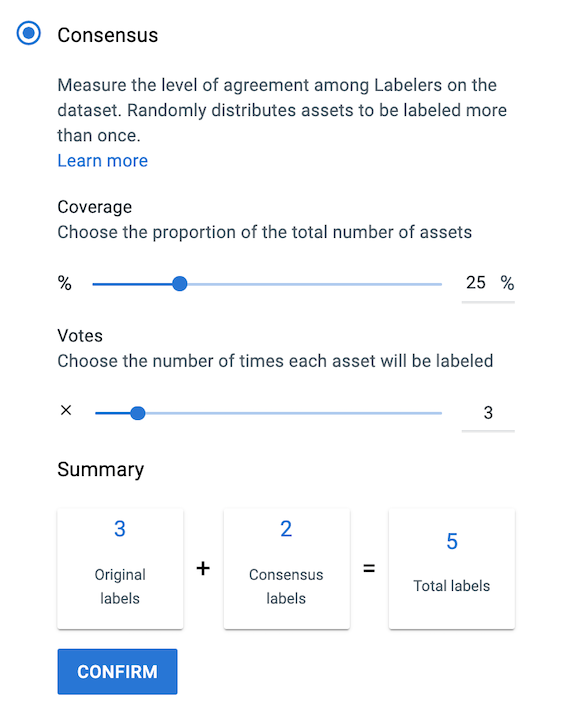

Cape's engineering team also regularly updated their labeler evaluation models and fine-tuned how their Consensus voting worked. Consensus is a Labelbox quality assurance (QA) tool that compares a single label on an asset to all of the other labels for that asset in order to ensure consistency. The Cape team collected multiple votes for a particular annotated object (e.g., the roof-covering material of a residential property) and customized this functionality via the Labelbox API so that they could pull the votes back into their analytics systems and apply their own algorithms to decide whether the collected votes were of satisfactory quality. If they then wanted more votes, they could simply call the API and dynamically inject additional votes into the process.
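The vote-quality check described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Cape's algorithm or Labelbox's Consensus implementation: votes are assumed to be categorical class names, agreement is assumed to be an exact match, and the function names and the `min_votes` and `threshold` values are hypothetical. Fetching votes from the Labelbox API and requesting new ones are deliberately left out.

```python
from collections import Counter


def consensus_scores(votes: list[str]) -> list[float]:
    """Score each vote by the fraction of the *other* votes that agree with it.

    Follows the idea of comparing a single label to all other labels on the
    same asset. Exact-match agreement is an assumption suited to categorical
    labels such as a (hypothetical) roof-covering material class.
    """
    if len(votes) < 2:
        # With fewer than two votes there is nothing to compare against.
        return [0.0 for _ in votes]
    counts = Counter(votes)
    others = len(votes) - 1
    # counts[v] - 1 excludes the vote itself from its own agreement count.
    return [(counts[v] - 1) / others for v in votes]


def needs_more_votes(votes: list[str], min_votes: int = 3,
                     threshold: float = 0.8) -> bool:
    """Hypothetical acceptance rule: request more votes if there are too few
    votes or if the mean agreement falls below the threshold."""
    if len(votes) < min_votes:
        return True
    scores = consensus_scores(votes)
    return sum(scores) / len(scores) < threshold


if __name__ == "__main__":
    votes = ["asphalt_shingle", "asphalt_shingle", "tile", "asphalt_shingle"]
    print(consensus_scores(votes))
    print(needs_more_votes(votes))
```

Here the single dissenting `tile` vote pulls the mean agreement down to 0.5, so `needs_more_votes` returns `True`; in a workflow like Cape's, that would be the point to call the API and request additional votes.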

Cape Analytics wanted a system flexible enough to integrate with their existing data collection, storage, and management systems. Labelbox's API complemented their existing processes, letting Cape's data science and engineering teams focus on delivering AI products rather than on managing their own training data infrastructure. By comparison, Cape's previous homegrown tools lacked this functionality and relied on more manual, labor-intensive workflows. To put the time savings in perspective: setting up a project used to take several days, plus an additional day to fetch the data; with Labelbox, the same work became a simple 10-minute process.
