Labelbox • January 28, 2022

January updates

ML teams need powerful, intuitive tools to help them identify trends in model performance and uncover edge cases. Over the past month, we’ve improved Model Diagnostics with more insightful comparison metrics and support for additional data types. We’ve also expanded model-assisted labeling (MAL) to support bounding boxes in video, and we’re excited to make MAL available to all paid customers.

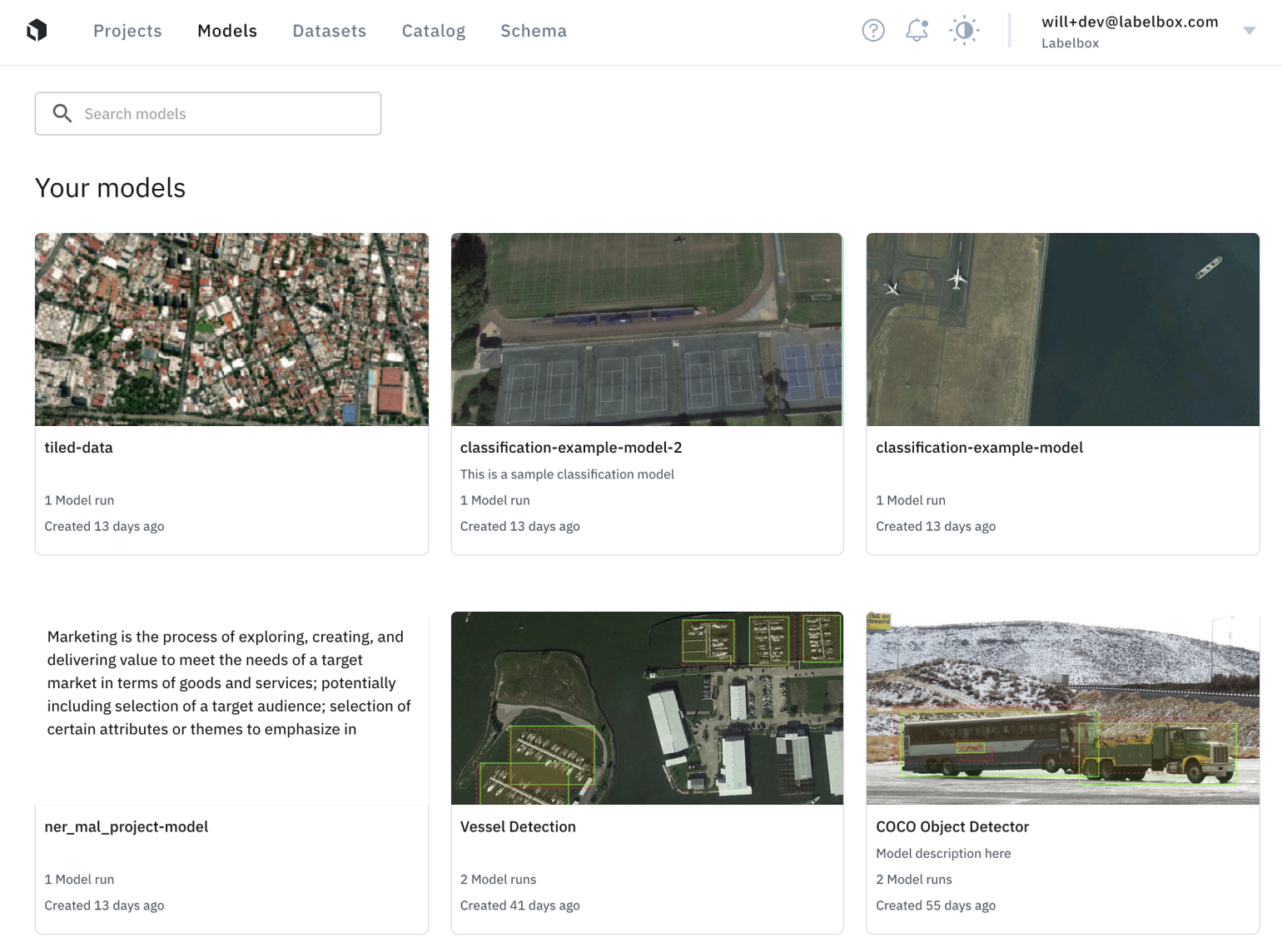

Track and compare changes in performance across iterations

We’ve introduced the model gallery so you can see all your work in one place. The gallery view shows when each model was created and how many model runs it has.

Within an individual model, you can now compare performance from one model run to the next to see where you’ve made the most meaningful improvements. In the example below, intersection over union (IoU) improved for most classes between versions 0.0.0 and 0.0.1.
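IoU measures how well a predicted box overlaps its ground-truth box: the area of their intersection divided by the area of their union, so 1.0 is a perfect match and 0.0 means no overlap. The sketch below computes it for two axis-aligned boxes and diffs per-class scores between two runs. It is illustrative only: the `(x_min, y_min, x_max, y_max)` box format and the helper names `iou` and `iou_change` are our own assumptions, not how Model Diagnostics computes its metrics internally.

```python
from typing import Dict, Tuple

# Assumed box format for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Width/height of the overlap rectangle; clamped to 0 when boxes don't meet.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_change(previous: Dict[str, float], current: Dict[str, float]) -> Dict[str, float]:
    """Per-class IoU difference between two model runs (positive means improved).

    Classes present in only one of the runs are skipped.
    """
    return {cls: current[cls] - previous[cls] for cls in previous.keys() & current.keys()}
```

Comparing per-class deltas rather than a single averaged score makes it easier to spot a class that regressed even when the overall average went up.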

To inspect changes in performance at the asset level, you can now overlay predictions from multiple model iterations on a single asset. This shows in more detail how a new iteration performed, so you can make more informed decisions about which data to prioritize for labeling when training your next iteration. Here you can see an example of a demo model that misidentified the laptop as a cat in version 0.0.0 before correctly identifying it as a laptop in version 0.0.1.

With these updates, you get deeper insight into your model’s performance and can quantify and visualize improvements from one iteration to the next.

Use Model Diagnostics with more data types

Model Diagnostics now supports text classification and tiled imagery. Compare model predictions to ground truth data, view detailed performance metrics, and identify weak spots and outliers in your data to guide your next iteration.
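For text classification, comparing predictions to ground truth usually comes down to per-class precision (how often a predicted label is right) and recall (how many true examples of a class the model found). A class with low recall is a natural weak spot to target with more labeled data. The sketch below is a generic illustration for single-label classification; the function name and input shape are assumptions, and it is not the metric code Model Diagnostics uses.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_class_metrics(truth: List[str], predicted: List[str]) -> Dict[str, Tuple[float, float]]:
    """Return {class: (precision, recall)} for single-label classification."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must be the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(truth, predicted):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # the model predicted p, but the true label was different
            fn[t] += 1  # the model missed the true label t
    metrics = {}
    for cls in set(truth) | set(predicted):
        predicted_pos = tp[cls] + fp[cls]
        actual_pos = tp[cls] + fn[cls]
        precision = tp[cls] / predicted_pos if predicted_pos else 0.0
        recall = tp[cls] / actual_pos if actual_pos else 0.0
        metrics[cls] = (precision, recall)
    return metrics
```

Sorting the result by recall gives a quick list of the classes the model struggles with most.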

Expanding MAL to paid customers and video

Our model-assisted labeling (MAL) workflow lets you import computer-generated predictions and load them as pre-labels on an asset.

MAL can make data labeling more efficient by reducing overall labeling time and cutting human labeling costs by 50-70%.

MAL for all paid Labelbox customers

- We’re excited to announce that MAL is now available for all paid customers of Labelbox.

- Learn more about model-assisted labeling use cases and benefits in our upcoming ‘ML Unboxed’ webinar on February 23rd. Stay tuned to register!

MAL for video

- We now provide MAL support for bounding boxes in our Video Editor.

- Users can now import computer-generated predictions as bounding box pre-labels for videos.
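Conceptually, a video bounding-box pre-label ties a data row and an ontology tool to box positions on a set of keyframes. The sketch below builds one such record in Python and serializes a batch as newline-delimited JSON. The field names (`uuid`, `schemaId`, `dataRow`, `segments`, `keyframes`, `bbox`) reflect our understanding of Labelbox’s NDJSON import format and should be checked against the current MAL documentation. The tracker output shape, the helper names, and the IDs are hypothetical placeholders, and uploading the file through the SDK is not shown.

```python
import json
import uuid
from typing import Dict, List, Tuple

# Hypothetical tracker output: {frame_number: (left, top, width, height)} in pixels.
Track = Dict[int, Tuple[float, float, float, float]]


def video_bbox_prelabel(data_row_id: str, schema_id: str, track: Track) -> Dict:
    """Build one NDJSON-style pre-label for a box tracked across video frames.

    Assumption: field names follow Labelbox's NDJSON import format as we
    understand it; verify them against the MAL documentation before use.
    """
    keyframes = [
        {"frame": frame, "bbox": {"top": top, "left": left, "height": h, "width": w}}
        for frame, (left, top, w, h) in sorted(track.items())  # keyframes in frame order
    ]
    return {
        "uuid": str(uuid.uuid4()),   # client-generated ID for this annotation
        "schemaId": schema_id,       # placeholder: ID of the bounding-box tool in your ontology
        "dataRow": {"id": data_row_id},  # placeholder: ID of the video data row
        "segments": [{"keyframes": keyframes}],  # one continuous segment of keyframes
    }


def to_ndjson(annotations: List[Dict]) -> str:
    """Serialize annotations as newline-delimited JSON (one object per line)."""
    return "\n".join(json.dumps(a) for a in annotations)
```

Keeping one JSON object per line means large prediction files can be streamed and validated record by record.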

Read more about the data and annotation types MAL supports in our documentation.

Manage webhooks through the UI

Webhooks let you orchestrate powerful workflows that respond to real-time events in Labelbox. With the release of the webhooks UI, you can quickly view, create, and deactivate webhooks directly in the app, actions that were previously only possible through the SDK. To try out this new feature, navigate to your account settings page. To learn more about webhooks, check out our docs.
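Whichever way you create a webhook, the service receiving it should confirm that each request really came from Labelbox. As we understand the webhook docs, requests are signed with HMAC-SHA1 using the secret you set when creating the webhook, and the signature arrives in the `X-Hub-Signature` header as `sha1=<hex digest>`. Treat that header name and algorithm as assumptions to confirm in the docs. The sketch below uses only Python’s standard library; `verify_signature` is a hypothetical helper name.

```python
import hashlib
import hmac


def verify_signature(secret: str, body: bytes, signature_header: str) -> bool:
    """Check an HMAC-SHA1 signature of the form 'sha1=<hex digest>'.

    Assumption: the sender signs the raw request body with the shared secret
    and sends the result in a header such as X-Hub-Signature.
    """
    expected = "sha1=" + hmac.new(secret.encode(), body, hashlib.sha1).hexdigest()
    # compare_digest runs in constant time, so it doesn't leak how much matched.
    return hmac.compare_digest(expected, signature_header)
```

Pass the raw request body bytes, before any JSON parsing, since re-serializing the payload can change its bytes and break the signature check.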

Editor updates

Label and preserve valuable insights with native PDF support

Open Beta - all plans

- You can now natively upload your PDFs to save time and preserve data integrity.

- Create bounding boxes around key characters, concepts, or page sections for optical character recognition (OCR).

Apply segmentation masks to video files

Open Beta - Pro plan

- Use our video segmentation tool to train your models to recognize objects or concepts with an organic shape.

- Intuitively and accurately segment individual high-value frames, or the best example of an object or concept in a video.

Natively upload and annotate your 3D DICOM data

Sign up for our Closed Beta

- Natively import and view DICOM data in 3D space (with axial, sagittal, coronal views).

- Create precise segmentation masks with orthogonal projections via our free-hand pen tool.
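The three standard views are orthogonal planes cut through the same 3D volume. The sketch below assumes the DICOM slices have already been stacked into a volume indexed as `volume[z][y][x]`, with z running head to foot, y front to back, and x left to right. Real DICOM orientation depends on attributes such as Image Orientation (Patient) and pixel spacing, so these axis conventions are simplifying assumptions, and the helper names are hypothetical. This only shows how the views relate to each other, not how the Labelbox editor renders them.

```python
from typing import List

Volume = List[List[List[float]]]  # assumed indexing: volume[z][y][x]
Slice = List[List[float]]


def axial(volume: Volume, z: int) -> Slice:
    """Plane perpendicular to the head-foot (z) axis: rows are y, columns are x."""
    return [row[:] for row in volume[z]]


def coronal(volume: Volume, y: int) -> Slice:
    """Plane perpendicular to the front-back (y) axis: rows are z, columns are x."""
    return [plane[y][:] for plane in volume]


def sagittal(volume: Volume, x: int) -> Slice:
    """Plane perpendicular to the left-right (x) axis: rows are z, columns are y."""
    return [[row[x] for row in plane] for plane in volume]
```

Because all three views index the same voxels, a mask drawn on one plane maps directly onto the corresponding row or column in the other two.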
