Labelbox•October 29, 2020

How Labelbox tackles technical challenges in distributed systems

Last week, our team had the pleasure of appearing on the Software Engineering Daily podcast. Among several topics, CEO Manu Sharma shared his insights on how machine learning teams face complex challenges when it comes to labeling collaboration and quality control — and how Labelbox’s technology and training data platform presents an efficient solution to both. Here are a few highlights from the conversation.

Collaboration

Just like software development, building a machine learning model involves many people working together to solve a problem, or to create an application that solves one. A single ML project requires domain experts, engineers, program and product managers, and labelers to collaborate.

These team members are often dispersed, and labelers are frequently outsourced, so building a system where everyone can do their work and collaborate securely is one of the biggest challenges for ML teams. Before Labelbox, a common band-aid solution was to distribute parts of a dataset to labelers through a mix of USB sticks, cloud storage links, and shared spreadsheets, an approach that was neither efficient nor secure.

Ensuring label accuracy

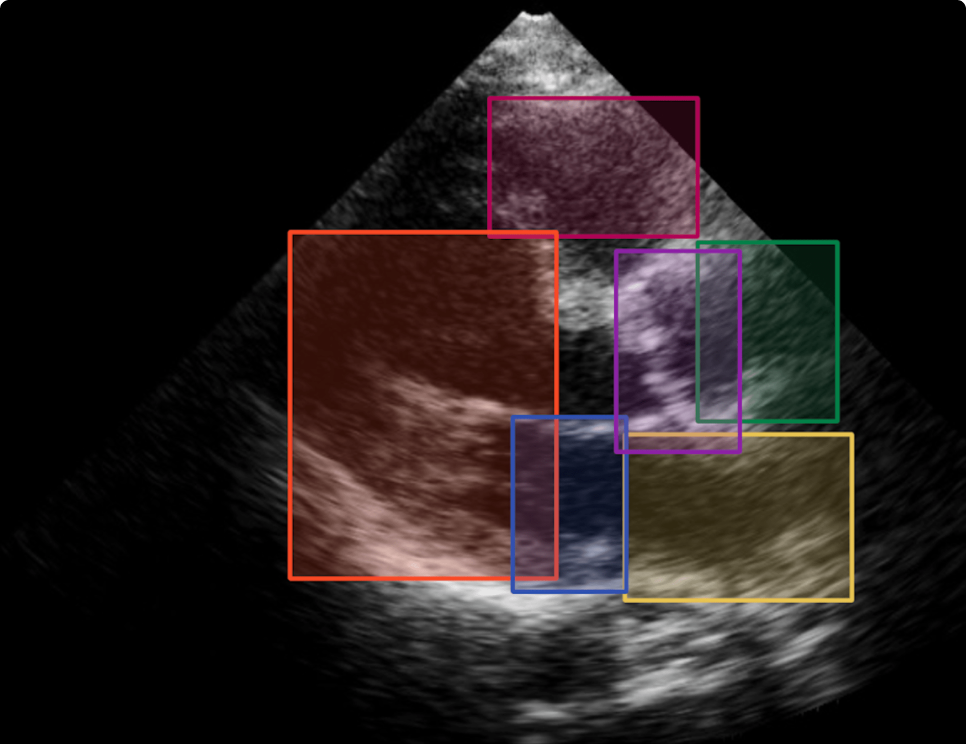

Quality control is complicated further because the definition of data quality varies by use case and, in specialized fields, can even depend on which expert is analyzing the data.

For example, in one healthcare use case, doctors disagreed about diagnoses based on tissue imagery up to 40% of the time, and an ML model that was wrong that often would have a hard time obtaining FDA approval.

There are three typical methods of quality control that ML teams use:

- Consensus involves collecting multiple “votes” for how one data point is labeled

- Benchmarking involves randomly quizzing labelers to ensure attentiveness and accuracy

- A review system adds an extra quality assessment step to the labeling “assembly line”: after the labelers complete their work, a separate team of reviewers checks it
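To make benchmarking more concrete, here is a minimal sketch that scores each labeler against a small set of data points whose correct labels are known in advance. The function name `benchmark_scores` and the tuple/dictionary formats are hypothetical illustrations, not Labelbox's API.

```python
from collections import defaultdict


def benchmark_scores(submissions, gold_labels):
    """Return each labeler's accuracy on benchmark (gold-standard) items.

    submissions: list of (labeler, item_id, label) tuples
    gold_labels: dict mapping item_id -> known correct label
    Submissions for items without a gold label are ignored.
    """
    correct = defaultdict(int)
    attempted = defaultdict(int)
    for labeler, item_id, label in submissions:
        if item_id not in gold_labels:
            continue  # ordinary work item, not a benchmark "quiz"
        attempted[labeler] += 1
        if label == gold_labels[item_id]:
            correct[labeler] += 1
    return {who: correct[who] / attempted[who] for who in attempted}


gold = {"img-1": "cat", "img-2": "dog"}
subs = [
    ("alice", "img-1", "cat"),
    ("alice", "img-2", "dog"),
    ("bob", "img-1", "cat"),
    ("bob", "img-2", "cat"),
    ("bob", "img-3", "dog"),  # not a benchmark item, so it is skipped
]
print(benchmark_scores(subs, gold))  # {'alice': 1.0, 'bob': 0.5}
```

Mixing a few gold-standard items into the normal work stream, rather than announcing them, is what lets a score like this reflect a labeler's everyday attentiveness.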

To account for differing diagnoses, the ML team needs a quality tool like consensus, in which each piece of training data is labeled by multiple expert physicians so that their different perspectives combine into a higher-quality ground truth.
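A simple way to picture consensus is a majority vote with an agreement score. The sketch below assumes each data point collects one label per physician, picks the most common label, and flags the item for further review when agreement falls below a threshold. The `consensus` function and the `min_agreement` value of 0.6 are hypothetical choices for illustration; real consensus scoring (for example, for bounding boxes or segmentation masks) usually compares geometry rather than exact label strings.

```python
from collections import Counter


def consensus(labels, min_agreement=0.6):
    """Majority-vote consensus for a single data point.

    labels: labels submitted for one data point by different labelers.
    Returns (winning_label, agreement, needs_review), where agreement is the
    fraction of labelers who chose the winning label. On a tie, the label
    encountered first wins (Counter.most_common preserves insertion order).
    min_agreement is a hypothetical threshold for flagging an item.
    """
    if not labels:
        raise ValueError("consensus needs at least one label")
    counts = Counter(labels)
    winner, votes = counts.most_common(1)[0]
    agreement = votes / len(labels)
    return winner, agreement, agreement < min_agreement


# Three physicians review the same tissue image; two agree.
print(consensus(["benign", "malignant", "malignant"]))
# Four physicians with no clear majority: the item is flagged for review.
print(consensus(["benign", "malignant", "benign", "atypical"]))  # ('benign', 0.5, True)
```

Returning the agreement score alongside the winning label keeps the disagreement visible, which matters in cases like the tissue-imagery example where the experts themselves often differ.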

The Labelbox solution: queueing and quality control tools

Labelbox engineers addressed these challenges in one of their earliest innovations: the queueing system. This system enables both collaboration and quality control measures. Datasets are moved to the cloud and automatically shared among team members in real time so that everyone can collaborate asynchronously.

Labelbox also gives ML teams the tools for effective quality management, so that labels meet each team's own specialized requirements rather than a generic standard. The platform can randomly quiz labelers to meet benchmarking requirements, and it can also enable consensus. In the differing-diagnoses example, the queueing system ensures that each data point has been labeled by the required number of experts.
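The core idea behind a consensus-aware queue, where each data point keeps being served until enough distinct experts have labeled it, can be sketched in a few lines. This is a hypothetical, in-memory illustration of the concept described above, not Labelbox's implementation; the `ConsensusQueue` class and its methods are invented names.

```python
from collections import deque


class ConsensusQueue:
    """Serve data points until each has `required` labels from distinct labelers.

    Hypothetical in-memory sketch: no persistence, reservations, or timeouts.
    """

    def __init__(self, item_ids, required):
        self.required = required
        self.pending = deque(item_ids)                 # items still needing labels
        self.labels = {item: {} for item in item_ids}  # item -> {labeler: label}

    def next_item(self, labeler):
        """Return the next pending item this labeler has not labeled, or None."""
        for _ in range(len(self.pending)):
            item = self.pending[0]
            self.pending.rotate(-1)  # move it to the back so work is spread out
            if labeler not in self.labels[item]:
                return item
        return None

    def submit(self, labeler, item, label):
        """Record a label; retire the item once enough distinct labelers finish."""
        self.labels[item][labeler] = label
        if len(self.labels[item]) >= self.required and item in self.pending:
            self.pending.remove(item)

    def done(self):
        return not self.pending


q = ConsensusQueue(["scan-1", "scan-2"], required=2)
for doctor in ["dr_a", "dr_b"]:
    while (item := q.next_item(doctor)) is not None:
        q.submit(doctor, item, "benign")
print(q.done())            # True
print(q.labels["scan-1"])  # {'dr_a': 'benign', 'dr_b': 'benign'}
```

Keying labels by labeler means the same person can never count twice toward an item's quota. A production queue would also need to reserve items while they are being worked on, so that more labelers than required are not handed the same data point at once.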

“There is no silver bullet for quality, because quality in itself is a...subjective term. But what AI teams want is to have the tools and workflows to administer the policy of the quality that they see fit.” — Manu Sharma, CEO and Founder, Labelbox

Labelbox provides a comprehensive suite of quality control tools that help teams meet their own quality standards.

To learn more about the challenges that Labelbox engineers solve for machine learning teams, we invite you to listen to the Software Engineering Daily podcast episode. If these types of technical challenges interest you, please check out our Careers page — we are hiring for many roles in engineering, support, and more.
