Deep learning for live cell shape detection

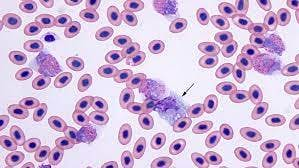

Summary: Researchers from Iowa State University set out to advance atomic force microscopy (AFM) as a platform for high-resolution topographical imaging. AFM is used for mechanical characterization of a wide range of samples, including live cells, proteins, and other biomolecules. It is also instrumental for measuring interaction forces and binding kinetics of protein–protein or receptor–ligand interactions on live cells at the single-molecule level.

Challenge: Performing force measurements and high-resolution imaging with AFM, along with the accompanying data analysis, is time-consuming and requires special skill sets and continuous human supervision. Researchers have recently explored applications of artificial intelligence (AI) and deep learning (DL) in the bioimaging field. However, applications of AI to AFM operations for live-cell characterization have been little explored until now.

Findings: The researchers implemented a deep learning framework that automatically selects samples based on cell shape to guide AFM probe navigation during biomechanical mapping. They established closed-loop scanner trajectory control for automated navigation, which allows multiple cell samples to be measured at high speed. With this, they achieved a 60× speed-up in AFM navigation and reduced the time spent searching for a particular cell shape in a large sample. Their innovation applies directly to many bio-AFM applications, combining AI-guided automation through image data analysis with smart navigation.

How Labelbox was used: The researchers used Labelbox to label their data, with experts annotating each cell by drawing a bounding box around it and labeling it with the correct shape. Collecting these images was time-consuming and tedious, as the user had to manually scan the cell samples and capture the images. Annotating them, especially the low-quality images, was also painstaking, which resulted in a small annotated dataset. To address this challenge, they applied data augmentation on the fly (during training): rotating the original images by 90° clockwise or counter-clockwise, rotating them by 180°, flipping them upside down, and mirroring them left to right. This added samples with different orientations to the original dataset, which in turn made the DL network more robust to the variety of cell shape orientations encountered during inference.
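The five augmentations described above can be sketched with NumPy. This is a minimal illustration, not the researchers' implementation: the function names, the `(x_min, y_min, x_max, y_max)` pixel box format, and the choice to apply one random transform per sample are all assumptions made for the example. Because the annotations are bounding boxes, the sketch moves the box along with the image.

```python
import numpy as np

# Hypothetical transform names for the five augmentations listed in the text.
TRANSFORMS = ("rot90_cw", "rot90_ccw", "rot180", "flip_ud", "flip_lr")


def augment(image, box, name):
    """Apply one named transform to an image and its bounding box.

    image: array of shape (H, W) or (H, W, C).
    box:   (x_min, y_min, x_max, y_max) in pixels, max edges exclusive
           (an assumed format, not taken from the source).
    """
    h, w = image.shape[:2]
    x0, y0, x1, y1 = box
    if name == "rot90_ccw":
        # np.rot90 with k=1 rotates counter-clockwise; height and width swap.
        return np.rot90(image, k=1), (y0, w - x1, y1, w - x0)
    if name == "rot90_cw":
        # k=-1 rotates clockwise; height and width swap.
        return np.rot90(image, k=-1), (h - y1, x0, h - y0, x1)
    if name == "rot180":
        return np.rot90(image, k=2), (w - x1, h - y1, w - x0, h - y0)
    if name == "flip_ud":
        # Upside-down flip: only the vertical coordinates change.
        return np.flipud(image), (x0, h - y1, x1, h - y0)
    if name == "flip_lr":
        # Left-right mirror: only the horizontal coordinates change.
        return np.fliplr(image), (w - x1, y0, w - x0, y1)
    raise ValueError(f"unknown transform: {name}")


def random_augment(image, box, rng):
    """Pick one of the five transforms at random, as might be done per sample during training."""
    name = str(rng.choice(TRANSFORMS))
    return augment(image, box, name)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = np.zeros((4, 6), dtype=np.uint8)  # toy 4x6 "micrograph"
    image[0:2, 1:3] = 1                       # toy "cell" filling its box
    new_image, new_box = random_augment(image, (1, 0, 3, 2), rng)
    print(new_image.shape, new_box)
```

Applying the transform at load time, rather than saving augmented copies, keeps the stored dataset small while still exposing the network to different orientations across epochs.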

Read the full PDF here.
