A benchmark for long-form medical question answering

Researchers from Dartmouth College recently highlighted the need for comprehensive evaluation benchmarks for large language models (LLMs) in the medical domain. Existing benchmarks often rely on automatic metrics and multiple-choice questions, which do not fully capture the complexities of real-world clinical applications. To bridge this gap, the authors introduced a publicly available benchmark of real-world consumer medical questions, with long-form answers evaluated by medical professionals. The resource is intended to support more accurate assessment of LLM performance in medical question answering.

Research area and challenges

- Lack of comprehensive benchmarks: Existing evaluations focus on automatic metrics and multiple-choice formats, which do not adequately reflect the nuances of clinical scenarios.

- Closed-source studies: Many studies on long-form medical QA are not publicly accessible, limiting reproducibility and the enhancement of existing baselines.

- Absence of human annotations: There's a scarcity of datasets with human medical expert annotations, hindering the development of reliable evaluation metrics.

How Labelbox was used

To build a dataset of real-world medical questions, the researchers collected 4,271 queries from the Lavita Medical AI Assist platform, spanning 1,693 conversations (single-turn and multi-turn). After deduplication, removal of sample questions, and filtering out non-English entries with Lingua, the dataset was refined to 2,698 unique queries.

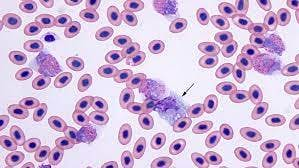
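The cleaning steps described above (deduplication, removal of sample questions, and language filtering) can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the function names, the whitespace and case normalization rule, and the `is_english` callback are all assumptions. In practice the callback would wrap a real language detector such as Lingua; here a crude stand-in is used so the example runs with the standard library alone.

```python
import re
from typing import Callable, Iterable


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so near-identical queries compare equal."""
    return re.sub(r"\s+", " ", query).strip().lower()


def clean_queries(
    queries: Iterable[str],
    sample_questions: Iterable[str],
    is_english: Callable[[str], bool],
) -> list:
    """Drop duplicates, platform sample questions, and non-English queries.

    `is_english` is a hypothetical hook: plug in any language detector.
    """
    samples = {normalize(q) for q in sample_questions}
    seen = set()
    kept = []
    for query in queries:
        key = normalize(query)
        # Skip empty strings, repeats, and the platform's canned sample questions.
        if not key or key in seen or key in samples:
            continue
        seen.add(key)
        if is_english(query):
            kept.append(query.strip())
    return kept


def ascii_only(text: str) -> bool:
    # Stand-in detector for illustration only; NOT a real language identifier.
    return text.isascii()


raw = [
    "What causes migraines?",
    "what causes  migraines?",        # duplicate after normalization
    "How do I use this assistant?",   # hypothetical sample question
    "¿Qué causa la migraña?",         # non-English
]
cleaned = clean_queries(raw, ["How do I use this assistant?"], ascii_only)
print(cleaned)
```

Passing the detector in as a callback keeps the deduplication logic independent of any particular language-identification library.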

Pairwise annotation tasks were completed on Labelbox's annotation platform. Human evaluations were conducted by a group of three medical doctors specializing in radiology and pathology, with two doctors assigned to each batch.

Before starting the main round of annotations, the researchers shared the annotation scheme with the doctors and conducted a trial round with each on a small sample of questions. They then gathered the doctors’ feedback to ensure that all annotation criteria were clear and that there was no ambiguity regarding the instructions. After confirming clarity and receiving approval from the doctors, they proceeded with the main batches of annotations.

LLM-as-a-Judge

The researchers designed their LLM-as-a-judge prompt by combining templates from Zheng et al. and WildBench. The prompt enables pairwise comparison of responses across multiple criteria. GPT-4o-2024-08-06 and Claude-3-5-Sonnet-20241022 served as the judges.
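To make the pairwise setup concrete, here is a small sketch of how a judge prompt might be assembled per criterion and how a verdict could be parsed from the judge's reply. Everything in it is an assumption for illustration: the prompt wording, the criteria names, and the `[[A]]` / `[[B]]` / `[[C]]` verdict tags (loosely modeled on the style of pairwise templates in the LLM-as-a-judge literature) are not the authors' actual prompt, and no model API is called.

```python
import re

# Hypothetical criteria for illustration; the benchmark's actual list may differ.
CRITERIA = ["correctness", "helpfulness", "harmfulness"]


def build_judge_prompt(question: str, answer_a: str, answer_b: str, criterion: str) -> str:
    """Assemble a pairwise comparison prompt for a single criterion."""
    return (
        "You are comparing two answers to a medical question.\n"
        f"Criterion: {criterion}\n\n"
        f"[Question]\n{question}\n\n"
        f"[Answer A]\n{answer_a}\n\n"
        f"[Answer B]\n{answer_b}\n\n"
        "Explain briefly, then end with a verdict: "
        "[[A]], [[B]], or [[C]] for a tie."
    )


VERDICT_RE = re.compile(r"\[\[([ABC])\]\]")


def parse_verdict(judge_output: str):
    """Return 'A', 'B', 'tie', or None if no verdict tag is found."""
    matches = VERDICT_RE.findall(judge_output)
    if not matches:
        return None
    last = matches[-1]  # use the final tag in case the explanation quotes one
    return "tie" if last == "C" else last


prompts = [
    build_judge_prompt("What causes migraines?", "Answer one.", "Answer two.", c)
    for c in CRITERIA
]
print(parse_verdict("Answer A cites the relevant triggers. [[A]]"))
```

A common refinement, not shown here, is to run each comparison twice with the answer order swapped, so that a judge's position bias does not decide the outcome.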

Key findings

- Open LLMs' potential: Preliminary results indicated that open-source LLMs exhibit strong performance in medical QA, comparable to leading closed models.

- Alignment with human judgments: The research included a comprehensive analysis of LLMs acting as judges, revealing significant alignment between human evaluations and LLM assessments.

- Publicly available benchmark: The authors provided a new benchmark featuring real-world medical questions and expert-annotated long-form answers, promoting transparency and reproducibility in future research.
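As a rough illustration of how alignment between human and LLM judgments can be quantified, the sketch below computes raw percent agreement and Cohen's kappa over paired verdicts. This is an assumption about one reasonable way to measure alignment, not necessarily the analysis used in the paper, and the labels are made-up toy data.

```python
from collections import Counter


def percent_agreement(human, judge):
    """Fraction of items on which the two raters give the same label."""
    if len(human) != len(judge) or not human:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(h == j for h, j in zip(human, judge)) / len(human)


def cohens_kappa(human, judge):
    """Agreement corrected for the agreement expected by chance."""
    n = len(human)
    observed = percent_agreement(human, judge)
    human_counts = Counter(human)
    judge_counts = Counter(judge)
    # Chance agreement: product of each rater's marginal label frequencies.
    expected = sum(
        human_counts[label] * judge_counts[label] for label in human_counts
    ) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Toy verdicts (hypothetical): which answer each rater preferred per question.
human_votes = ["A", "A", "B", "B", "tie", "A"]
judge_votes = ["A", "B", "B", "B", "tie", "A"]

print(percent_agreement(human_votes, judge_votes))
print(cohens_kappa(human_votes, judge_votes))
```

Kappa is useful alongside raw agreement because two raters who both favor one label most of the time will agree often by chance alone.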

This paper has been submitted to the AIM-FM: Advancements in Medical Foundation Models Workshop at NeurIPS 2024. You can read the full paper here.
