Labelbox • April 1, 2021

How Move.ai is revolutionizing video content creation

Creating high-quality video content, whether it’s a movie, a video game, or a live broadcast, is typically cost-prohibitive. The best content is made by the studios with the best equipment and animation talent. Now, however, an emerging AI company, Move.ai, is set to change the status quo by making processes like motion capture and keypoint recognition easier, faster, and less expensive for individuals and studios alike.

Markerless motion capture

Technologies that track and gather motion capture data usually require markers. That’s why behind-the-scenes footage of action scenes, for example, often features actors in motion capture suits. These suits can be bulky and costly, and even high-quality markers struggle to capture detailed data, such as the way an object or person interacts with the floor.

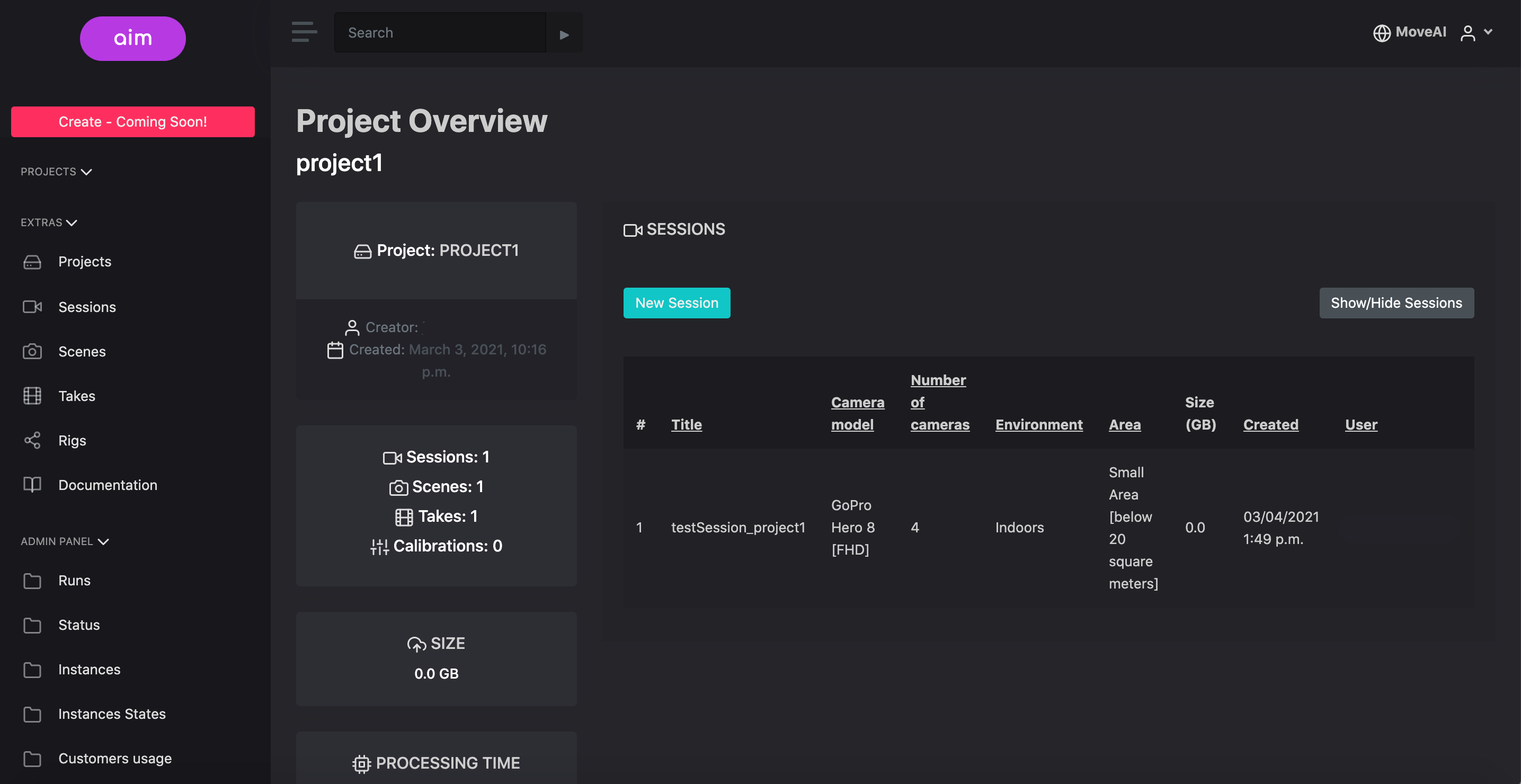

Move.ai’s markerless motion capture technology, called aim, uses computer vision algorithms to extract granular data about the movements of a person or object in a video. One client, for example, uses this technology to analyze soccer matches and produce a detailed report on each player, including strengths, weaknesses, and an individualized coaching plan for improvement.

aim was originally offered as a service: clients sent their video footage to Move.ai and received the relevant data via the cloud. Move.ai is now releasing it as a web application, so studios can process their own videos, extract motion data, and use that data in their graphics or game engine. The aim web application, launching on April 1, 2021, will enable faster iteration and turnaround for creatives and help democratize the creation of high-quality video and graphics content.

Depth keying

Move.ai also provides an AI depth keying tool, called ai key, which lets creators isolate one target person or object in a video and replace everything else on screen. Creators can use it as a cost-effective (not to mention less equipment-intensive) alternative to a green screen. The tool lets creators change not only the background of an image but also the foreground, so they can easily place the target within an extended reality environment. A news studio using this technology, for example, could broadcast multiple presenters from different locations on one screen and make it look to viewers as though they are presenting side by side.

Training algorithms quickly

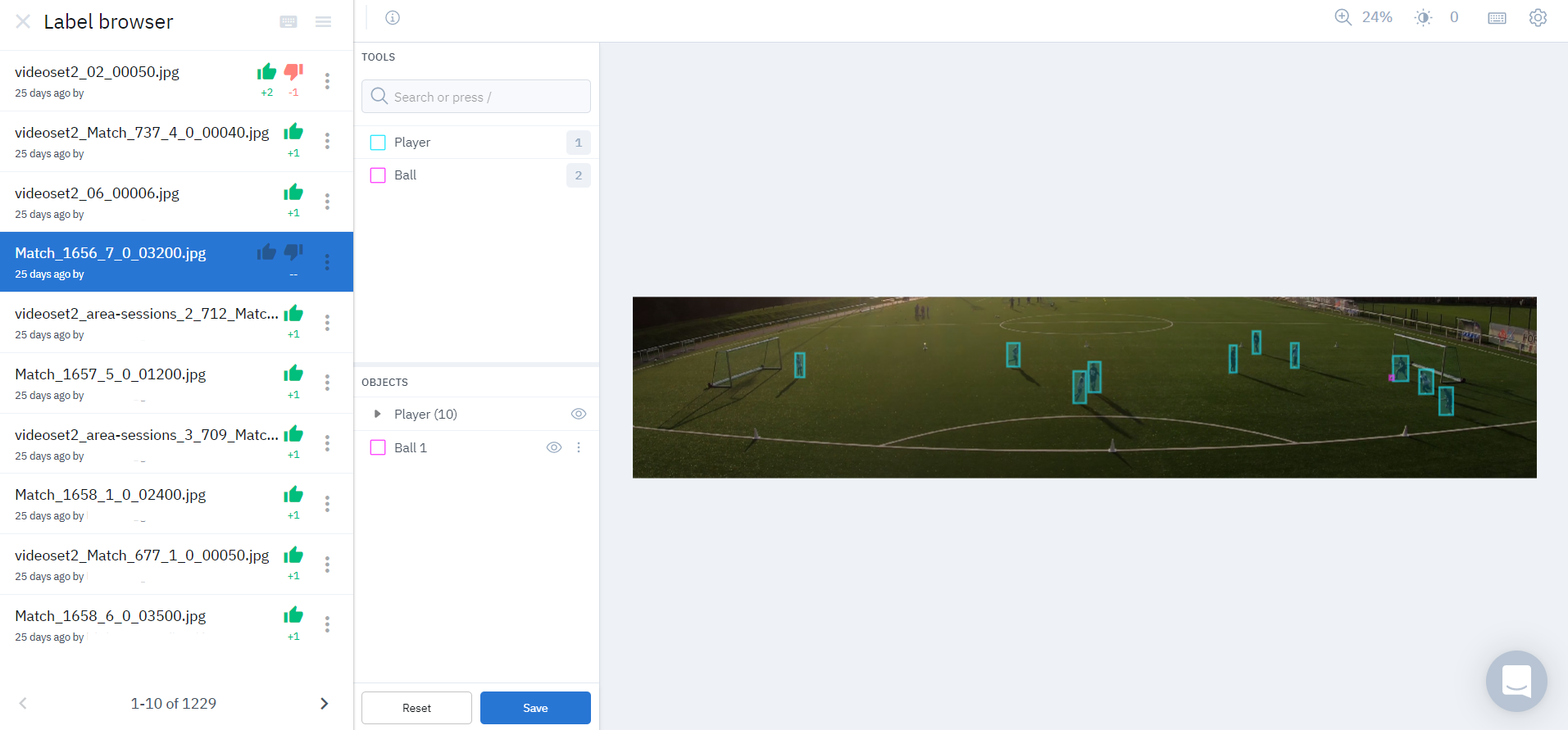

These breakthrough technologies are poised to transform the world of entertainment, but they also present Move.ai with a unique set of challenges when training its AI models. Its markerless motion capture solution, for example, requires algorithms trained not only to track a target across keyframes but also to identify that person or object, identify the people and objects that come into contact with it, and extract spatial data about the area captured within the frame. The application needs to accurately identify people and person-level attributes (e.g., the number on a jersey), and to recognize human limbs at different distances, in different poses, and when occluded by other limbs or objects.

Because Move.ai offers several AI-powered solutions, it has a variety of use cases and algorithms that require training data. The team turned to Labelbox to help it spin up a labeling team, develop training data for its models, and iterate, all at top speed. Labelbox’s dedicated workforce also delivered a high standard of labeling accuracy, and the account team responded quickly.

“What Labelbox has massively helped us with is fast iterations on our algorithms, helping us move twice as fast in this domain. The results we received from it are magnificent and their labeling user interface is the best we’ve seen for supporting our annotations efforts.”

— Mark Endemano, CEO of Move.ai

Labelbox’s training data platform is designed to help AI teams like Move.ai quickly set up a labeling pipeline that connects seamlessly with the rest of their ML operations and supports training multiple algorithms fast. After annotating data in Labelbox, the Move.ai team exports the labeled data to TensorFlow or PyTorch to train its models, then uses TensorRT for production. The whole pipeline runs on powerful GPUs: the team currently uses NVIDIA RTX GPUs for research and NVIDIA T4s for production.

Learn more about Move.ai’s products at move.ai. We’re excited to follow the breakthroughs the team will enable with access to better training data. Download our Training Data Platform 101 guide to learn how you can also accelerate getting your AI models into production with Labelbox.