Labelbox•June 8, 2020

All hands on deck: A student team's annual quest to build the world's best autonomous underwater robot with AI

The annual RoboSub competition is held in San Diego, where student teams from around the world design and build robotic submarines, otherwise known as Autonomous Underwater Vehicles (AUVs), and demonstrate the vehicles' autonomy by completing underwater tasks.

The competition consists of a timed obstacle course built from real-life tasks that test what an AUV can do, such as following pipelines, navigating through underwater gates, mapping buoys, launching torpedo-shaped markers, and more. Each of these tasks requires swift movements and precise navigation, so to complete them the vehicle must be able to sense its environment.

The RoboSub competition is a great training ground for the next generation of machine learning practitioners, as the applications for these experimental AUVs mimic those of real-world systems currently deployed around the world for underwater exploration, seafloor mapping, and sonar localization.

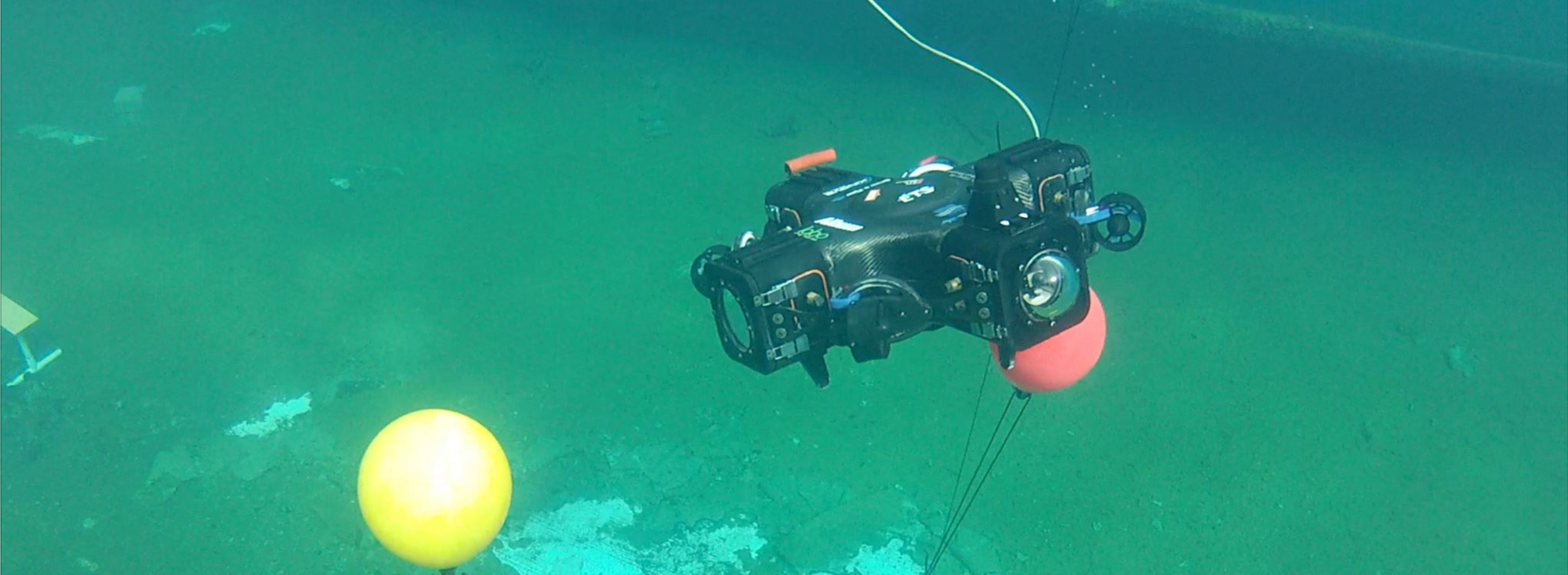

RoboSub AUV - Illustrative clip from past competitions

Since the earliest days of the company, Labelbox has been powering RoboSub teams as it aligns with our core mission of helping organizations advance machine learning. It’s particularly exciting when we find teams leveraging AI in ways that are comparable to some of our large customers. Let’s dive in and take a look!

Meet the SONIA Team.

For the past 21 years, the SONIA team, based in Montreal, Quebec, has competed with a roster of over 20 engineering students and has consistently ranked in the top spots, even taking home the championship in 2011. Each year, the team designs, builds and tests the sub-systems of its AUV with the goal of winning the annual RoboSub event. Examples of missions from past competitions include identifying and touching buoys, dropping markers, localizing the AUV to a position using acoustic beacons (pingers), and then manipulating or deploying projectiles and moving or releasing objects before surfacing.

Martin is the mastermind behind Team SONIA’s deep learning implementation. It’s essential for an AUV to interact well with its environment, and Martin believes that while equipping the AUV with accurate sensors and actuators is highly important, one of the team's main competitive advantages is having performant machine learning models and software.

Going into the competition, Martin and the team had high hopes for taking home one of the top spots. Because the competition is points-based, the team that scores the most points wins the bragging rights and prize money, and the SONIA team felt confident in its ability to put up a strong showing.

To give you an idea of the technology involved, their AUV is equipped with two cameras, a Doppler Velocity Log (DVL), an Inertial Measurement Unit (IMU), four hydrophones and a mechanical imaging sonar. These sensors enable the vehicle to hear, see and measure its speed, acceleration and underwater position. It can also accurately measure the distance to objects ahead. In order to interact with its environment, the AUV is equipped with six thrusters, an active grabbing system and even a torpedo launcher!

The fundamental goal of the mission was for each AUV to demonstrate its autonomy by completing various tasks themed on games of chance. Heading to San Diego, the SONIA team thought they already had a good amount of training data for the Dice and Roulette challenges, since initial tests showed that their models were detecting objects fairly well even without any data from Transdec in San Diego, the underwater acoustic test facility where the competition is held. Labelbox’s free academic license (link) for student research and competitions also gave them a convenient cloud-based tool for creating and managing all of their training data.

Three weeks before the event, the competition's Cash In objective (which required an AUV to drop physical casino chips into an area) was changed. This meant that the SONIA team had to scrap all of their initial datasets, since the objects they had initially labeled were too far from reality. In the days leading up to the competition, Martin and his team came up with a solution: create more training data. Using Labelbox, they added around 1,500 labels, for a total of 10,000-11,000 images of each of the dice and the roulette.

Overall, the deep learning implementation and the high-quality training data created with Labelbox helped the SONIA team considerably in achieving one of the top three spots in the competition, a result they were very proud of.

To improve their chances of winning future events, Martin and his team have focused on process automation. They are using Apache Airflow to automate their machine learning pipeline, and their data transformation (ETL) pipelines are now fully automated.
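To give a feel for what this kind of automation involves: an orchestrator such as Airflow runs each task only after the tasks it depends on have finished. The sketch below illustrates that idea with Python's standard-library `graphlib` (Python 3.9+) as a stand-in. It does not use Airflow's API, and the task names and placeholder callables are hypothetical, not taken from the SONIA team's code.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each maps to the set of tasks it depends on.
PIPELINE = {
    "extract_images": set(),
    "upload_for_labeling": {"extract_images"},
    "export_labels": {"upload_for_labeling"},
    "convert_to_tfrecord": {"export_labels"},
    "train_model": {"convert_to_tfrecord"},
}


def run_pipeline(tasks, runners):
    """Run every task once, only after all of its dependencies have run."""
    completed = []
    for name in TopologicalSorter(tasks).static_order():
        runners[name]()          # execute the task's callable
        completed.append(name)   # record the order for inspection
    return completed


# Placeholder callables standing in for the real work of each stage.
runners = {name: (lambda n=name: print(f"running {n}")) for name in PIPELINE}

order = run_pipeline(PIPELINE, runners)
```

A real orchestrator adds scheduling, retries and logging on top of this ordering, which is what makes a hand-run sequence of scripts repeatable.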

Here’s a short list of the steps in their ML workflow, as Martin described it to us:

- Extraction of images from raw Robot Operating System (ROS) data.

- Labelbox project creation using the GraphQL API to import unlabeled images for annotation.

- Use of Labelbox’s distributed labeling features to allow the SONIA team to collaborate quickly from different locations and to meet their tight project timelines.

- Extraction of labeled images and annotations from Labelbox.

- Transformation of the extracted images and annotations into the TensorFlow TFRecord format.

- Tuning of hyperparameters and training configuration.

- Training of their model using the TensorFlow Object Detection API. Training took about 5 hours per model on an NVIDIA P100 GPU from Google Cloud.
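As a rough illustration of the conversion step in the workflow above: the TensorFlow Object Detection API expects bounding boxes as corner coordinates normalized to the range [0, 1] by the image size. The minimal sketch below does that arithmetic in plain Python. The input layout (pixel-space `left`, `top`, `width`, `height` plus a `label`) and the function name are assumptions for illustration; they are not Labelbox's export schema or the team's actual code.

```python
def to_normalized_boxes(annotations, image_width, image_height):
    """Convert pixel-space boxes into normalized corner coordinates.

    `annotations` is a list of dicts with hypothetical keys
    `label`, `left`, `top`, `width`, `height` (all in pixels).
    """
    boxes = {"xmin": [], "xmax": [], "ymin": [], "ymax": [], "class_text": []}
    for ann in annotations:
        right = ann["left"] + ann["width"]
        bottom = ann["top"] + ann["height"]
        # Divide by the image size and clamp to [0, 1] in case a box
        # was drawn slightly outside the image bounds.
        boxes["xmin"].append(max(0.0, ann["left"] / image_width))
        boxes["xmax"].append(min(1.0, right / image_width))
        boxes["ymin"].append(max(0.0, ann["top"] / image_height))
        boxes["ymax"].append(min(1.0, bottom / image_height))
        boxes["class_text"].append(ann["label"])
    return boxes


example = to_normalized_boxes(
    [{"label": "dice", "left": 160, "top": 120, "width": 320, "height": 240}],
    image_width=640,
    image_height=480,
)
```

In a full conversion script, these lists would typically be written into a `tf.train.Example` under feature keys such as `image/object/bbox/xmin` and `image/object/class/text`, alongside the encoded image bytes.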

The SONIA team is committed to the advancement of robotics and to offering an educational environment. You can check out their source project on GitHub here: (Link). We also appreciate the great feedback and suggestions they have given us for Labelbox.

We’ll be cheering for the SONIA team as they embark on future competitions!
